Collections

Generate images

Use AI to generate images & photos with an API

Caption videos

Use AI to caption videos with an API

Generate speech

Use AI for text-to-speech or to clone your voice via API

Generate images from a face

Use AI to generate images from a face with an API

Generate videos

Use AI to generate videos with an API

Upscale images with super resolution

Use AI to upscale images with super resolution with an API

Generate music

Use AI to generate music with an API

Edit any image

Use AI to edit any image via API

Transcribe speech to text

Use AI to transcribe speech to text via API

OCR to extract text from images

Use AI for optical character recognition (OCR) to extract text from images via API

Remove backgrounds

Use AI to remove backgrounds from images and videos with an API

FLUX family of models

FLUX AI models: advanced image generation & editing via API

Restore images

Use AI to restore images via API

Enhance videos

Use AI to enhance videos via API

Detect NSFW content

Detect NSFW content in images and text

Classify text

Classify text by sentiment, topic, intent, or safety

Speaker diarization

Identify speakers from audio and video inputs

Create realistic face swaps

Replace faces across images with natural-looking results

Turn sketches into images

Transform rough sketches into polished visuals

Generate emojis

Generate custom emojis from text or images

Generate anime-style images and videos

Create anime-style characters, scenes, and animations

Large Language Models (LLMs)

Explore Large Language Models (LLMs) for chat, generation & NLP tasks via API

Try AI models for free

Try AI models for free: video generation, image generation, upscaling, and photo restoration

Generate videos from images

Use AI to generate videos from images with an API

Lipsync videos

Use AI to generate lipsync videos with an API

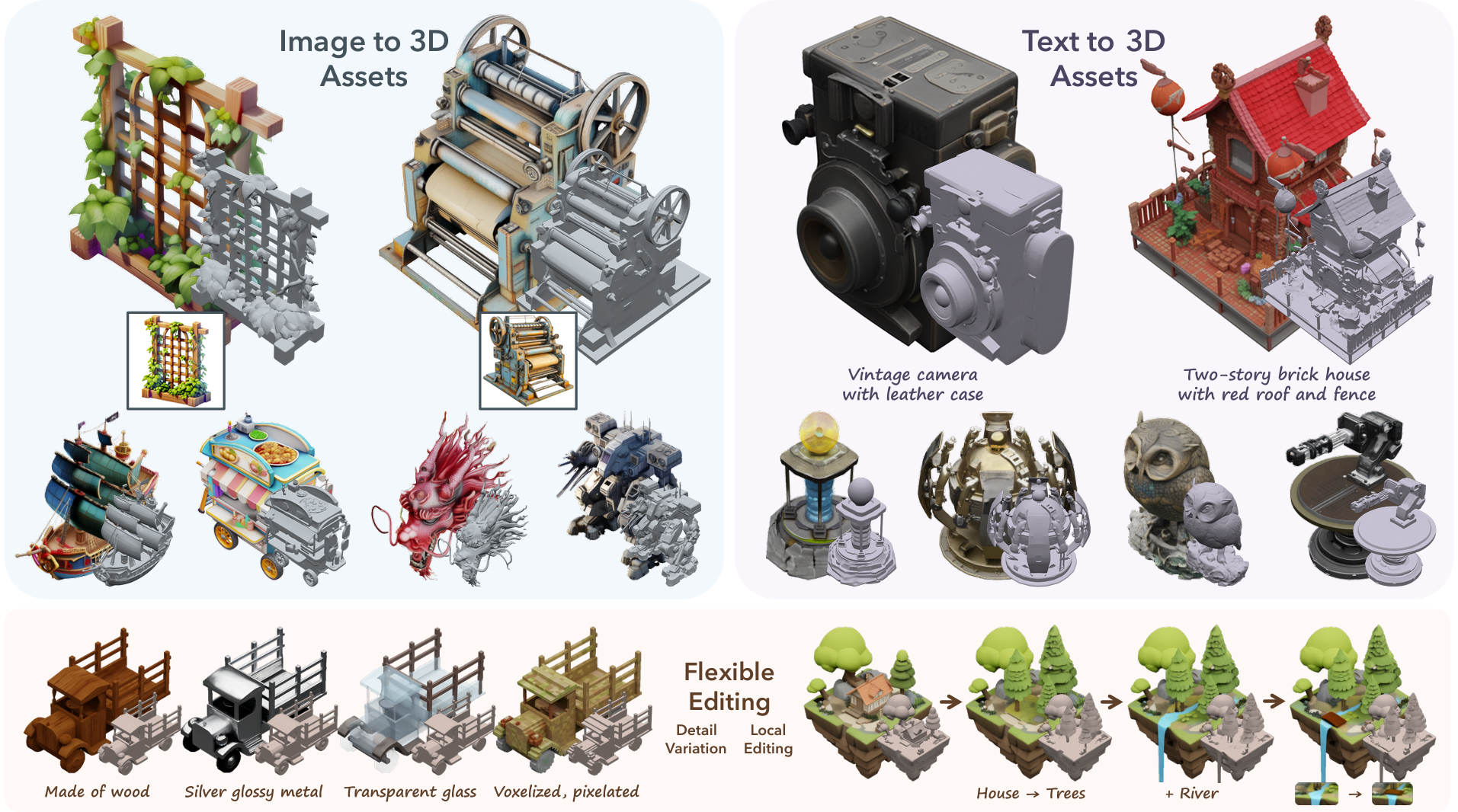

Create 3D content

Use AI to create 3D content with an API

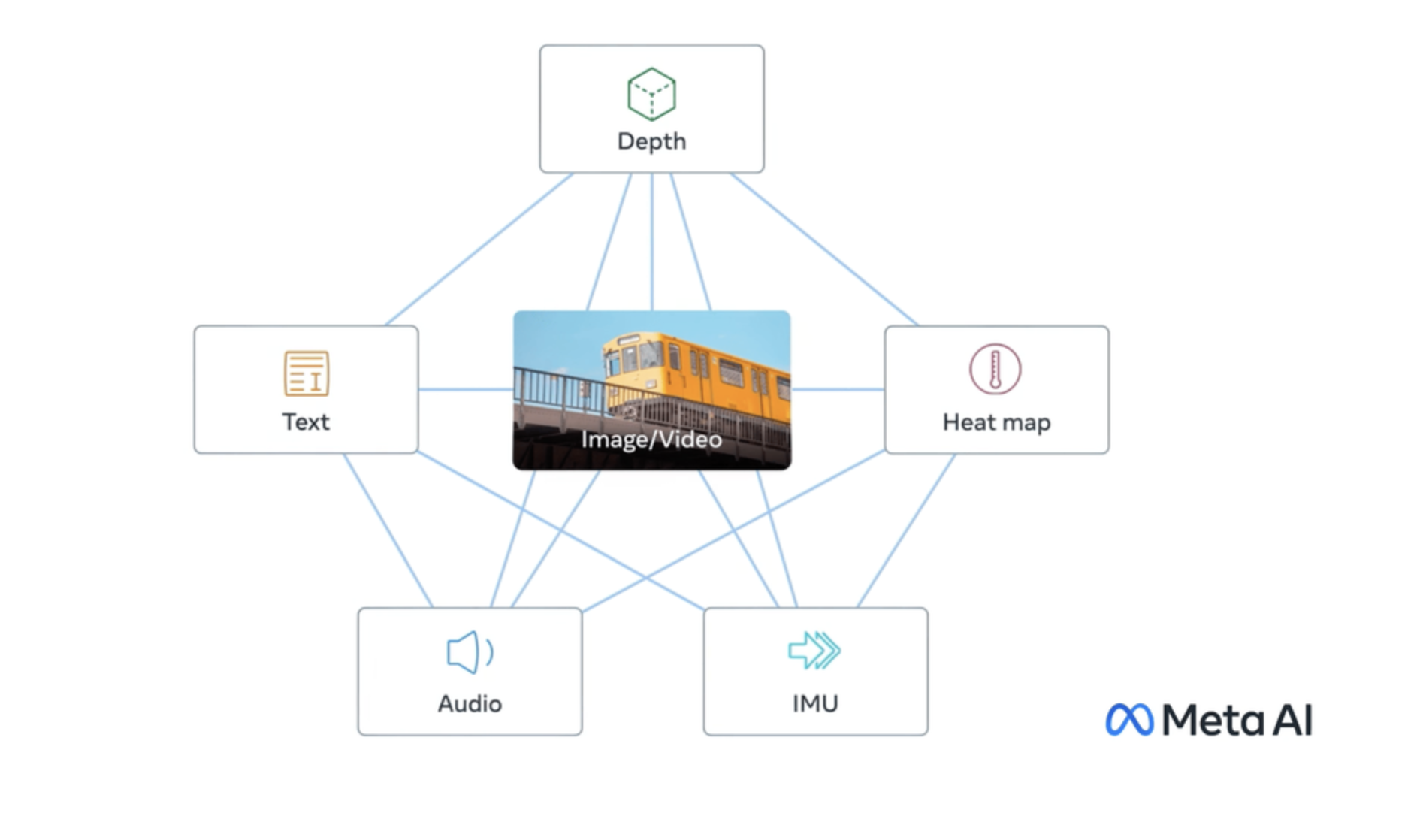

Vision models

Chat with images for understanding, captioning & detection via API

Control image generation

Use AI to control image generation with an API

Embedding models

Embedding models for AI search and analysis

Edit your videos

Use AI to edit your videos with an API

Object detection and segmentation

Use AI object detection and segmentation models to distinguish objects in images & videos

Official AI models

Official AI models: always available, stable, and predictably priced

Flux fine-tunes

Flux fine-tunes: build and run custom AI image models via API

Kontext fine-tunes

Kontext fine-tunes: build custom AI image models via API

Create songs with voice cloning

Create songs with voice cloning models via API

Media utilities

AI media utilities: auto-caption, watermark, frame extraction & more via API

Qwen-Image fine-tunes

Browse Qwen-Image fine-tunes custom-trained by the community on Replicate

WAN family of models

WAN family of models: powerful image-to-video & text-to-video models

Caption images

Use AI to caption images with an API