Transcribe speech to text

Transcribe audio to text in multiple languages.

Our pick: Incredibly Fast Whisper

For most needs, use vaibhavs10/incredibly-fast-whisper. It really is fast (10x quicker than the original Whisper), cheap, accurate, and supports many languages.

For speaker labels: WhisperX

Need to label speakers or get word-level timestamps? victor-upmeet/whisperx has you covered. It's slightly more expensive than incredibly-fast-whisper but still very fast.

You can also check out our Speaker Diarization collection for models that can identify speakers from audio and video.

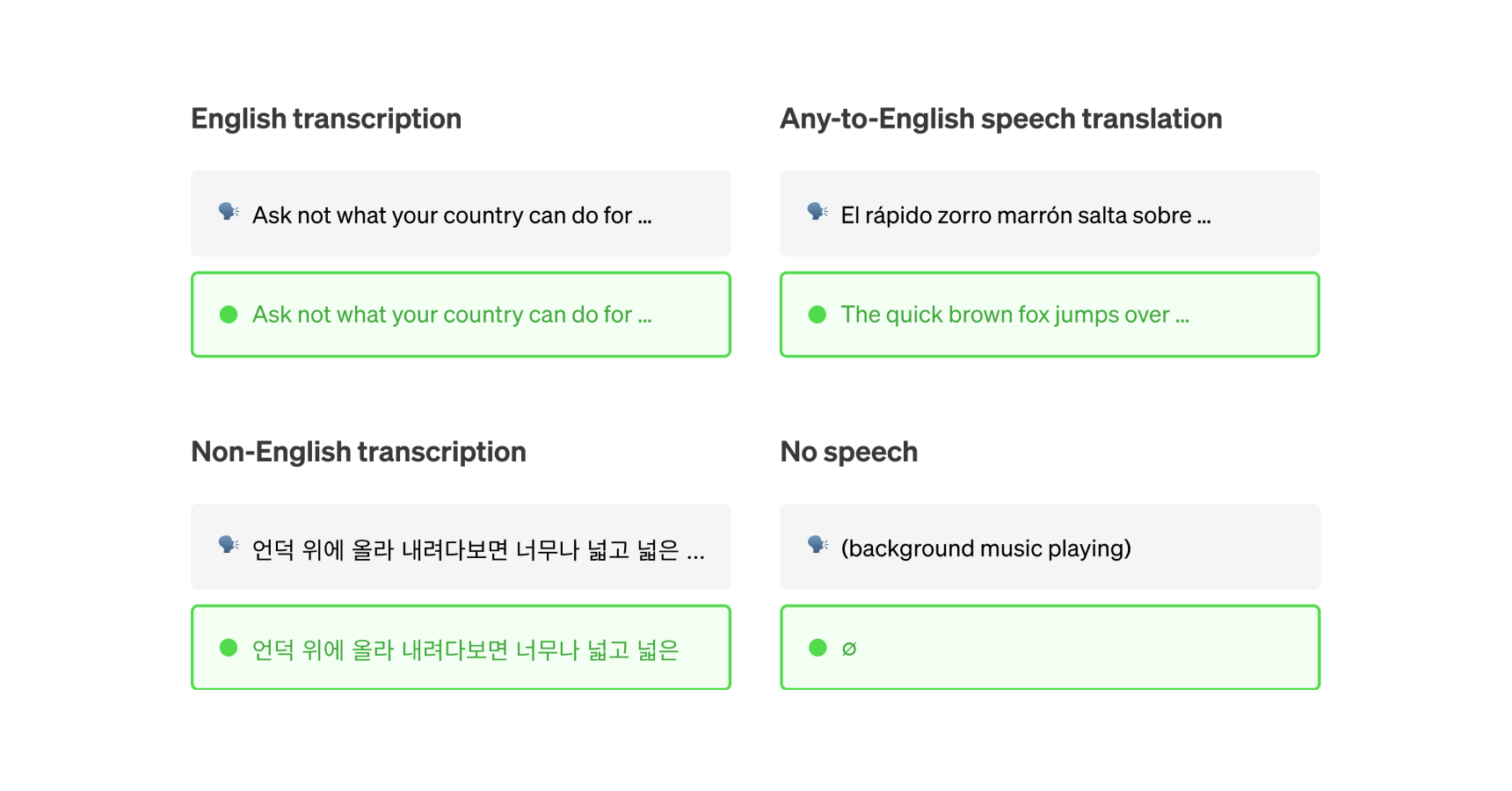

Translation: SeamlessM4T

To translate speech between languages, cjwbw/seamless_communication is your friend.

This single unified model handles all of the following tasks, so you don't need a separate model for each:

- Speech-to-speech translation (S2ST)

- Speech-to-text translation (S2TT)

- Text-to-speech translation (T2ST)

- Text-to-text translation (T2TT)

- Automatic speech recognition (ASR)
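Each task abbreviation encodes its input and output modality: S for speech, T for text. The sketch below turns that naming into a lookup you could use to check what kind of input a task needs before calling the model. The `TASKS` table and `modalities` function are hypothetical helpers derived from the list above, not part of the model's API.

```python
# Hypothetical lookup derived from the task list above (not the model's API).
# Each entry maps a task abbreviation to (input modality, output modality).
TASKS = {
    "S2ST": ("speech", "speech"),  # speech-to-speech translation
    "S2TT": ("speech", "text"),    # speech-to-text translation
    "T2ST": ("text", "speech"),    # text-to-speech translation
    "T2TT": ("text", "text"),      # text-to-text translation
    "ASR": ("speech", "text"),     # speech recognition, same language in and out
}


def modalities(task: str) -> tuple[str, str]:
    """Return (input, output) modalities for a task abbreviation (case-insensitive)."""
    try:
        return TASKS[task.upper()]
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None


print(modalities("s2tt"))  # ('speech', 'text')
```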

Featured models

- openai/gpt-4o-transcribe: A speech-to-text model that uses GPT-4o to transcribe audio (35.8K runs).

- victor-upmeet/whisperx: Accelerated transcription, word-level timestamps and diarization with whisperX large-v3 (6.4M runs).

- vaibhavs10/incredibly-fast-whisper: whisper-large-v3, incredibly fast, powered by Hugging Face Transformers! 🤗 (26.5M runs).


Frequently asked questions

Which models are the fastest for transcribing speech?

If speed is your top priority, vaibhavs10/incredibly-fast-whisper and openai/gpt-4o-transcribe are among the fastest models in the speech-to-text collection. They return transcripts quickly, which suits near real-time uses like voice notes, quick interviews, or interactive applications.

Keep in mind that faster models may not include advanced features like speaker labeling or word-level timestamps.

Which models offer the best balance of transcription accuracy and flexibility?

openai/whisper is a reliable general-purpose option that works well with clean audio and single-speaker recordings. It offers multilingual support and solid accuracy for most everyday transcription needs.

If you need more structure—like timestamps or speaker labels—victor-upmeet/whisperx adds those capabilities without a massive jump in runtime.

What works best for clean, single-speaker audio?

For clear recordings like lectures, podcasts, or voice memos, vaibhavs10/incredibly-fast-whisper or openai/whisper are great choices. They deliver accurate transcripts quickly and handle common accents well.

What’s best for transcribing meetings or multi-speaker conversations?

If your audio includes multiple speakers—like team meetings, interviews, or panel discussions—victor-upmeet/whisperx is your best bet. It adds speaker diarization and word-level timestamps so you can keep track of who said what.
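To turn diarized output into a readable "who said what" transcript, you can merge consecutive segments from the same speaker. This minimal sketch assumes the model returns a list of segments, each a dict with `start`, `end`, `text` and `speaker` keys; that layout is an assumption modeled on whisperX-style segments, so check the actual output on the model page. `merge_speaker_turns` and `format_turns` are hypothetical helpers.

```python
from typing import Iterable


def merge_speaker_turns(segments: Iterable[dict]) -> list[dict]:
    """Merge consecutive segments from the same speaker into single turns.

    Assumes each segment has "start", "end" and "text" keys and an optional
    "speaker" key; unlabeled segments are grouped under "UNKNOWN".
    """
    turns: list[dict] = []
    for seg in segments:
        speaker = seg.get("speaker", "UNKNOWN")
        text = seg["text"].strip()
        if turns and turns[-1]["speaker"] == speaker:
            # Same speaker kept talking: extend the current turn.
            turns[-1]["end"] = seg["end"]
            turns[-1]["text"] += " " + text
        else:
            # New speaker (or first segment): start a new turn.
            turns.append({"speaker": speaker, "start": seg["start"],
                          "end": seg["end"], "text": text})
    return turns


def format_turns(turns: list[dict]) -> str:
    """Render turns as 'SPEAKER [start-end]: text' lines."""
    return "\n".join(
        f"{t['speaker']} [{t['start']:.1f}-{t['end']:.1f}]: {t['text']}" for t in turns
    )


# Illustrative segments (made-up data, not real model output).
segments = [
    {"start": 0.0, "end": 2.5, "text": " Hi all.", "speaker": "SPEAKER_00"},
    {"start": 2.5, "end": 4.0, "text": " Let's start.", "speaker": "SPEAKER_00"},
    {"start": 4.2, "end": 6.0, "text": " Sounds good.", "speaker": "SPEAKER_01"},
]
print(format_turns(merge_speaker_turns(segments)))
# SPEAKER_00 [0.0-4.0]: Hi all. Let's start.
# SPEAKER_01 [4.2-6.0]: Sounds good.
```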

How do the main types of speech-to-text models differ?

- Basic transcription: Converts audio to text with no extra metadata. Good for single-speaker, clean audio.

- Diarization and timestamps: Adds speaker labels and word-level timing, ideal for meetings and interviews (e.g., whisperx).

- Multilingual and translation: Some models can detect or translate languages directly (see cjwbw/seamless_communication).

- Speed vs features: Faster models (e.g., incredibly-fast-whisper, gpt-4o-transcribe) focus on getting text out quickly, while feature-rich ones provide more structured output.
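These trade-offs boil down to a rule of thumb. The function below is a hypothetical sketch that encodes this page's recommendations; it is not an official selector, so still check each model page for current capabilities and pricing.

```python
def pick_model(need_translation: bool = False,
               need_speakers: bool = False,
               need_word_timestamps: bool = False) -> str:
    """Return a model name following this page's recommendations (rule of thumb only)."""
    if need_translation:
        # Translation needs a model that does more than transcription.
        return "cjwbw/seamless_communication"
    if need_speakers or need_word_timestamps:
        # Diarization and word-level timing: slightly slower and pricier.
        return "victor-upmeet/whisperx"
    # Plain transcription: favor speed and cost.
    return "vaibhavs10/incredibly-fast-whisper"


print(pick_model(need_speakers=True))  # victor-upmeet/whisperx
```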

What’s best for multilingual or translation-heavy work?

If you need transcription in multiple languages or want translations built in, cjwbw/seamless_communication is a strong option. Beyond transcription, it can translate between speech and text in different languages and can handle more complex audio scenarios like mixed-language conversations.

What types of outputs can I expect from speech-to-text models?

Most models produce plain text transcripts. Some also include:

- Word- or phrase-level timestamps.

- Speaker labels for multi-speaker audio.

- Language detection and confidence metadata.

- Optional translations, if the model supports them.
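If a model returns timestamped segments, you can turn them into subtitles. This sketch writes SubRip (SRT) text, assuming segments are dicts with `start` and `end` in seconds and a `text` string; that key layout is an assumption, so adapt it to the model's actual output. `srt_timestamp` and `to_srt` are hypothetical helper names.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[dict]) -> str:
    """Convert segments with 'start', 'end' (seconds) and 'text' keys to SRT text."""
    blocks = []
    for i, seg in enumerate(segments, start=1):
        # Each SRT cue: index, time range, text; cues are separated by a blank line.
        blocks.append(
            f"{i}\n{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    return "\n".join(blocks)


print(srt_timestamp(3725.5))  # 01:02:05,500
```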

How can I self-host or push a speech-to-text model to Replicate?

You can package your own model with Cog and push it to Replicate. This lets you control how it’s run, updated, and shared, whether you’re adapting an open-source model or deploying a fine-tuned one.

Can I use speech-to-text models for commercial work?

Many models in the speech-to-text collection allow commercial use, but licenses vary. Some models have conditions or attribution requirements, so always check the model page before using transcripts in commercial projects.

How do I use speech-to-text models on Replicate?

- Choose a model from the speech-to-text collection.

- Upload your audio file or paste a URL.

- Set options like language hints or diarization if supported.

- Run the model to generate a transcript.

- Download or integrate the results into your workflow.
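The same steps can be scripted with Replicate's Python client (`pip install replicate`, with `REPLICATE_API_TOKEN` set in your environment), whose `replicate.run(model, input={...})` call runs a model and returns its output. In this minimal sketch the `transcribe` helper is hypothetical, and the input name `audio` is an assumption based on incredibly-fast-whisper; other models use different input names and options, so copy them from the model's API page. Some models may also need a pinned version (`owner/model:version`).

```python
from typing import Any, Callable, Optional


def transcribe(audio_url: str,
               model: str = "vaibhavs10/incredibly-fast-whisper",
               options: Optional[dict] = None,
               audio_key: str = "audio",
               run: Optional[Callable[..., Any]] = None) -> Any:
    """Run a speech-to-text model on Replicate and return its raw output.

    options: extra model inputs such as a language hint or diarization flag;
             names differ per model, so copy them from the model's API page.
    audio_key: name of the model's audio input (assumed "audio" here).
    run: defaults to replicate.run; pass a stub to test without network access.
    """
    if run is None:
        import replicate  # imported lazily so the helper can be tested offline
        run = replicate.run
    # Audio goes under audio_key; any extra options are passed through unchanged.
    inputs = {audio_key: audio_url, **(options or {})}
    return run(model, input=inputs)
```

For example, `transcribe("https://example.com/interview.mp3")` would send that placeholder URL to incredibly-fast-whisper. Injecting `run` keeps the network call at the edge, so the input-building logic can be checked without an API token.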

What should I keep in mind when transcribing audio?

- Clear audio improves transcription quality. Minimize background noise when possible.

- Not every model supports timestamps, speaker labels, or translation—check before running.

- For long recordings, splitting the file can speed up processing and improve reliability.

- File format matters: check which audio formats a model accepts. Whisper-based models work on 16 kHz audio internally, so 16 kHz WAV is a safe default.

- If you’re working with multilingual audio, test a short clip first to gauge accuracy.

- For larger projects, plan your workflow with model runtime and capabilities in mind.
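For the splitting and format tips, Python's standard-library `wave` module is enough to check a WAV file's sample rate and cut it into fixed-length chunks. This is a minimal sketch: it does not resample, so convert other formats first, for example with `ffmpeg -i input.mp3 -ar 16000 -ac 1 output.wav`. The helper names and the 10-minute default chunk length are illustrative choices, not requirements of any model.

```python
import wave
from pathlib import Path


def wav_info(path: str) -> tuple[int, int]:
    """Return (sample_rate, channels) so you can check for 16 kHz mono."""
    with wave.open(path, "rb") as wf:
        return wf.getframerate(), wf.getnchannels()


def split_wav(path: str, out_dir: str, chunk_seconds: float = 600.0) -> list[str]:
    """Split a WAV file into consecutive chunks of at most chunk_seconds each.

    Returns the chunk file paths in order. Each chunk keeps the source's
    sample rate, sample width and channel count.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths: list[str] = []
    with wave.open(path, "rb") as src:
        params = src.getparams()
        frames_per_chunk = max(1, int(chunk_seconds * src.getframerate()))
        index = 0
        while True:
            frames = src.readframes(frames_per_chunk)
            if not frames:  # empty bytes means end of file
                break
            chunk_path = out / f"{Path(path).stem}_{index:03d}.wav"
            with wave.open(str(chunk_path), "wb") as dst:
                dst.setparams(params)  # frame count in the header is fixed on close
                dst.writeframes(frames)
            paths.append(str(chunk_path))
            index += 1
    return paths
```

Fixed cut points can split a word across two chunks; if that matters, overlap the chunks slightly or cut at silences instead.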