Recommended Models

rafaelgalle/whisper-diarization-advanced

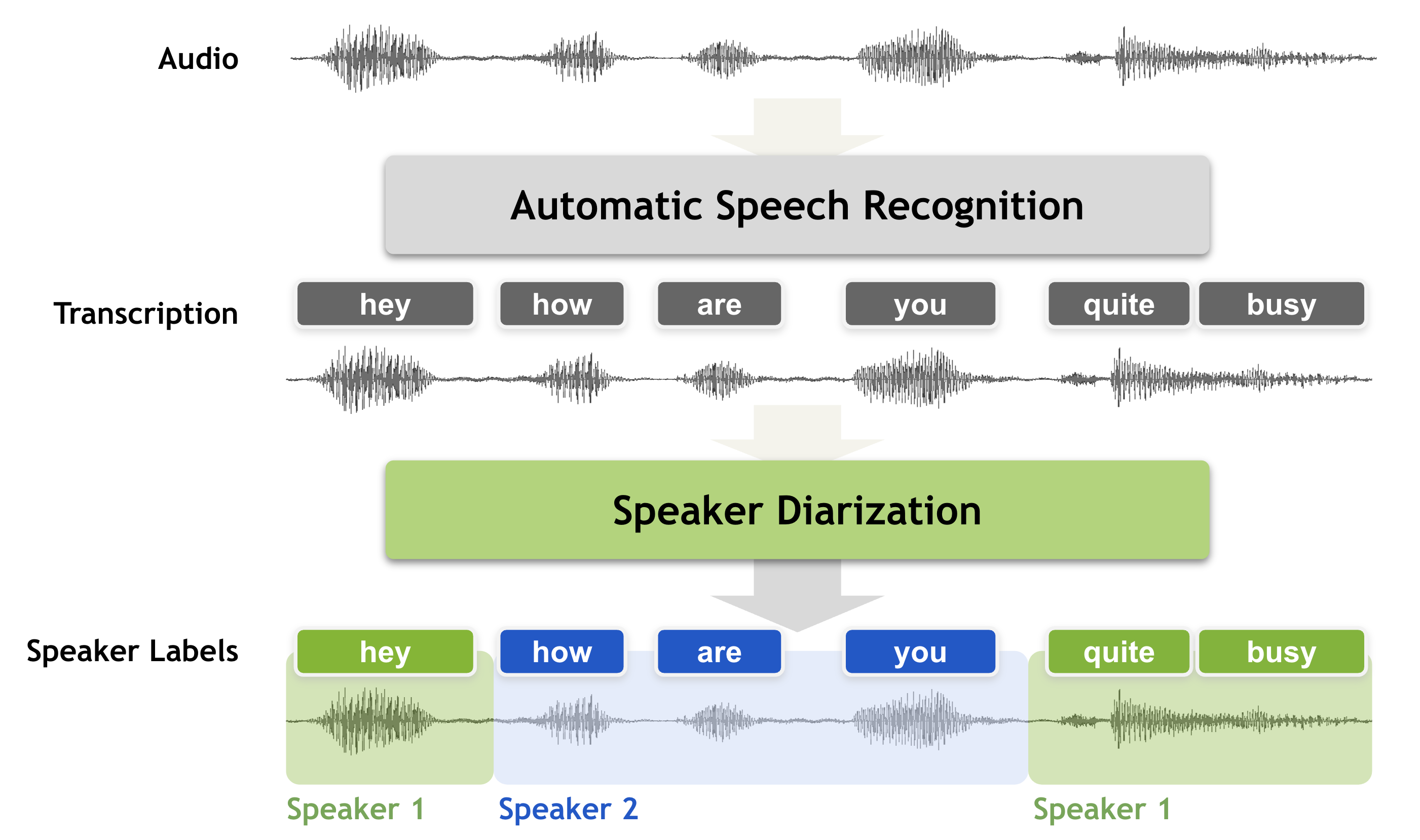

Ultra-fast, customizable speech-to-text and speaker diarization for noisy, multi-speaker audio. Includes advanced noise reduction, stereo channel support, and flexible audio preprocessing. Ideal for call centers, meetings, and podcasts.

Updated 3 months, 3 weeks ago

398.4K runs

thomasmol/whisper-diarization

⚡️ Blazing fast audio transcription with speaker diarization | Whisper Large V3 Turbo | word & sentence level timestamps | prompt

Updated 1 year ago

6.1M runs

victor-upmeet/whisperx

Accelerated transcription, word-level timestamps, and diarization with whisperX large-v3

Updated 1 year, 5 months ago

6.4M runs

collectiveai-team/speaker-diarization-3

Segments an audio recording based on who is speaking

Updated 2 years ago

5.7K runs

vaibhavs10/incredibly-fast-whisper

whisper-large-v3, incredibly fast, powered by Hugging Face Transformers! 🤗

Updated 2 years ago

27M runs

awerks/whisperx

Fast automatic speech recognition (70x realtime with large-v2) with word-level timestamps and speaker diarization.

Updated 2 years, 5 months ago

26.1K runs

sabuhigr/sabuhi-model

Whisper AI with channel separation and speaker diarization

Updated 2 years, 6 months ago

25.5K runs

lucataco/speaker-diarization

Segments an audio recording based on who is speaking (on A100)

Updated 2 years, 7 months ago

12.8K runs

meronym/speaker-transcription

Whisper transcription plus speaker diarization

Updated 2 years, 9 months ago

28.3K runs