Create 3D content

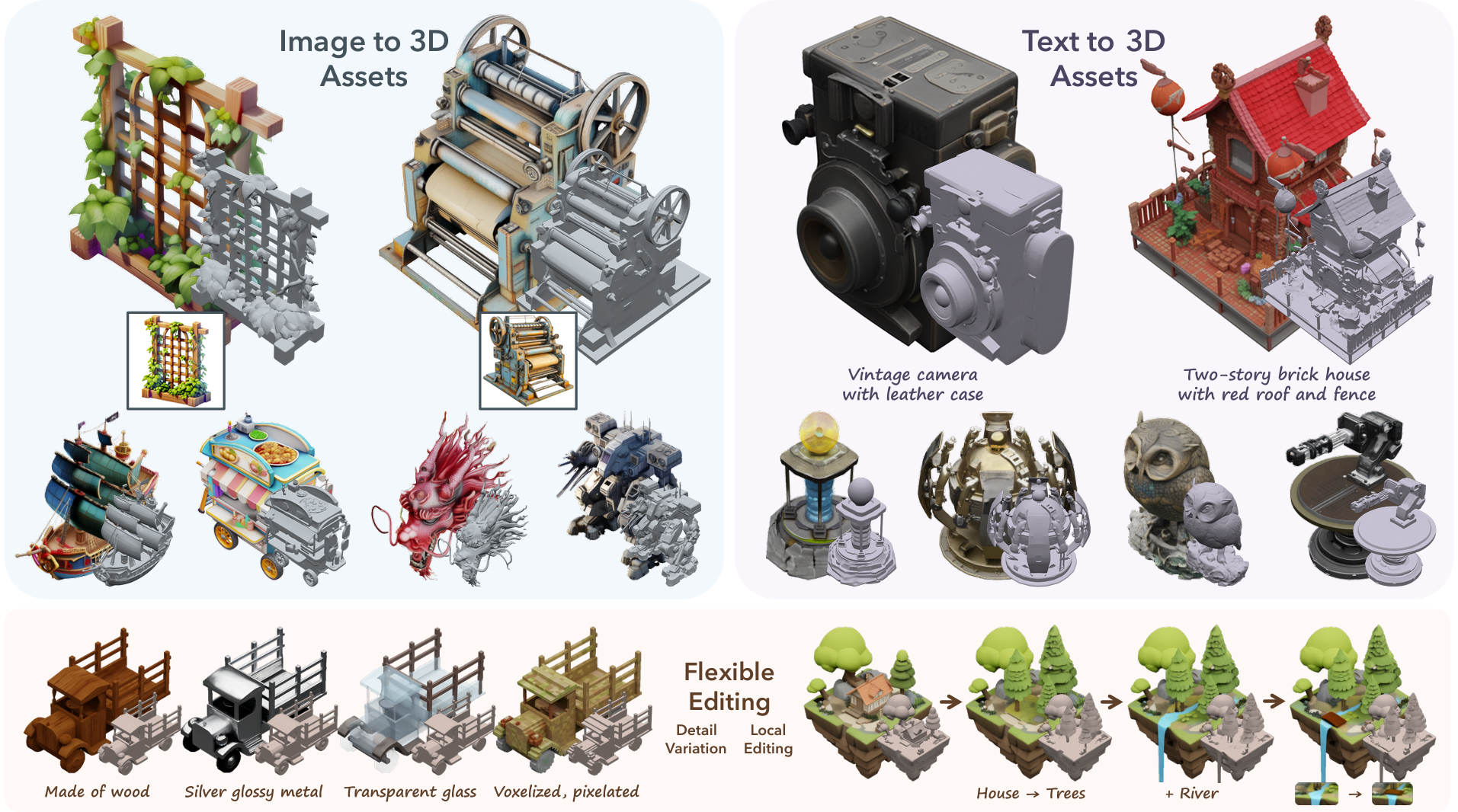

These models generate 3D content such as objects, scenes, and textures, letting you create 3D assets from text prompts and images. Key capabilities include:

- 3D shape generation: Create 3D shapes from text prompts and images.

- Multi-view rendering: Generate consistent views of 3D objects from different angles.

- Texturing: Generate textures for 3D assets based on text prompts.

Our Pick: TRELLIS

We recommend TRELLIS as the best all-around model for 3D content generation. The community-uploaded model can create a detailed 3D asset from a single image in under a minute.

Don't have an image of the asset you want to create? Create one from a text prompt with our image generation models.


Frequently asked questions

Which models are the fastest?

For most use cases, firtoz/trellis is both fast and reliable. It can turn a single reference image into a complete 3D object in under a minute.

If you’re working with smaller assets or simple geometry, adirik/wonder3d and tencent/hunyuan3d-2mv also generate results quickly, especially when fine multi-view control isn’t needed.

Which models offer the best balance of cost and quality?

firtoz/trellis strikes the best overall balance: it’s accurate, fast, and easy to use with either a real photo or a reference image generated from a text prompt.

If you need finer geometry control or multi-angle consistency, tencent/hunyuan3d-2 and tencent/hunyuan3d-2mv deliver excellent results with slightly higher compute requirements.

What works best for creating 3D objects from images?

firtoz/trellis is the go-to model for image-to-3D workflows. Upload a single image, and it reconstructs a 3D asset that captures shape and texture details accurately.

adirik/wonder3d also works well for turning images into full 3D objects but emphasizes realistic mesh generation over artistic interpretation.

What works best for text-to-3D generation?

If you’re starting from a prompt instead of an image, adirik/mvdream and cjwbw/shap-e can generate 3D assets directly from text.

They’re ideal for prototyping objects quickly or generating creative concepts for games and design projects.

What’s the difference between shape generation, multi-view rendering, and texturing?

- Shape generation builds the base 3D mesh or geometry of your object.

- Multi-view rendering creates consistent views of an object from multiple angles, useful for checking or refining how it looks from every side.

- Texturing adds surface details and materials. Models like adirik/text2tex and adirik/texture specialize in this step.

You can combine all three processes to create production-ready 3D assets.
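As a rough illustration of how the three steps chain together, here is a minimal Python sketch, assuming each stage is a function that takes and returns an asset record. The Asset class and the three stage functions are hypothetical placeholders: they only record file names where a real workflow would call a multi-view model, a shape model (such as firtoz/trellis), and a texturing model (such as adirik/text2tex). The ordering follows the tips later on this page: multi-view references before modeling, texturing after geometry.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Asset:
    """Hypothetical record of an asset as it moves through the pipeline."""
    source: str                                      # reference image path or text prompt
    views: List[str] = field(default_factory=list)   # multi-view reference renders
    mesh: Optional[str] = None                       # e.g. a .glb from a shape model
    texture: Optional[str] = None                    # texture map from a texturing model
    steps: List[str] = field(default_factory=list)   # order in which stages ran


Stage = Callable[[Asset], Asset]


def multiview_stage(asset: Asset) -> Asset:
    # Placeholder for a multi-view model: four views at 90-degree steps.
    asset.views = [f"view_{angle:03d}.png" for angle in range(0, 360, 90)]
    asset.steps.append("multiview")
    return asset


def shape_stage(asset: Asset) -> Asset:
    # Placeholder for a shape model; a real call would return a mesh file.
    asset.mesh = "object.glb"
    asset.steps.append("shape")
    return asset


def texture_stage(asset: Asset) -> Asset:
    # Texturing needs geometry to paint onto, so it must run after shape_stage.
    if asset.mesh is None:
        raise ValueError("texture_stage needs a mesh; run a shape stage first")
    asset.texture = "albedo.png"
    asset.steps.append("texture")
    return asset


def run_pipeline(asset: Asset, stages: List[Stage]) -> Asset:
    """Apply each stage in order, passing the updated asset along."""
    for stage in stages:
        asset = stage(asset)
    return asset


result = run_pipeline(Asset(source="mug.png"), [multiview_stage, shape_stage, texture_stage])
print(result.steps)  # ['multiview', 'shape', 'texture']
```

Because the multi-view stage is optional, you can drop it from the list and the pipeline still runs, while putting texture_stage before any shape stage raises an error.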

What kinds of outputs can I expect from these models?

Most models output 3D meshes (like .obj or .glb files) or multi-view renders (a set of images showing the object from different angles).

Texturing models output texture maps or material layers that can be applied to your 3D meshes in external software.
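If you want to sanity-check a downloaded file before importing it, a few lines of standard-library Python are enough. This sketch assumes the mesh is either a binary glTF (.glb) file, whose 12-byte header is the ASCII magic glTF followed by two little-endian 32-bit integers (container version and total file length) per the glTF 2.0 specification, or a Wavefront .obj file, where lines starting with v, vt, and f declare vertices, texture coordinates, and faces. The function names and sample data are made up for illustration.

```python
import struct


def read_glb_header(data: bytes) -> dict:
    """Parse the 12-byte header of a binary glTF (.glb) file."""
    if len(data) < 12:
        raise ValueError("too short to be a .glb file")
    magic, version, length = struct.unpack_from("<4sII", data, 0)
    if magic != b"glTF":
        raise ValueError("missing glTF magic bytes; not a .glb file")
    return {
        "version": version,                      # 2 for glTF 2.0
        "declared_length": length,               # total file size stated in the header
        "length_matches": length == len(data),   # False suggests a truncated download
    }


def count_obj_elements(text: str) -> dict:
    """Count vertices, texture coordinates (UVs), and faces in .obj text."""
    counts = {"vertices": 0, "uvs": 0, "faces": 0}
    keys = {"v": "vertices", "vt": "uvs", "f": "faces"}
    for line in text.splitlines():
        parts = line.split()
        if parts and parts[0] in keys:
            counts[keys[parts[0]]] += 1
    return counts


# Usage with synthetic data (a header-only .glb and a single-triangle .obj).
fake_glb = struct.pack("<4sII", b"glTF", 2, 12)
print(read_glb_header(fake_glb))
# {'version': 2, 'declared_length': 12, 'length_matches': True}

sample_obj = "v 0 0 0\nv 1 0 0\nv 0 1 0\nvt 0 0\nf 1 2 3\n"
print(count_obj_elements(sample_obj))
# {'vertices': 3, 'uvs': 1, 'faces': 1}
```

A mesh with no vt lines has no UV coordinates, so a texture map may not apply to it cleanly until it is UV-unwrapped in a tool like Blender.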

How can I self-host or push a model to Replicate?

Models like tencent/hunyuan3d-2 and cjwbw/shap-e are open source and can be self-hosted with Cog or Docker.

If you want to publish your own model on Replicate, package it with Cog: describe the environment in cog.yaml, define the inputs and outputs in a predictor (typically predict.py), and push it to your account with cog push. Replicate then handles serving and scaling.

Can I use these models for commercial work?

Many 3D generation models support commercial use, but it depends on each model's license.

Always review the License section on each model page, especially for non-commercial research models like adirik/text2tex.

How do I use or run these models?

Start with a clear reference image or a short text prompt describing your object.

For example:

- “A detailed bronze sculpture of a lion” → adirik/mvdream

- “Generate a textured 3D mug from this image” → firtoz/trellis

You’ll receive a downloadable 3D file or image renders that can be previewed or imported into tools like Blender or Unity.
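To turn the examples above into code, here is a hedged Python sketch. pick_model is a hypothetical helper that encodes this page's recommendations as simple rules; run_on_replicate is a thin wrapper around replicate.run from the replicate Python client, which reads your API token from the REPLICATE_API_TOKEN environment variable. Depending on the model, the reference may need a version suffix (owner/model:version), and input field names such as image or prompt differ between models, so copy them from each model page rather than from this sketch.

```python
from typing import Any, Dict, Optional


def pick_model(prompt: Optional[str] = None,
               image_path: Optional[str] = None,
               need_multiview: bool = False) -> str:
    """Hypothetical helper: suggest a model following this collection's FAQ."""
    if image_path:
        # Image-to-3D: TRELLIS by default, Hunyuan3D-2mv for multi-angle consistency.
        return "tencent/hunyuan3d-2mv" if need_multiview else "firtoz/trellis"
    if prompt:
        # Text-to-3D straight from a prompt.
        return "adirik/mvdream"
    raise ValueError("provide a reference image or a text prompt")


def run_on_replicate(model: str, inputs: Dict[str, Any]) -> Any:
    """Run a model with the replicate client (pip install replicate).

    Requires REPLICATE_API_TOKEN in the environment. The keys in `inputs`
    are model-specific; check the model page for the exact names.
    """
    import replicate  # imported lazily so pick_model works without the client

    return replicate.run(model, input=inputs)


print(pick_model(prompt="A detailed bronze sculpture of a lion"))  # adirik/mvdream
print(pick_model(image_path="mug.png"))                             # firtoz/trellis
```

When both an image and a prompt are given, the sketch prefers the image, mirroring the recommendation to start image-to-3D workflows with firtoz/trellis.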

What should I know before running a job in this collection?

- The clearer and more centered your reference image, the better the resulting mesh.

- Complex lighting or reflections can confuse 3D reconstruction, so choose balanced, well-lit photos.

- Multi-view models take longer but produce higher fidelity across angles.

- Texturing can be run as a second step after geometry generation for better results.
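One way to apply the first tip is to measure where the object sits in the frame before you upload. This minimal sketch assumes a light, uniform background and works on a grayscale image given as rows of 0-255 values (for example, loaded with Pillow and converted to grayscale); pixels darker than background_threshold count as the object. The function name and the default threshold of 240 are illustrative assumptions, not values any model requires.

```python
from typing import Dict, Sequence


def framing_report(gray: Sequence[Sequence[int]],
                   background_threshold: int = 240) -> Dict[str, float]:
    """Report how centered the object is and how much of the frame it fills.

    Assumes a light background: pixels below `background_threshold` are
    treated as part of the object.
    """
    height, width = len(gray), len(gray[0])
    rows = [y for y in range(height)
            if any(v < background_threshold for v in gray[y])]
    cols = [x for x in range(width)
            if any(gray[y][x] < background_threshold for y in range(height))]
    if not rows:
        raise ValueError("no object pixels found; is the background lighter than the object?")

    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    # Bounding-box center in pixel-edge coordinates, compared with the image center.
    center_x = (left + right + 1) / 2
    center_y = (top + bottom + 1) / 2
    return {
        "offset_x": (center_x - width / 2) / width,    # 0.0 means horizontally centered
        "offset_y": (center_y - height / 2) / height,  # 0.0 means vertically centered
        "fill": (right - left + 1) * (bottom - top + 1) / (width * height),
    }


# Synthetic 10x10 white image with a dark 4x4 object in the middle.
image = [[255] * 10 for _ in range(10)]
for y in range(3, 7):
    for x in range(3, 7):
        image[y][x] = 0
print(framing_report(image))  # {'offset_x': 0.0, 'offset_y': 0.0, 'fill': 0.16}
```

A large offset or a very small fill suggests cropping or reshooting so the object is centered and reasonably large; where to draw those lines is a judgment call for your own inputs.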

Any other collection-specific tips or considerations?

- For game or film assets, pair firtoz/trellis or tencent/hunyuan3d-2 with adirik/text2tex for realistic surfaces.

- adirik/zero123plusplus is great for generating multi-view reference sets before 3D modeling.

- If you only need 2D-to-3D video conversion, check out lucataco/deep3d instead.

- For consistent results, keep object scale and framing uniform across your inputs.