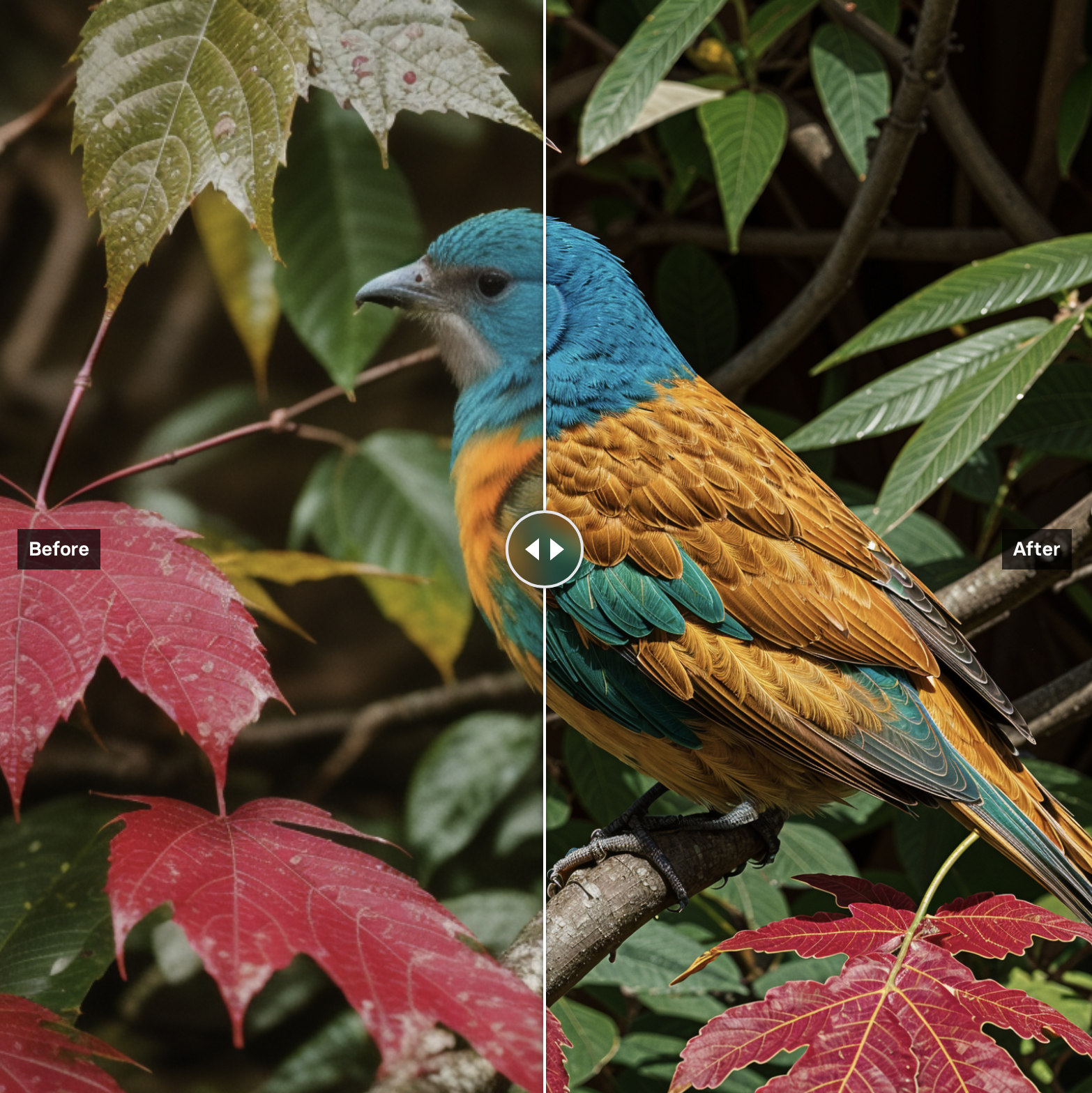

Upscale images with super resolution

These models increase image resolution and quality.

Read our guide to upscaling images with AI to learn about various upscaling models.

Key capabilities of upscaling models:

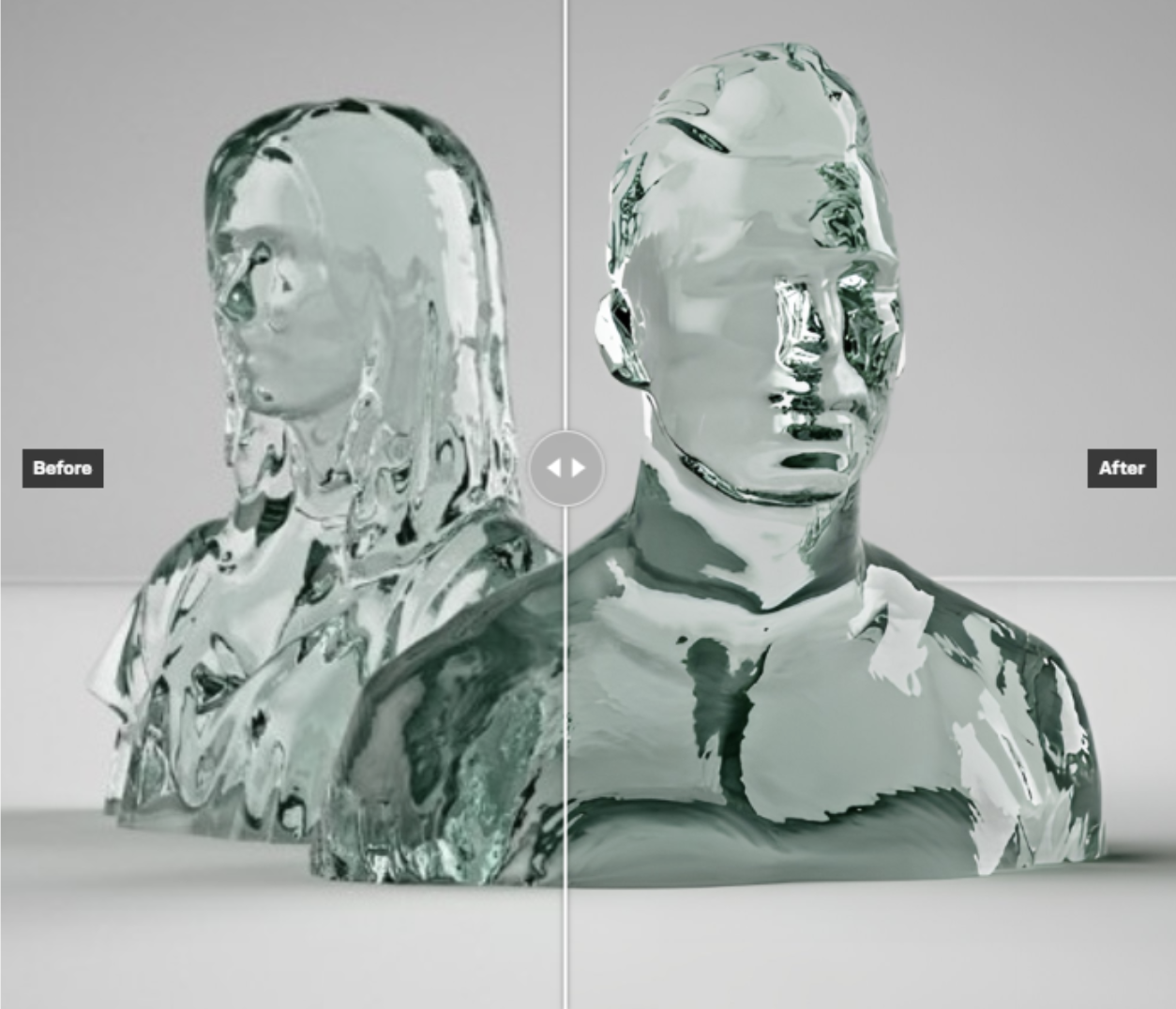

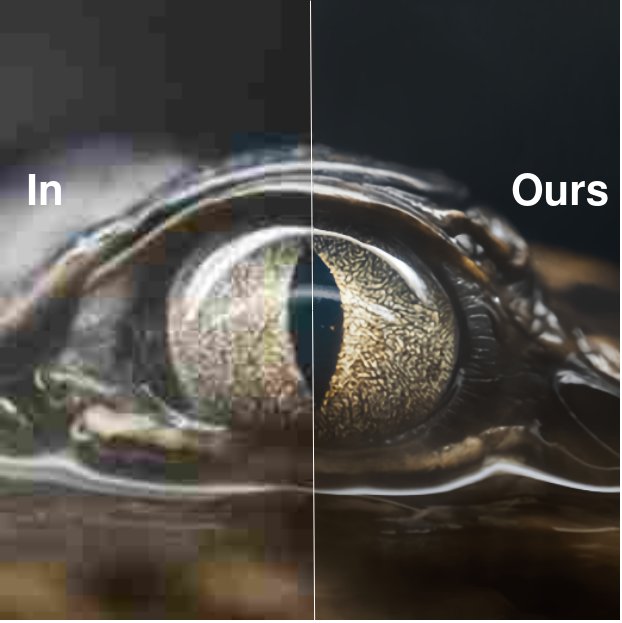

- Super-resolution - Upscale images by inferring high frequency details. Allows increasing resolution without losing quality.

- Noise reduction - Remove artifacts and imperfections during upscaling. Results in a cleaner image.

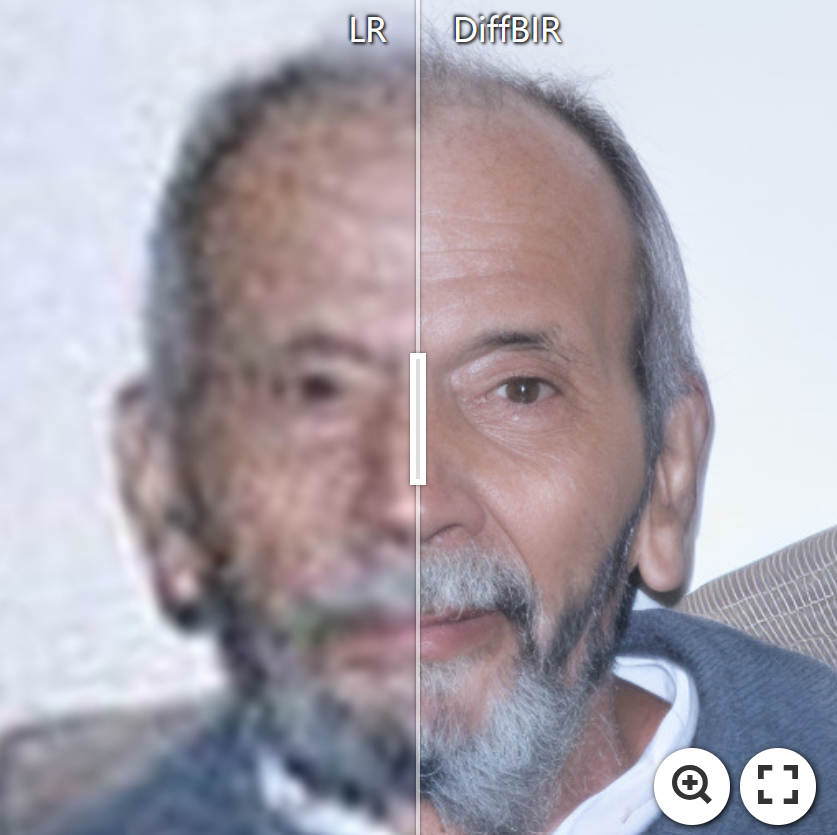

- Face restoration - Improve facial features and textures for more natural faces.

- Control over style - Adjust degree of realism vs hallucination during upscaling.

Our Pick: batouresearch/magic-image-refiner

For most upscaling needs, we recommend the batouresearch/magic-image-refiner model. This very flexible model can be used for upscaling, refining an image, or inpainting. The model can upscale images to either 1024x1024px or 2048x2048px, producing great results much faster than comparable models.

Increase the resemblance parameter to get a more precise recreation of your original input image. Or, if you’re looking for something new and interesting, crank up the creativity parameter to encourage hallucination and create a new image inspired by your original input.
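
As a rough illustration of that trade-off, the sketch below builds two input payloads for the model. The resemblance and creativity names come from the description above. The image key, the 0 to 1 range, and the helper name refiner_input are assumptions, so check the model's API schema before relying on them.

```python
def refiner_input(image_url: str, resemblance: float, creativity: float) -> dict:
    """Build an input payload for a refine/upscale run.

    Assumption: both parameters are floats in [0, 1]. Verify the real
    range and defaults on the model page.
    """
    for name, value in (("resemblance", resemblance), ("creativity", creativity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return {
        "image": image_url,          # assumed input key
        "resemblance": resemblance,  # higher: stay closer to the original
        "creativity": creativity,    # higher: allow more invented detail
    }


# Faithful upscale: stay close to the source image.
faithful = refiner_input("https://example.com/photo.png", resemblance=0.9, creativity=0.1)

# Creative reinterpretation: let the model hallucinate new detail.
creative = refiner_input("https://example.com/photo.png", resemblance=0.3, creativity=0.8)
```

The specific values 0.9/0.1 and 0.3/0.8 are illustrative starting points, not recommended settings.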

You may also be interested in the sister model, batouresearch/high-resolution-controlnet-tile, which upscales to a larger resolution of 2560x2560. However, it runs slower and produces less realistic-looking results.

Budget Pick: nightmareai/real-esrgan

If you need to upscale a large volume of images, we suggest using nightmareai/real-esrgan.

It runs fast on cheaper GPUs like the Nvidia T4 (~1.8s for a 2x upscale) and produces reasonably good results without many scaling artifacts. Real-ESRGAN also has an optional face_enhance setting, which can help improve the quality and realism of AI-generated faces.

You can run Real-ESRGAN on an Nvidia A100 for faster upscaling speed (~0.7s for a 2x upscale) and 2.5x the amount of GPU RAM, allowing for significantly larger images. Just keep in mind that A100 GPUs cost more than T4 GPUs.
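
To put those timings in batch terms, here is a back-of-the-envelope calculation. It uses only the per-image times quoted above and assumes images are processed one after another on a single GPU, ignoring cold starts, queueing, and upload time. GPU prices are deliberately left out because they are not given here; the break-even ratio shows how much more an A100 can cost per second before the T4 becomes the cheaper option per image.

```python
def batch_hours(num_images: int, seconds_per_image: float) -> float:
    """Sequential wall-clock hours for a batch on a single GPU."""
    return num_images * seconds_per_image / 3600


T4_SECONDS = 1.8    # ~1.8 s per 2x upscale, as quoted above
A100_SECONDS = 0.7  # ~0.7 s per 2x upscale, as quoted above

images = 10_000  # hypothetical batch size
t4_hours = batch_hours(images, T4_SECONDS)
a100_hours = batch_hours(images, A100_SECONDS)

# Cost per image = seconds * price per second, so the A100 is cheaper per
# image only if its per-second price is below this multiple of the T4 price.
break_even_price_ratio = T4_SECONDS / A100_SECONDS

print(f"T4:   {t4_hours:.2f} h")
print(f"A100: {a100_hours:.2f} h")
print(f"A100 wins on cost if it costs under {break_even_price_ratio:.2f}x the T4 rate")
```

For 10,000 images this works out to about 5 hours on a T4 versus just under 2 hours on an A100.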

Featured models

Upscale images 2x or 4x

Updated 3 days, 14 hours ago

205.9K runs

philz1337x/crystal-upscaler

High-precision image upscaler optimized for portraits, faces and products. One of the upscale modes powered by Clarity AI. X: https://x.com/philz1337x

Updated 2 months, 3 weeks ago

476.1K runs

recraft-ai/recraft-crisp-upscale

Designed to make images sharper and cleaner, Crisp Upscale increases overall quality, making visuals suitable for web use or print-ready materials.

Updated 3 months, 1 week ago

1.9M runs

nightmareai/real-esrgan

Real-ESRGAN with optional face correction and adjustable upscale

Updated 3 months, 2 weeks ago

85.2M runs

philz1337x/clarity-upscaler

High resolution image Upscaler and Enhancer. Use at ClarityAI.co. A free Magnific alternative. Twitter/X: @philz1337x

Updated 1 year, 7 months ago

26.8M runs

A better alternative to SDXL refiners, providing a lot of quality and detail. Can also be used for inpainting or upscaling.

Updated 2 years ago

950.5K runs

Frequently asked questions

Which models are the fastest?

If you need to upscale images quickly while maintaining decent quality, nightmareai/real-esrgan is one of the fastest and most reliable models. It’s well-suited for bulk processing and supports both general images and portraits.

For a balance between speed and creative flexibility, batouresearch/magic-image-refiner offers fast results with adjustable refinement parameters for more control.

Which models offer the best balance of cost and quality?

batouresearch/magic-image-refiner provides an excellent middle ground—it’s capable of 2K upscales with impressive detail and allows you to balance between accurate and creative outputs.

If you need consistent, realistic results without much tuning, recraft-ai/recraft-crisp-upscale delivers sharp, print-ready images suitable for professional use.

What works best for portraits and facial details?

For restoring and upscaling faces, tencentarc/gfpgan and sczhou/codeformer are widely used choices. They repair facial features, reduce artifacts, and bring old or AI-generated faces to life.

If you want an all-in-one solution that upscales and enhances at the same time, philz1337x/crystal-upscaler specializes in facial sharpness and skin texture.

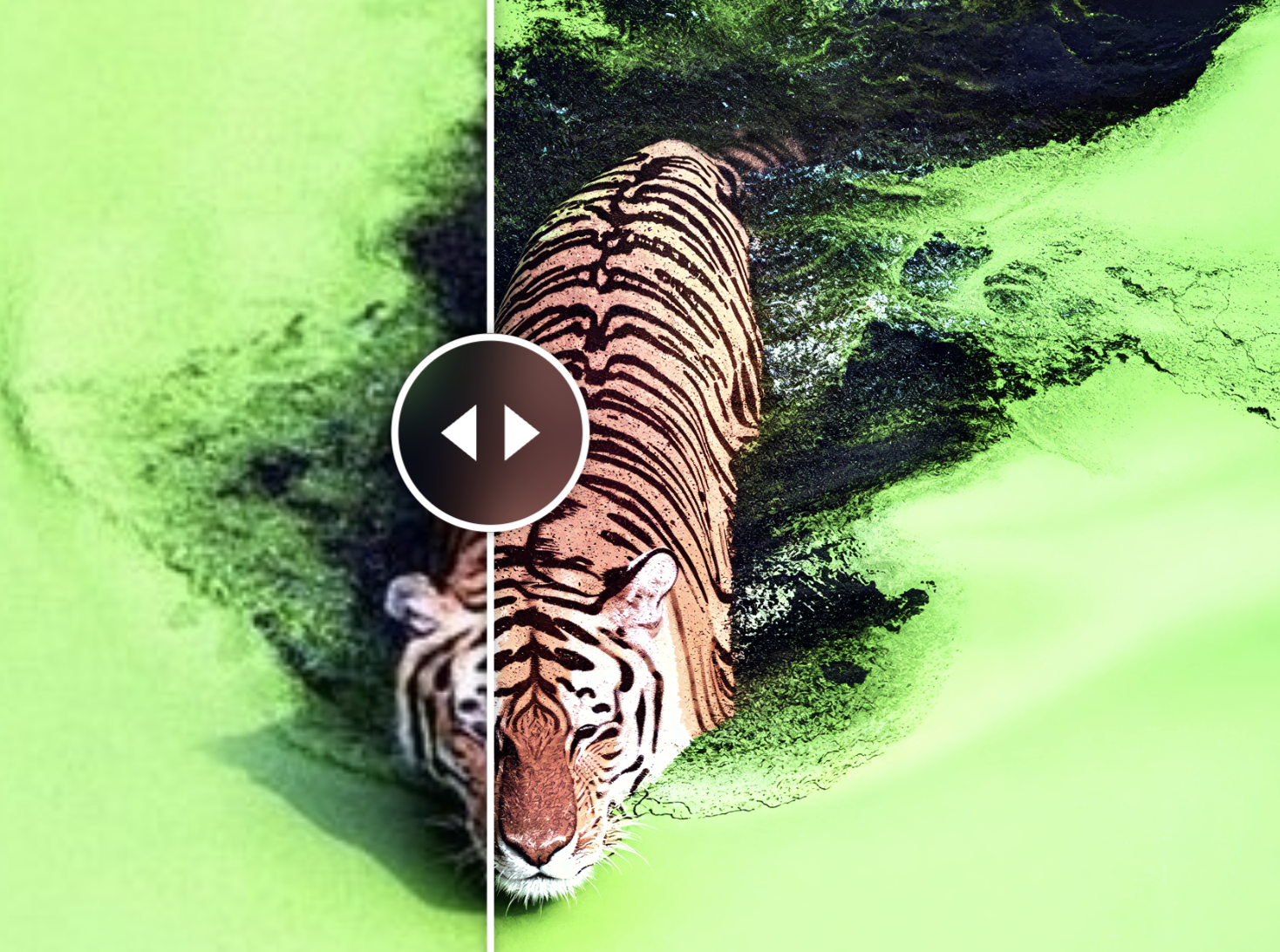

What works best for creative or stylized upscaling?

If you want to push beyond pure realism, recraft-ai/recraft-creative-upscale and batouresearch/high-resolution-controlnet-tile can hallucinate new details while preserving structure.

These models work great for AI art refinement or stylized photography, especially when you want to improve texture, lighting, or composition during upscaling.

What's the difference between key subtypes or approaches in this collection?

There are two main kinds of upscalers:

- Restorative upscalers (like tencentarc/gfpgan, sczhou/codeformer, nightmareai/real-esrgan): These focus on cleaning, sharpening, and accurately restoring the input image.

- Generative upscalers (like batouresearch/magic-image-refiner, recraft-ai/recraft-creative-upscale, batouresearch/high-resolution-controlnet-tile): These use diffusion or transformer methods to enhance or reimagine the original, sometimes adding fine details not present before.

Restorative models are better for realism; generative ones are better for stylized or creative work.

What kinds of outputs can I expect from these models?

Upscaling models typically produce high-resolution images at 2×, 4×, or higher scale, depending on the input and model.

Some, like batouresearch/magic-image-refiner, also support refinement or inpainting, allowing you to fill in missing regions or adjust small imperfections while upscaling.
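
To make the scale factors concrete, the sketch below computes the output dimensions for a given multiplier and the size of the raw, uncompressed RGB pixel data. The helper names are made up for illustration, and the byte counts cover only the pixel buffer itself; actual GPU memory use during inference is higher and depends on the model.

```python
def upscaled_size(width: int, height: int, scale: int) -> tuple[int, int]:
    """Output dimensions after an integer upscale."""
    return width * scale, height * scale


def raw_rgb_megabytes(width: int, height: int, bytes_per_channel: int = 1) -> float:
    """Size of an uncompressed 3-channel image in MB (1 MB = 10**6 bytes)."""
    return width * height * 3 * bytes_per_channel / 1_000_000


# A 1080p input upscaled 4x becomes an 8K image.
out_w, out_h = upscaled_size(1920, 1080, 4)
megapixels = out_w * out_h / 1_000_000

as_uint8 = raw_rgb_megabytes(out_w, out_h)        # 8-bit channels, as saved to disk
as_float32 = raw_rgb_megabytes(out_w, out_h, 4)   # 32-bit floats, common inside models

print(out_w, out_h, round(megapixels, 1), round(as_uint8, 1), round(as_float32, 1))
```

A 4x upscale multiplies the pixel count by 16, which is why large inputs can exhaust GPU memory quickly.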

How can I self-host or push a model to Replicate?

Open-source models such as nightmareai/real-esrgan or tencentarc/gfpgan can be run locally using Cog or Docker.

To publish your own upscaler, package it with Cog: declare the environment in a cog.yaml file, define the image input and upscale factor in a predict.py predictor, and run cog push to upload it to your account. Replicate then runs it on managed GPUs.

Can I use these models for commercial work?

Yes—many upscaling models are available for commercial use. Always review the License section on each model’s page, as some (especially academic models) may have research-only clauses or require attribution.

How do I use or run these models?

Go to the model page on Replicate, upload your image, and select your preferred upscale multiplier or options (such as face_enhance or creativity).

The model will generate a higher-resolution image that either matches or improves upon the original’s detail and texture.
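
The same run can also be started from code. The following is a minimal sketch using the replicate Python client (pip install replicate), which reads the API token from the REPLICATE_API_TOKEN environment variable. The face_enhance option is mentioned above; the image and scale keys and both helper names are assumptions, so confirm the input schema on the model's API tab.

```python
def real_esrgan_input(image, scale: int = 2, face_enhance: bool = False) -> dict:
    """Build the input payload. The 'image' and 'scale' keys are assumed."""
    return {"image": image, "scale": scale, "face_enhance": face_enhance}


def upscale(model_ref: str, image_path: str, **options):
    """Upload a local image and run the given model on Replicate.

    model_ref is "owner/name" or "owner/name:version". Some models may
    require the version suffix, which you can copy from the model page.
    """
    import replicate  # imported lazily so the helper above works without it

    with open(image_path, "rb") as image_file:
        return replicate.run(model_ref, input=real_esrgan_input(image_file, **options))


# Example call (needs network access and a valid API token):
# output = upscale("nightmareai/real-esrgan", "photo.jpg", scale=4, face_enhance=True)
```

The shape of the returned value (a URL or a file-like object) depends on the client version, so inspect it before writing it to disk.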

What should I know before running a job in this collection?

- Start with high-quality inputs when possible—upscalers enhance details but can’t always fix heavily compressed or blurry images.

- For portraits, use face-specific models like tencentarc/gfpgan or sczhou/codeformer for the best natural look.

- If your image has artifacts or AI-related distortions, use a model with a denoise or refinement toggle (e.g., batouresearch/magic-image-refiner).

- Generative models may “hallucinate” new details—good for art, but less ideal for documentary-style work.

Any other collection-specific tips or considerations?

- For pure speed and batch jobs, use nightmareai/real-esrgan.

- For realism and fine detail, use batouresearch/magic-image-refiner or philz1337x/crystal-upscaler.

- For creative or painterly results, try recraft-ai/recraft-creative-upscale or batouresearch/high-resolution-controlnet-tile.

- When upscaling old photos, run tencentarc/gfpgan or sczhou/codeformer first, then feed the output into a super-resolution model like batouresearch/magic-image-refiner for best results.

- Keep in mind that upscaling large images (4K and beyond) requires more GPU memory, so plan accordingly.
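
The restore-then-upscale tip for old photos can be sketched as a small chain, where each stage's output becomes the next stage's image input. The run_chain helper and the stage tuples are hypothetical, and the "image" input key and resemblance value are placeholders; each model defines its own input names. The runner argument is whatever function actually executes a model, for example a thin wrapper around replicate.run. A fake runner is used here so the flow can be followed without network access.

```python
from typing import Any, Callable

# (model reference, name of that model's image input, extra options)
Stage = tuple[str, str, dict]
Runner = Callable[[str, dict], Any]


def run_chain(image: Any, stages: list[Stage], runner: Runner) -> Any:
    """Run the stages in order, passing each output to the next stage."""
    current = image
    for model, image_key, options in stages:
        current = runner(model, {image_key: current, **options})
    return current


# Stand-in runner that records calls instead of contacting any service.
calls = []

def fake_runner(model: str, inputs: dict) -> str:
    calls.append((model, dict(inputs)))
    return f"output-of-{model}"


stages = [
    ("tencentarc/gfpgan", "image", {}),                                    # face restoration first
    ("batouresearch/magic-image-refiner", "image", {"resemblance": 0.9}),  # then super-resolution
]
result = run_chain("old-photo.png", stages, fake_runner)
```

Keeping the runner injectable makes it easy to swap in a different second stage or to test the order of operations offline.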