Control image generation

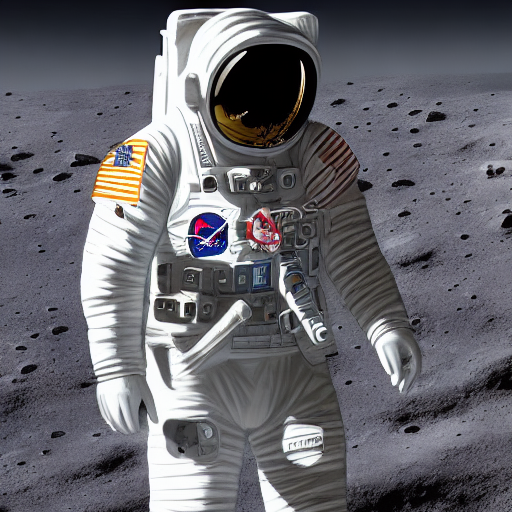

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

What you can do

Use edge detection to keep specific shapes and lines from an image. Great for preserving architecture or recreating specific compositions.

Control depth and space with depth maps. Perfect for retexturing objects while keeping their 3D structure intact.

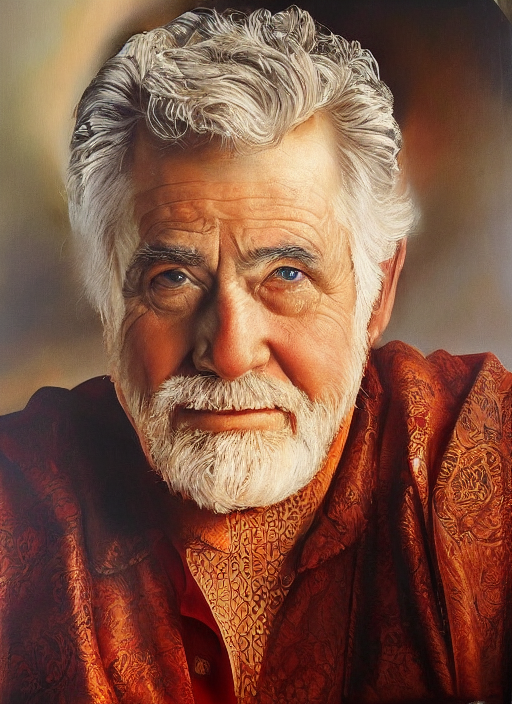

Turn rough sketches into detailed images. Start with a simple drawing and let the model fill in the details.

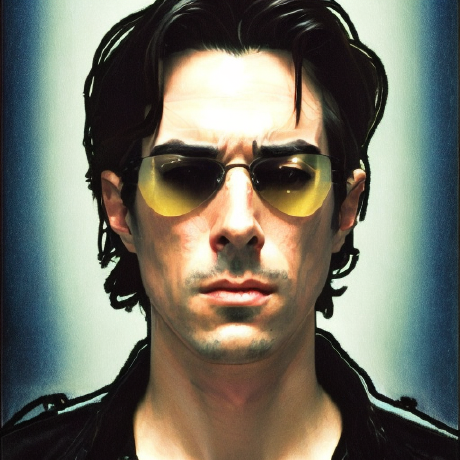

Create artistic QR codes and patterns that actually work when scanned.

Models we recommend

For professional work

The FLUX models deliver reliable results with clean outputs:

- FLUX.1 Depth Pro for depth-based control

- FLUX.1 Canny Pro for edge detection

For speed

Latent Consistency Model runs in about 0.6 seconds per image. It includes:

- Edge detection control

- Image-to-image generation

- Support for large batches (up to 50 images)

- Quality results in just 4-8 steps
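As a rough illustration of what those figures mean for a larger job, the sketch below splits a target image count into runs of at most 50 and estimates total generation time at about 0.6 seconds per image. The helper names (`plan_batches`, `estimate_seconds`) are hypothetical, and the estimate ignores cold starts, queueing, and upload time, so treat it as a lower bound rather than a measurement.

```python
MAX_BATCH = 50           # per-run batch limit quoted above
SECONDS_PER_IMAGE = 0.6  # approximate per-image time quoted above


def plan_batches(total_images, max_batch=MAX_BATCH):
    """Split total_images into batch sizes no larger than max_batch."""
    if total_images < 0:
        raise ValueError("total_images must be non-negative")
    full, rest = divmod(total_images, max_batch)
    return [max_batch] * full + ([rest] if rest else [])


def estimate_seconds(total_images, seconds_per_image=SECONDS_PER_IMAGE):
    """Naive estimate: image count times per-image time, nothing else."""
    return total_images * seconds_per_image


batches = plan_batches(120)      # three runs: 50, 50, and 20 images
seconds = estimate_seconds(120)  # roughly 72 seconds of generation time
```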

For creative projects

Illusion generates images that embed spirals and other patterns, as well as artistic QR codes that still scan.

ControlNet Scribble turns simple drawings into detailed images - great for sketching out ideas.

Try it out

Play with different control methods in the playground. Test models side by side and experiment until you find what works.

Want to learn how it works? Read our guide →

Questions? Join us on Discord.

Featured models

black-forest-labs/flux-canny-pro

Professional edge-guided image generation. Control structure and composition using Canny edge detection.

Updated 3 months, 2 weeks ago

417.2K runs

black-forest-labs/flux-depth-pro

Professional depth-aware image generation. Edit images while preserving spatial relationships.

Updated 3 months, 2 weeks ago

297.2K runs

fofr/latent-consistency-model

Super-fast, 0.6s per image. LCM with img2img, large batching, and Canny ControlNet.

Updated 2 years, 1 month ago

1.5M runs

andreasjansson/illusion

Monster Labs' control_v1p_sd15_qrcode_monster ControlNet on top of SD 1.5.

Updated 2 years, 5 months ago

409.3K runs

zylim0702/qr_code_controlnet

ControlNet QR Code Generator: create QR codes for various needs with ControlNet. Just key in the URL.

Updated 2 years, 7 months ago

795.8K runs

jagilley/controlnet-scribble

Generate detailed images from scribbled drawings.

Updated 3 years ago

38.3M runs

Frequently asked questions

Which models are the fastest?

If you want quick, guided image generation, fofr/latent-consistency-model is the fastest option — it can generate an image in under a second while supporting Canny edge detection and batch processing.

For simpler control tasks like sketch-to-image or QR pattern generation, jagilley/controlnet-scribble and andreasjansson/illusion also run quickly and work well for experimentation.

Which models offer the best balance of cost and quality?

black-forest-labs/flux-depth-pro and black-forest-labs/flux-canny-pro deliver professional-grade results with strong prompt adherence and high image quality.

They’re ideal when you need precise control (like retexturing or preserving shapes) without the heavy compute requirements of large multi-control setups.

What works best for keeping composition or structure consistent?

For structure-preserving edits, use black-forest-labs/flux-canny-pro. It locks in the edges and layout from your reference image so your generated version stays faithful to the original.

If you’re working with 3D shapes, architecture, or anything requiring spatial depth, black-forest-labs/flux-depth-pro preserves the scene’s geometry while allowing stylistic retexturing.

What works best for creative or experimental projects?

For artistic outputs, andreasjansson/illusion creates intricate patterns, spirals, and QR code art that still scans correctly.

jagilley/controlnet-scribble is another favorite — it turns simple line drawings into detailed, high-quality scenes, great for quick sketches or brainstorming visuals.

What’s the difference between edge, depth, and scribble control?

- Edge control (like black-forest-labs/flux-canny-pro or lllyasviel/controlnet-canny) focuses on the outlines and contours of your input, preserving structural details.

- Depth control (like black-forest-labs/flux-depth-pro or lllyasviel/controlnet-depth2img) maintains 3D spatial relationships, perfect for realistic lighting and object placement.

- Scribble or sketch control (like jagilley/controlnet-scribble) starts from rough shapes or doodles and fills in fine detail based on your prompt.
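To make "edge control" concrete, here is a minimal sketch of how an edge map can be derived from a grayscale image. It uses a plain Sobel gradient with a fixed threshold, which is a simplified stand-in for Canny (it has no smoothing, non-maximum suppression, or hysteresis). The function name `sobel_edge_map` and the toy image are illustrative assumptions, not part of any model in this collection.

```python
def sobel_edge_map(gray, threshold=128):
    """Return a binary edge map (0 or 255) for a 2D list of grayscale values.

    Simplified stand-in for Canny: Sobel gradient magnitude plus a threshold.
    Border pixels are left at 0 because the 3x3 kernel does not fit there.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 255
    return edges


# Toy 6x6 image: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
edge_map = sobel_edge_map(image)
# Interior rows are marked only along the dark/bright boundary.
```

An edge model receives a map like this alongside the prompt. A depth model receives a per-pixel distance map instead, and a scribble model receives a hand-drawn line image.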

What kinds of outputs can I expect from these models?

All models output images that follow both your text prompt and structural guidance input.

Depending on the model, you might input a depth map, edge map, or sketch, and the output will match that composition while adopting the style you describe.

How can I self-host or push a model to Replicate?

Open-weight models like black-forest-labs/flux-depth-dev and black-forest-labs/flux-canny-dev can be self-hosted using Cog or Docker.

If you’re training or experimenting, you can fork an existing ControlNet model, define its environment in cog.yaml and its inputs in predict.py, and run cog push to make it available on Replicate under your account.

Can I use these models for commercial work?

Yes, most models in this collection are cleared for commercial use, especially the black-forest-labs FLUX Pro variants (flux-canny-pro and flux-depth-pro) and fofr/latent-consistency-model.

Always confirm license terms on the model’s page. Some community models or QR-based tools might have additional attribution requirements.

How do I use or run these models?

Upload a reference image or control map (like an edge or depth image), describe what you want in plain text, and run the model.

For example:

- “Turn this sketch into a watercolor painting” → jagilley/controlnet-scribble

- “Keep the building outline but change it to nighttime” → black-forest-labs/flux-canny-pro

You’ll get a generated image that follows both your guide and your description.
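The same flow can be scripted. The sketch below assumes the official `replicate` Python client (`pip install replicate`) with a `REPLICATE_API_TOKEN` set in the environment. The input field names (`control_image` here, `image` on some ControlNet models) are assumptions that vary per model, so check the model's API page before relying on them. `build_input` and `run_model` are hypothetical helper names.

```python
def build_input(prompt, control_image, image_field="control_image", **extra):
    """Assemble the input payload for a control-guided model.

    control_image may be a URL string or an open binary file handle.
    image_field is the name of the model's control-image input (assumed;
    it differs between models).
    """
    payload = {"prompt": prompt, image_field: control_image}
    payload.update(extra)  # any other model-specific inputs
    return payload


def run_model(model, payload):
    """Run a model on Replicate and return whatever the client returns."""
    import replicate  # imported lazily so the helpers above work without it

    return replicate.run(model, input=payload)


payload = build_input(
    "Keep the building outline but change it to nighttime",
    "https://example.com/building.png",  # placeholder URL
)

# Example call (needs network access and an API token):
# output = run_model("black-forest-labs/flux-canny-pro", payload)
```

Keeping payload construction separate from the network call makes it easy to inspect or log exactly what will be sent before spending a run.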

What should I know before running a job in this collection?

- Your input control image determines how closely the output follows structure—edges and depth maps give the most consistency.

- Lower creativity or guidance values produce more faithful edits, while higher values lead to freer, more artistic interpretations.

- Batch generation (available in fofr/latent-consistency-model) is efficient for large-scale workflows.

Any other collection-specific tips or considerations?

- Combine depth and edge control for highly detailed scene edits.

- If you’re doing architectural or interior work, adirik/interior-design can generate photorealistic rooms with ControlNet guidance.

- For stylized or surreal outputs, experiment with multiple control layers using pharmapsychotic/realvisxl-v3-multi-controlnet-lora.

- When generating artistic QR codes, test scanability with your phone to ensure the pattern still functions.