Edit any image

What you can do

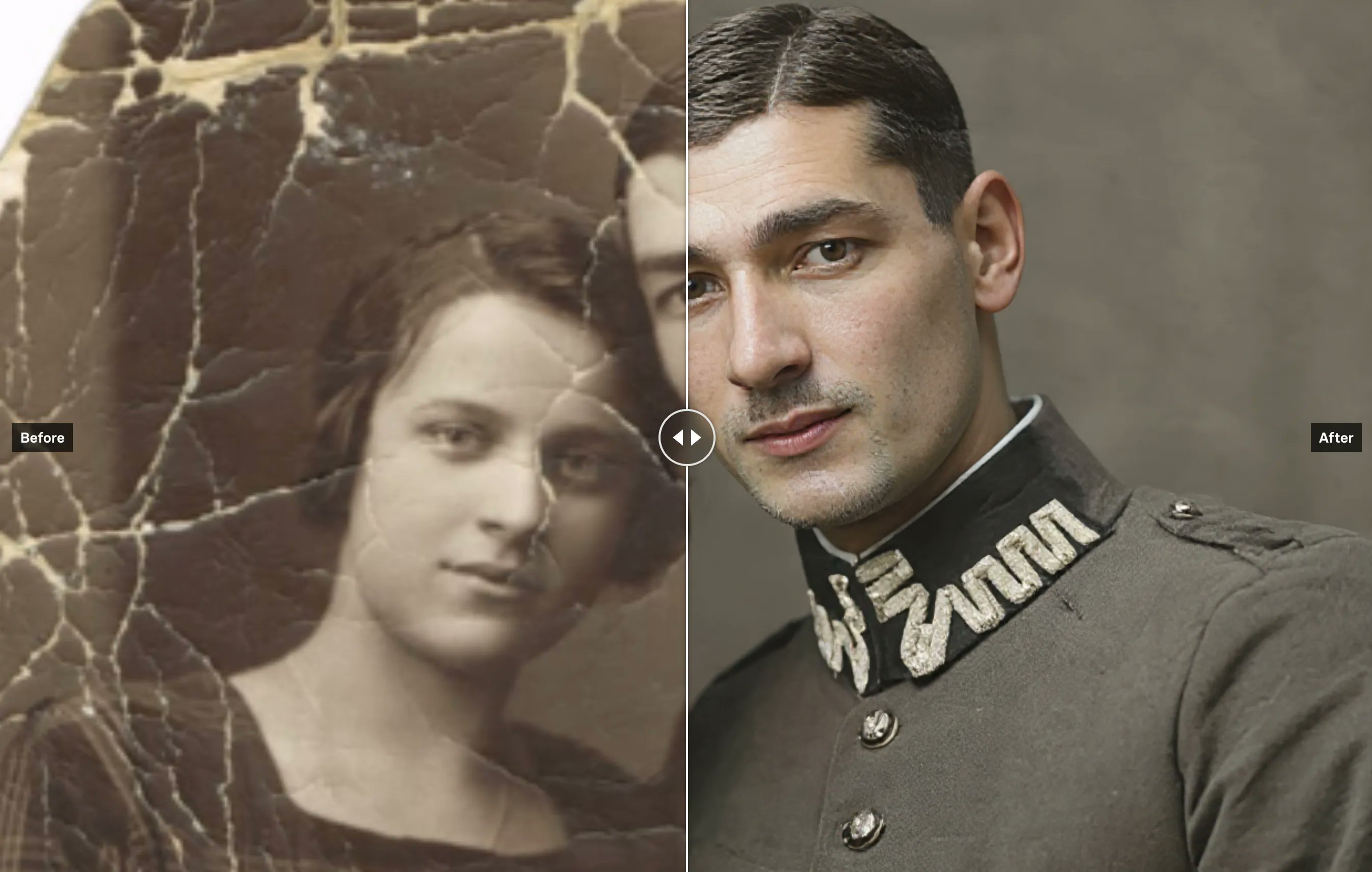

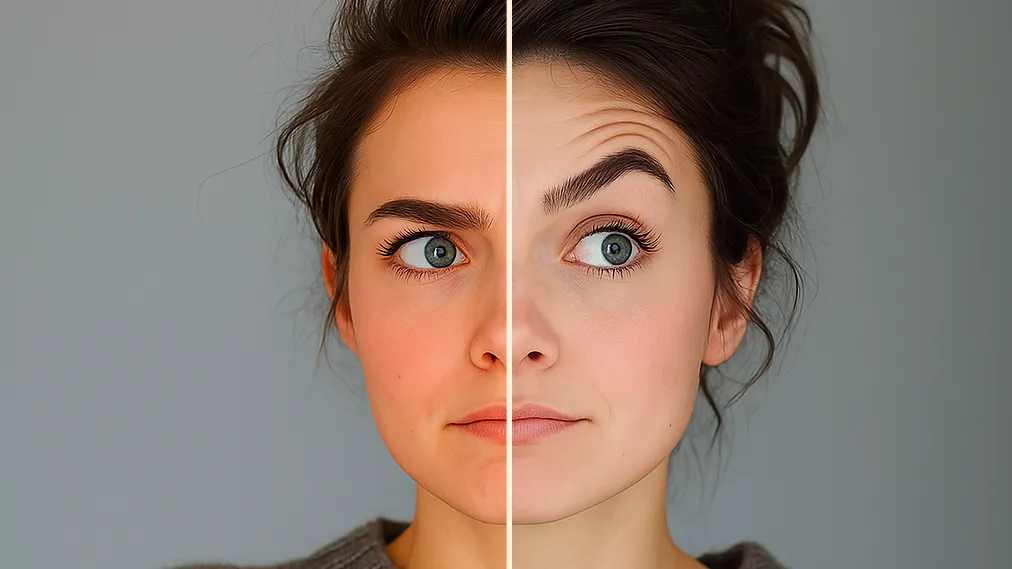

Remove or replace objects in your images.

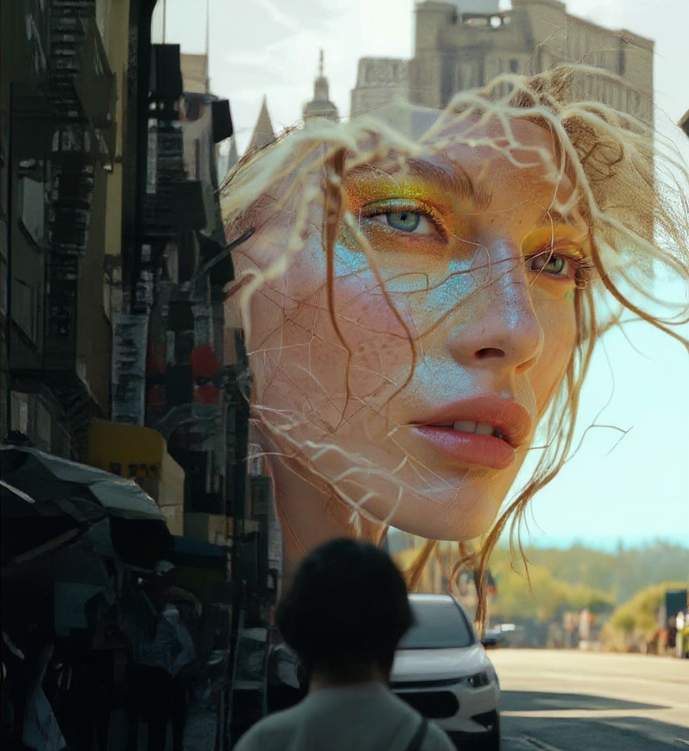

Change the style of your images (e.g. "make this Studio Ghibli style").

Add text that looks natural. Generate images with text that matches specific fonts and styles.

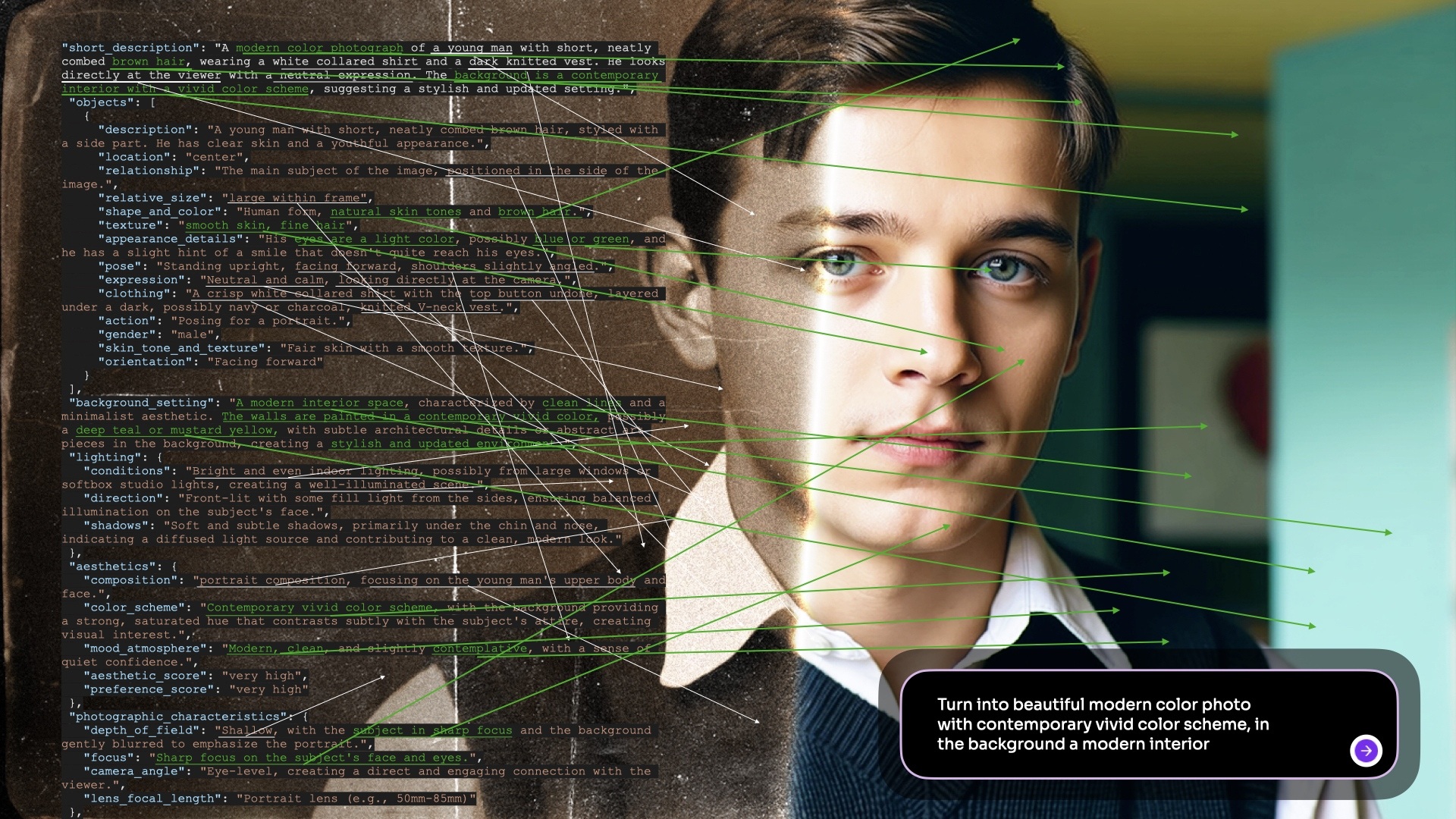

Change specific parts of an image while keeping its structure. Use depth maps or edge detection to control what changes.

Create variations of your images. Keep what works while exploring new possibilities.

Need an in-depth exploration of all the latest image editing models? Check out this blog post

Models we recommend

For precise edits

Nano-banana and Nano-banana-pro could truly be the end of Photoshop. They are great at following instructions and support multi-image input. Here are some sample prompts:

- "Add a plant next to the woman"

- "Change the text in the image from 'Hi' to 'Hello'"

- "Turn me into a Simpsons character"

FLUX.1 Kontext Pro and the more advanced FLUX.1 Kontext Max were the first of their kind, and many still find that they perform better than some of the latest models.

gpt-image-1.5 from OpenAI is also a solid choice for image editing and supports multiple image inputs (e.g. "combine all of these images into one scene"). This model requires an OpenAI API key.

For fast edits

prunaai/p-image-edit is a sub-1-second, $0.01 multi-image editing model built for production use cases.

qwen/qwen-image-edit-plus is fantastic for simple image edits and is among the cheaper state-of-the-art models.

For text and typography

Ideogram v3 excels at:

- Adding text that looks natural

- Matching specific fonts and styles

- High-quality general inpainting

Need faster results? Try Ideogram v3 Turbo. Or learn more about running Ideogram models with an API.

Nano-banana and Nano-banana-pro can also be your go-tos here.

Try it out

Test different editing approaches in the playground. Compare models side by side to find what works best for your project.

Want to learn more about inpainting? Check out our guide →

Questions? Join us on Discord.

Featured models

Google's state of the art image generation and editing model 🍌🍌

Updated 15 hours ago

14.5M runs

Google's latest image editing model in Gemini 2.5

Updated 2 weeks ago

85.1M runs

openai/gpt-image-1.5

OpenAI's latest image generation model with better instruction following and adherence to prompts

Updated 4 weeks, 1 day ago

3.2M runs

bytedance/seedream-4.5

Seedream 4.5: Upgraded Bytedance image model with stronger spatial understanding and world knowledge

Updated 2 months, 2 weeks ago

3.6M runs

prunaai/p-image-edit

A sub-1-second, $0.01 multi-image editing model built for production use cases. For image generation, check out p-image: https://replicate.com/prunaai/p-image

Updated 2 months, 2 weeks ago

14.8M runs

bytedance/seedream-4

Unified text-to-image generation and precise single-sentence editing at up to 4K resolution

Updated 2 months, 4 weeks ago

27.3M runs

A premium text-based image editing model that delivers maximum performance and improved typography generation for transforming images through natural language prompts

Updated 3 months, 1 week ago

9.8M runs

prunaai/flux-kontext-fast

Ultra-fast FLUX Kontext endpoint

Updated 3 months, 2 weeks ago

19.3M runs

The latest iteration of Qwen-Image, with improved multi-image editing, single-image consistency, and native support for ControlNet

Updated 4 months, 4 weeks ago

9.5M runs

Edit images using a prompt. This model extends Qwen-Image’s unique text rendering capabilities to image editing tasks, enabling precise text editing

Updated 6 months ago

1.6M runs

Open-weight version of FLUX.1 Kontext

Updated 7 months, 3 weeks ago

6.5M runs

Frequently asked questions

Which models are the fastest?

If you want quick edits to an image, google/nano-banana is a strong choice—it handles editing using simple instructions in text, and it supports multi-image input.

Another fast option is bytedance/seedream-4, which supports editing at higher resolution without huge compute time.

Which models offer the best balance of cost and quality?

For reliable edits with good prompt following and identity preservation, black-forest-labs/flux-kontext-pro offers a solid middle ground.

If you’re working on a more premium workflow (typography, precise edits, full stylization), black-forest-labs/flux-kontext-max scales up quality and control.

What works best for removing or replacing objects in an image?

If you need to remove or swap items (for example a sign, person, or piece of furniture), models like bria/eraser and bria/genfill are designed for clean object removal and visual continuity.

For broader edits—such as changing a background or adding new elements while retaining structure—black-forest-labs/flux-kontext-pro works well with directed prompts.

What works best for changing style or adding text in images?

If your edit centers on changing style (for instance “make this image look like a Studio Ghibli painting”) or adding text that looks like it belongs, ideogram-ai/ideogram-v3 is excellent for natural typography and stylized inpainting.

For depth-aware or edge-preserving edits (e.g., changing pose or structure while keeping main subjects intact), black-forest-labs/flux-depth-pro or black-forest-labs/flux-canny-pro provide more control.

What’s the difference between key subtypes or approaches in this collection?

There are two main approaches:

- Instruction-based image editors (like google/nano-banana, black-forest-labs/flux-kontext-pro) where you supply a text prompt and the model edits the image accordingly. These are very flexible for arbitrary changes.

- Structure-aware or control-based editors (like black-forest-labs/flux-depth-pro, black-forest-labs/flux-canny-pro, bria/eraser) where you use additional input (edge maps, depth maps, masking) to steer how the edit happens. These are great when you need fidelity to original composition.
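To make the idea of an edge map concrete, here is a minimal pure-Python sketch that marks pixels where brightness changes sharply. It is only an illustration under simple assumptions: the image is a small grid of grayscale values, and the `edge_map` function and its `threshold` parameter are hypothetical names, not part of any model's API. Hosted control-based models may compute their own control maps, so check each model's page before preparing one yourself.

```python
from typing import List

Gray = List[List[int]]  # rows of 0-255 grayscale values


def edge_map(image: Gray, threshold: int = 64) -> Gray:
    """Return a binary edge map (255 = edge, 0 = flat region).

    Uses central differences on interior pixels; border pixels are
    left at 0 because they lack a neighbour on one side.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = image[y][x + 1] - image[y][x - 1]  # horizontal change
            gy = image[y + 1][x] - image[y - 1][x]  # vertical change
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 255
    return edges


# A 5x5 image: dark on the left two columns, bright on the right three.
sample = [[0, 0, 255, 255, 255] for _ in range(5)]
edges = edge_map(sample)
```

The resulting map lights up only along the vertical boundary between the dark and bright regions, which is the kind of structural outline a control-based editor tries to preserve while the prompt changes everything else.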

What kinds of outputs can I expect from these models?

You’ll typically get an edited image file (same resolution or slightly changed depending on settings) where the requested modifications have been applied.

Some models also output additional metadata or allow multi-image input (for example combining two photos or layering edits) depending on the version.

How can I self-host or push a model to Replicate?

If the model is open source, you can clone the repo and run it locally using Cog or Docker.

To publish your own model, package it with Cog: prepare a cog.yaml file that describes the environment and a predictor that defines inputs (image, mask, prompt) and outputs, then push it to your Replicate account for use on managed hardware.

Can I use these models for commercial work?

Yes—many image-editing models support commercial use. Always check the License section on each model’s page to confirm.

Also ensure you have rights to the image you are editing, especially if you plan to publish or monetize the output.

How do I use or run these models?

Open a model’s page on Replicate, upload your image (and optional mask or reference image), and enter a prompt describing your edit (e.g., “change the car colour to red and add a banner”).

The model will return a modified image you can download. Some models support further options like preserving identity, controlling style strength, or combining multiple inputs.
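The same flow can also be scripted. The sketch below assumes the `replicate` Python package is installed and that a `REPLICATE_API_TOKEN` environment variable is set. The input field name `input_image` and the example URL are assumptions for illustration; input names differ between models, so check the schema on each model's page.

```python
from typing import Any, Dict, Optional


def build_edit_input(
    prompt: str,
    image_url: str,
    extra: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Assemble the input payload for an instruction-based edit.

    "input_image" is an assumed field name; some models use a
    different key or accept a list of images.
    """
    payload: Dict[str, Any] = {"prompt": prompt, "input_image": image_url}
    if extra:
        payload.update(extra)  # model-specific options, if any
    return payload


def run_edit(model: str, payload: Dict[str, Any]) -> Any:
    """Send the edit to Replicate and return whatever the model outputs."""
    import replicate  # imported lazily so the helper above works without it

    return replicate.run(model, input=payload)


payload = build_edit_input(
    prompt="change the car colour to red and add a banner",
    image_url="https://example.com/car.jpg",  # hypothetical image URL
)
```

Calling `run_edit("black-forest-labs/flux-kontext-pro", payload)` would then submit the job. The shape of the return value (a URL, a file-like object, or a list) depends on the model and client version.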

What should I know before running a job in this collection?

- Use a good quality input image (well lit, clear subject) for best results—editing messy inputs is harder.

- If you want to keep a person’s identity, mention that in the prompt (e.g., “keep the same face, just change the outfit”).

- For structural edits (pose, background, mask), models that support control maps (depth/edges) will yield better fidelity.

- Complex edits are often better performed in stages (first replace object, then refine style) rather than one large prompt.
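The staged approach in the last tip can be sketched as a small loop in which each edit takes the previous output as its input. This is a minimal illustration: `edit_in_stages` and the `edit_fn` callback are hypothetical names, and `edit_fn` stands in for whatever call you use to run a model. The stand-in used here only records the prompts so the chaining is visible.

```python
from typing import Callable, Iterable, List

# (image reference, prompt) -> edited image reference
EditFn = Callable[[str, str], str]


def edit_in_stages(image: str, prompts: Iterable[str], edit_fn: EditFn) -> List[str]:
    """Apply prompts one at a time, feeding each result into the next edit.

    Returns every intermediate result, starting with the original image,
    so an unsatisfactory stage can be retried without redoing earlier ones.
    """
    results = [image]
    for prompt in prompts:
        results.append(edit_fn(results[-1], prompt))
    return results


def fake_edit(image: str, prompt: str) -> str:
    """Stand-in for a real model call; just records what was applied."""
    return f"{image} -> [{prompt}]"


stages = edit_in_stages(
    "photo.png",
    ["replace the sofa with an armchair", "make it a watercolor painting"],
    fake_edit,
)
```

Keeping the intermediate results makes it easy to compare stages or to branch from an earlier one with a different prompt.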

Any other collection-specific tips or considerations?

- For combining images (e.g., merging two photos into one scene), pick models that accept multi-image input, like google/nano-banana or qwen/qwen-image-edit-plus.

- For text overlays (signs, posters), prefer models optimized for typography (like ideogram-ai/ideogram-v3).

- When doing style transfer (photo → painting, sketch, anime), be explicit in your prompt about the style and what to keep.

- Always check that your output respects original subjects (especially people), and if you’re using commercial content verify rights accordingly.