Restore images

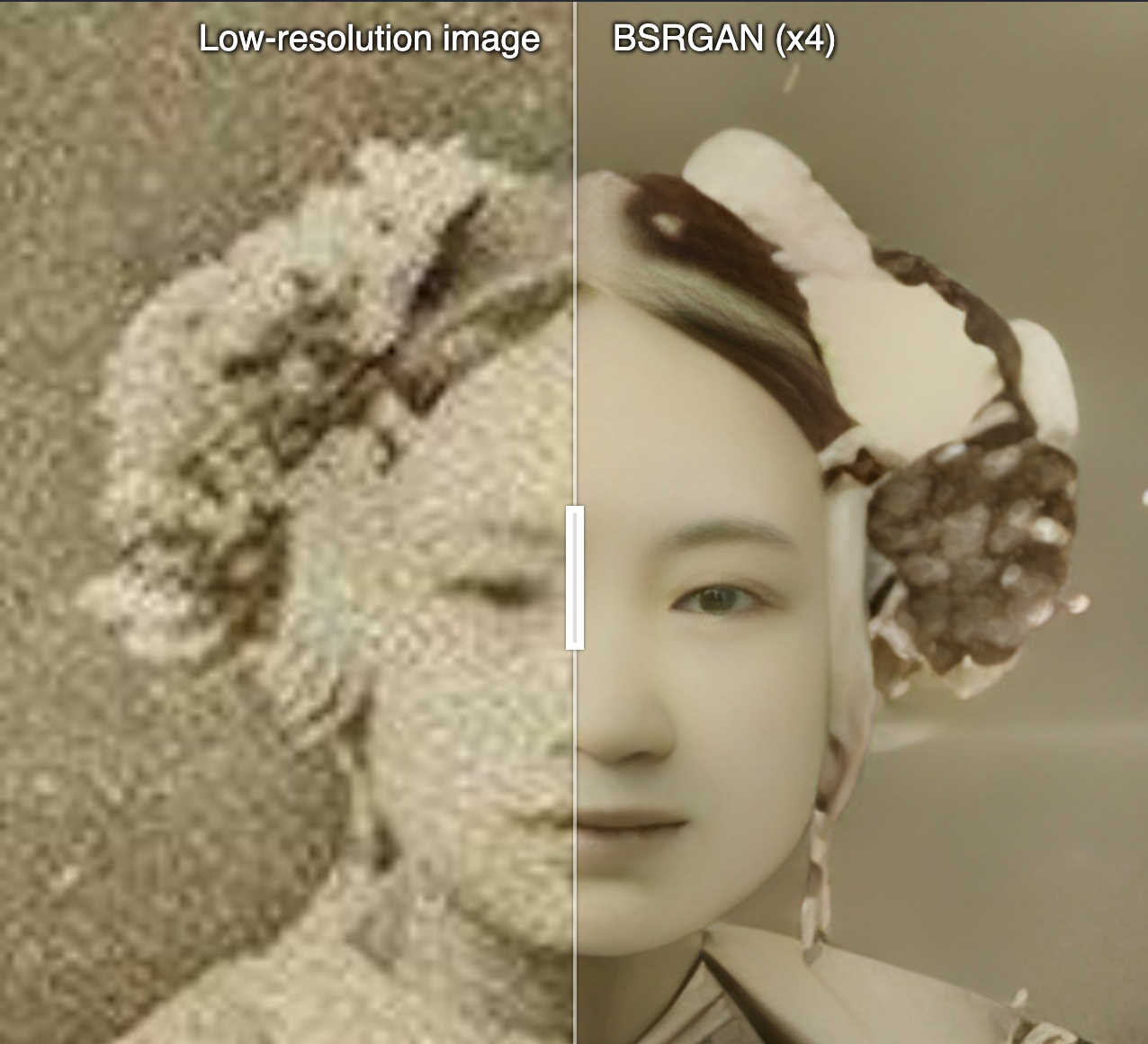

These models restore and improve images by fixing defects like blur, noise, and low resolution. Key capabilities:

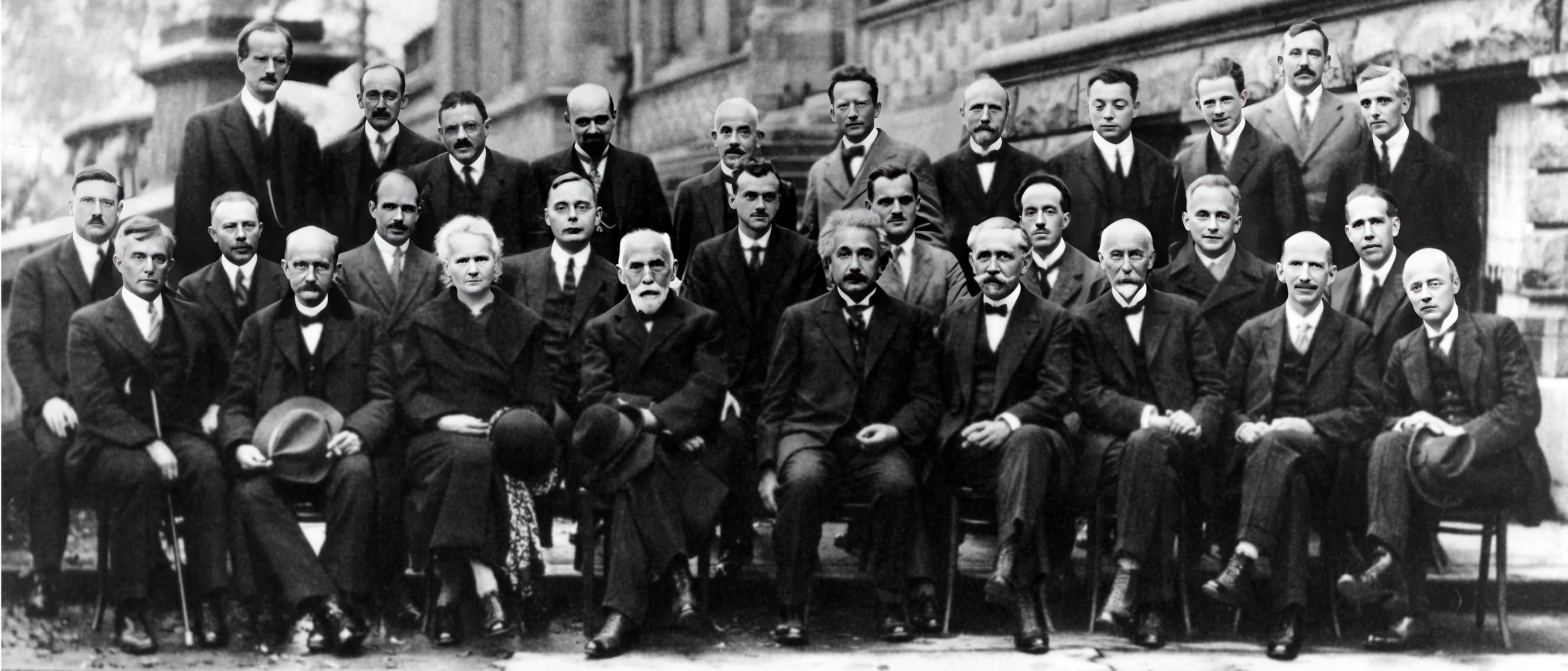

- Deblurring - Sharpen blurry images by reversing blur effects. Useful for old photos.

- Denoising - Remove grain and artifacts by learning noise patterns.

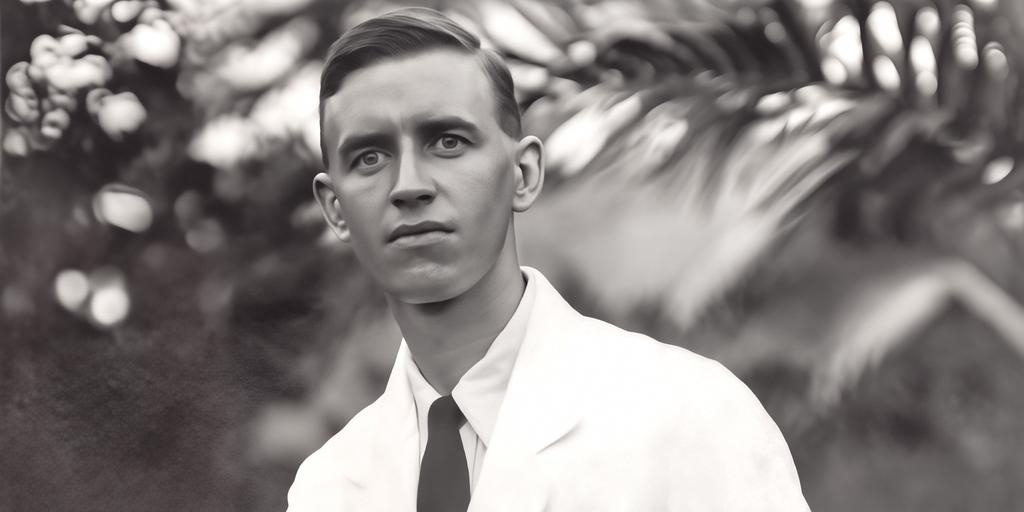

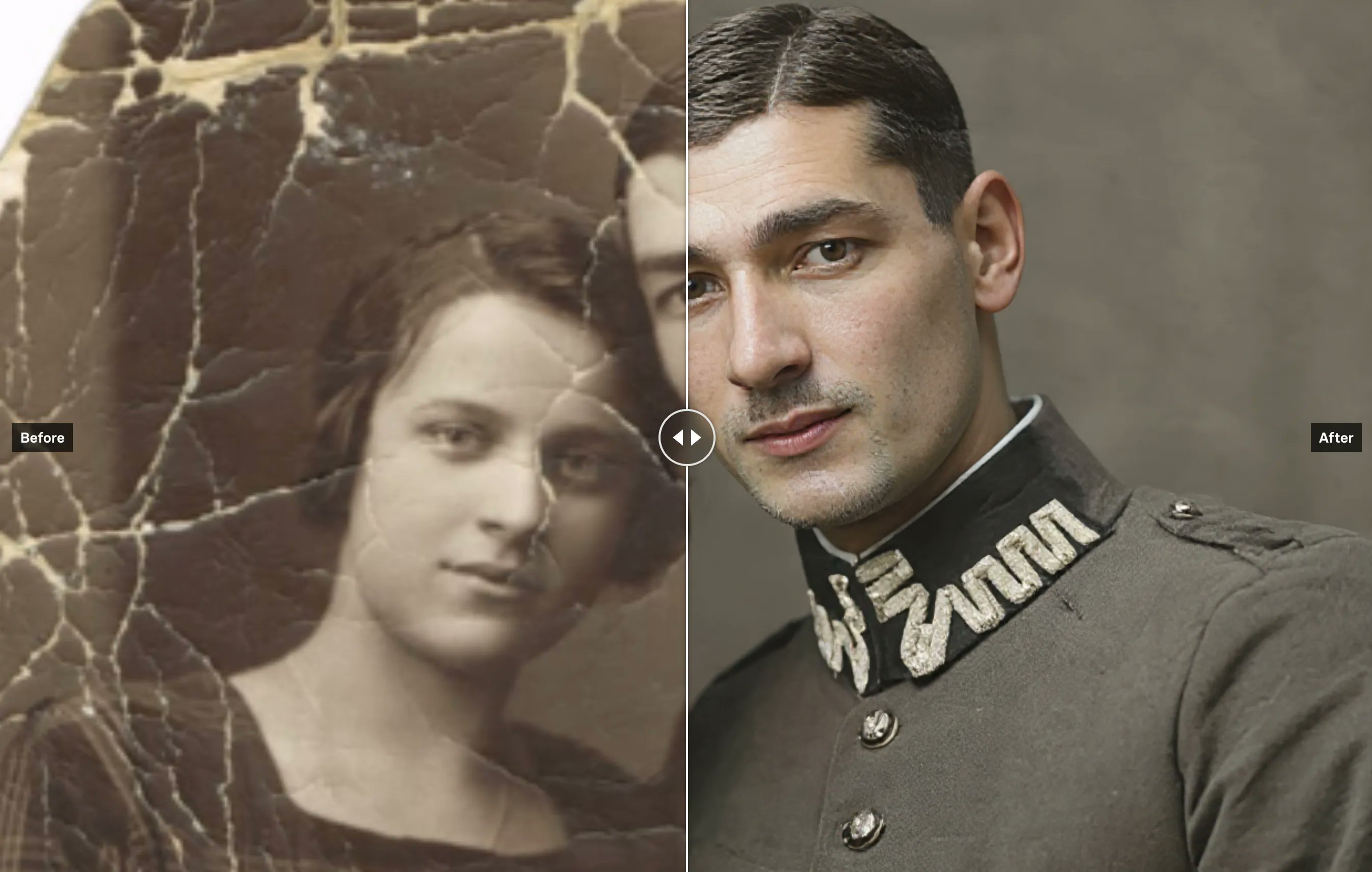

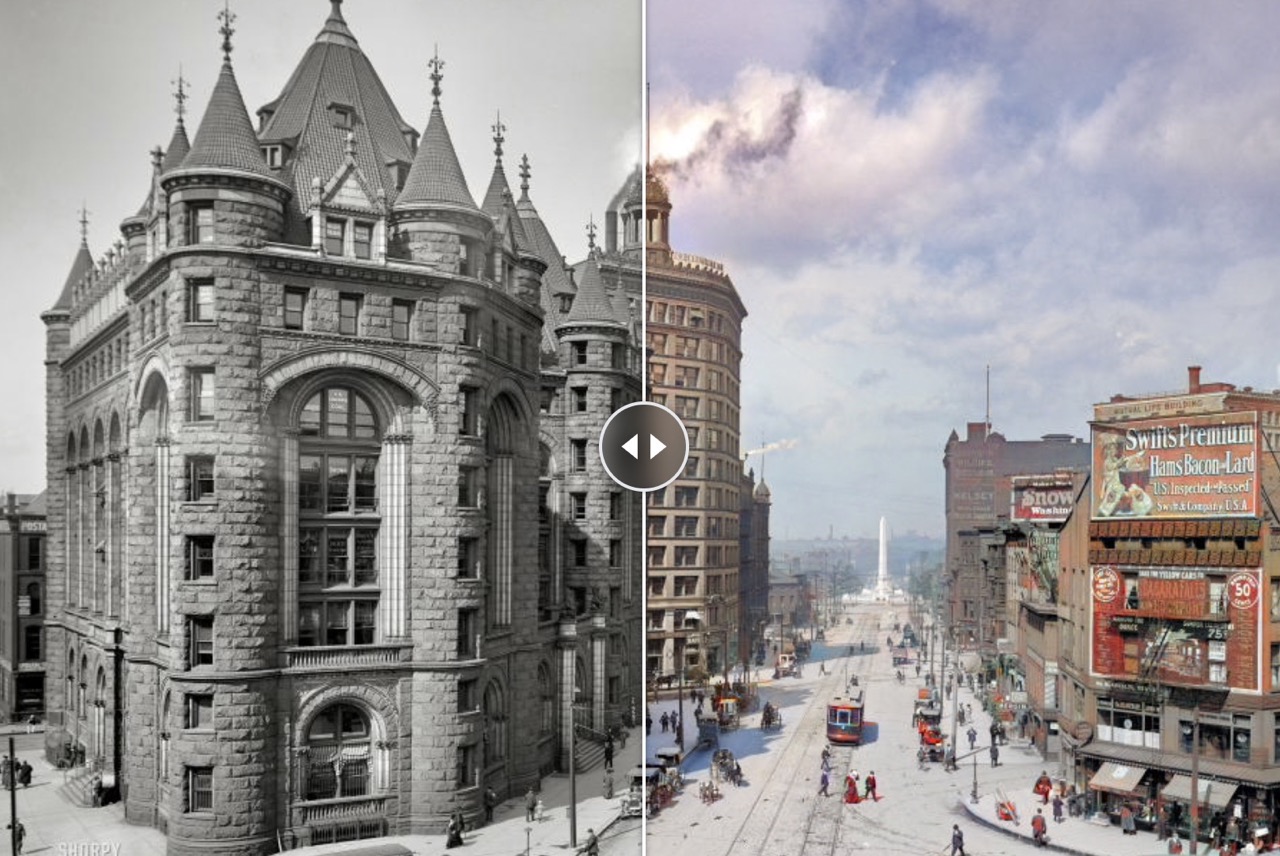

- Colorization - Add realistic color to black and white photos.

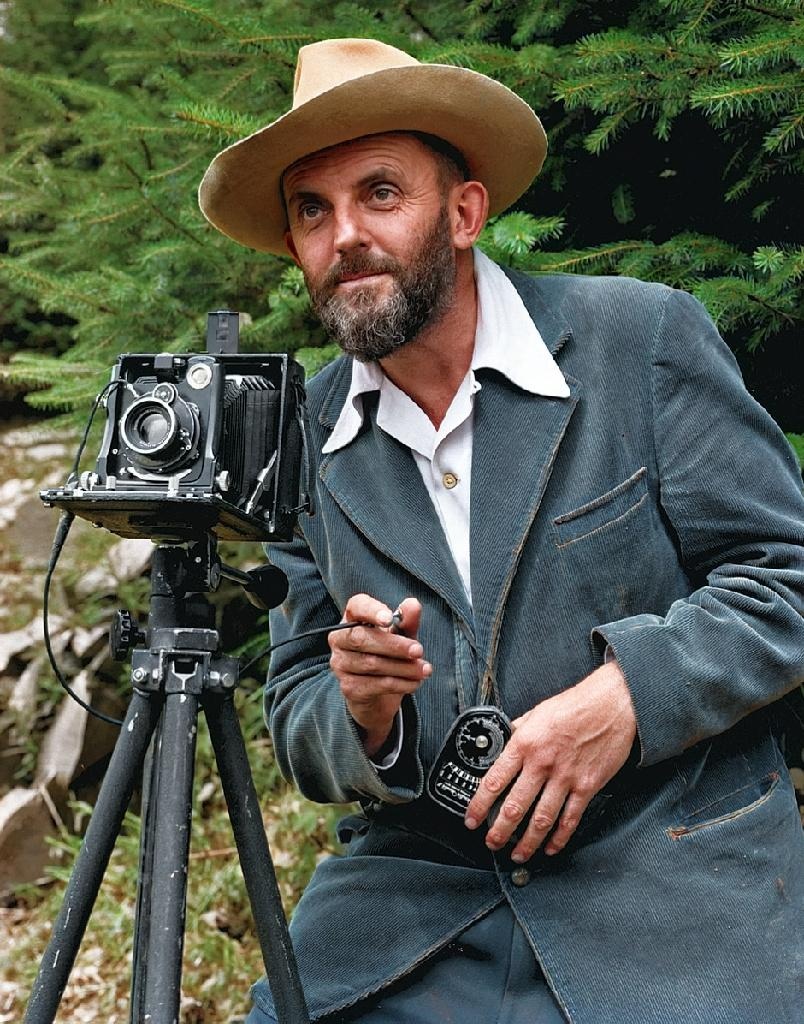

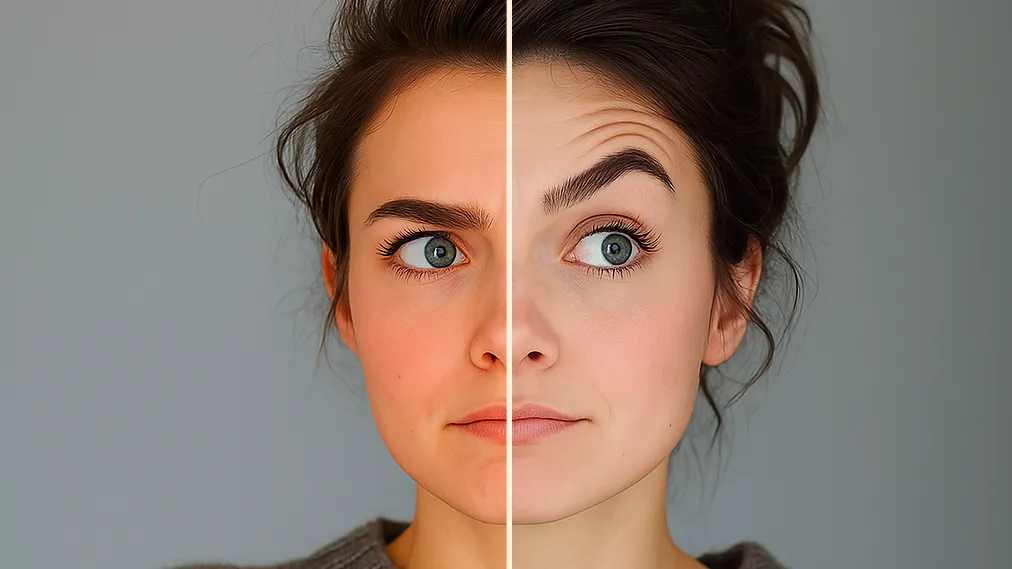

- Face restoration - Improve the quality of faces in old photos, or fix unrealistic AI-generated faces.

Our Picks

Best photo restoration model: jingyunliang/swinir

If you need to sharpen a blurry photo, upscale a small picture, or remove noise or compression artifacts, start with jingyunliang/swinir. It's a fast and powerful model for many types of photo restoration. Another popular choice is megvii-research/nafnet.

Best colorization model: piddnad/ddcolor

The best model for adding color to black and white photos is piddnad/ddcolor. It runs faster and produces more vibrant results than other colorization models. If you are looking for more diverse outputs, try cjwbw/bigcolor, which gives you several options from one input.

Best face restoration model: tencentarc/gfpgan

If you’re looking for a face restoration model, start with tencentarc/gfpgan, which runs in a fraction of a second. If you need even more realistic faces, try lucataco/codeformer.

For more options, check out our image upscaling collection.

Featured models

Use FLUX Kontext to restore, fix scratches and damage, and colorize old photos

Updated 3 months, 1 week ago

943.5K runs

sczhou/codeformer

Robust face restoration algorithm for old photos / AI-generated faces

Updated 1 year, 1 month ago

50.3M runs

tencentarc/gfpgan

Practical face restoration algorithm for *old photos* or *AI-generated faces*

Updated 1 year, 11 months ago

109.2M runs

piddnad/ddcolor

Towards Photo-Realistic Image Colorization via Dual Decoders

Updated 2 years, 1 month ago

1.9M runs

jingyunliang/swinir

Image Restoration Using Swin Transformer

Updated 3 years, 4 months ago

6.3M runs

megvii-research/nafnet

Nonlinear Activation Free Network for Image Restoration

Updated 3 years, 9 months ago

1.5M runs

Frequently asked questions

Which models are the fastest for restoring images?

If you need quick results, tencentarc/gfpgan and cjwbw/bigcolor are among the fastest options in the image-restoration collection. They can process typical images in just a few seconds, which makes them great for quick cleanup of old or low-quality photos.

Faster models can sometimes leave minor artifacts, especially on heavily damaged images.

Which models offer the best balance of quality and flexibility?

jingyunliang/swinir is a strong all-around model for general image restoration — it handles denoising, deblurring, and improving low-resolution images with consistent results.

For more specialized tasks, models like piddnad/ddcolor can add color to black-and-white photos, while megvii-research/nafnet is good for restoring sharpness and structure without over-smoothing.

What works best for restoring old or damaged photos with faces?

If your image includes faces — like family portraits or old photographs — tencentarc/gfpgan is designed for this exact purpose. It reconstructs facial features realistically even when the original image is blurry or heavily degraded.

You can pair it with another restoration model like jingyunliang/swinir if you want to clean up the background or sharpen other areas.
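
Pairing two models amounts to feeding the first model's output into the second. The following is a minimal sketch of that idea, not a definitive implementation. The helper names `first_output_url` and `run_chain` are hypothetical, `run` is assumed to behave like the `replicate.run` function from the Replicate Python client, and the sketch assumes that converting a model output with `str()` yields a URL the next model can read.

```python
from typing import Any, Callable, Sequence, Tuple


def first_output_url(output: Any) -> str:
    """Normalize a model output (single item or list) to one URL string."""
    if isinstance(output, (list, tuple)):
        if not output:
            raise ValueError("model returned no outputs")
        output = output[0]
    # Assumption: str() of the output gives a URL usable as the next input.
    return str(output)


def run_chain(
    image_url: str,
    steps: Sequence[Tuple[str, str]],
    run: Callable[..., Any],
) -> str:
    """Feed the image through each (model_ref, input_key) step in order."""
    current = image_url
    for model_ref, input_key in steps:
        # Each model names its image input differently, so the key is
        # supplied per step rather than hard-coded.
        current = first_output_url(run(model_ref, input={input_key: current}))
    return current
```

A call might look like `run_chain(url, [("tencentarc/gfpgan", "img"), ("jingyunliang/swinir", "image")], run=replicate.run)`. The input keys `img` and `image` here are assumptions, so check each model's API schema, and note that some models may need an `owner/name:version` reference.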

What’s best if I want to add color to vintage black-and-white images?

For faithful colorization of grayscale images, piddnad/ddcolor is the top choice in the image-restoration collection. It can handle portraits, landscapes, and even artistic stills with good accuracy.

If you prefer more varied or stylized color options, cjwbw/bigcolor generates multiple alternative colorizations from the same input.

How do the main types of image-restoration models differ?

- Face restoration models like tencentarc/gfpgan are focused on reconstructing facial details while keeping them natural.

- General restoration models such as jingyunliang/swinir and megvii-research/nafnet clean up noise, fix blur, and enhance structure across the whole image.

- Colorization models like piddnad/ddcolor or cjwbw/bigcolor add or enhance color information after restoration.

- Some models are speed-oriented, while others focus on fine detail and quality.
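
The categories above can be captured as a small lookup table when choosing a model in code. This is only a sketch: the task keys and the `suggest_models` helper are hypothetical names, while the model identifiers are the ones listed on this page.

```python
from typing import Dict, List

# Task names are illustrative; the model picks come from this page.
MODELS_BY_TASK: Dict[str, List[str]] = {
    "face_restoration": ["tencentarc/gfpgan"],
    "general_restoration": ["jingyunliang/swinir", "megvii-research/nafnet"],
    "colorization": ["piddnad/ddcolor", "cjwbw/bigcolor"],
}


def suggest_models(task: str) -> List[str]:
    """Return candidate models for a task, first entry being the default."""
    try:
        # Return a copy so callers cannot mutate the shared table.
        return list(MODELS_BY_TASK[task])
    except KeyError:
        known = ", ".join(sorted(MODELS_BY_TASK))
        raise ValueError(f"unknown task {task!r}; expected one of: {known}") from None
```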

What kinds of outputs can I expect?

Most models in this collection return restored image files in PNG or JPG format. Depending on the model, you might see:

- Sharper, clearer faces.

- Reduced noise and blur.

- Improved resolution and structure.

- Realistic or stylized color added to grayscale images.

Each model focuses on different aspects of restoration, so results will vary.
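
Because the output format can differ between models, it can help to pick the file extension from the downloaded bytes rather than guessing. The sketch below uses only the standard library and checks the well-known PNG and JPEG file signatures. The helper names `image_extension` and `save_output` are hypothetical, and the sketch assumes you already have the output as raw bytes.

```python
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # fixed 8-byte PNG file header
JPEG_PREFIX = b"\xff\xd8\xff"  # JPEG start-of-image marker plus next marker byte


def image_extension(data: bytes) -> str:
    """Return '.png' or '.jpg' based on the leading bytes of the file."""
    if data.startswith(PNG_SIGNATURE):
        return ".png"
    if data.startswith(JPEG_PREFIX):
        return ".jpg"
    raise ValueError("unrecognized image format")


def save_output(data: bytes, stem: str, directory: Path = Path(".")) -> Path:
    """Write the bytes to '<directory>/<stem><ext>' and return the path."""
    path = directory / (stem + image_extension(data))
    path.write_bytes(data)
    return path
```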

How can I self-host or publish my own image-restoration model?

You can package your own restoration model with Cog and push it to Replicate. This lets you set up inputs, outputs, and configuration while deciding whether to keep it private or share it with others.
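
As a rough illustration, a Cog model is typically a `predict.py` file containing a predictor class, referenced from a `cog.yaml` file. The sketch below shows the general shape only. The restoration step is a placeholder that copies the input unchanged, the `ImportError` fallback exists purely so the sketch can be run without Cog installed, and the input name `image` is an assumption rather than a requirement.

```python
import shutil
import tempfile
from pathlib import Path as _StdPath

try:
    from cog import BasePredictor, Input, Path
except ImportError:
    # Stand-ins so the sketch can be read and run without Cog installed.
    BasePredictor = object
    Path = _StdPath

    def Input(default=None, **_kwargs):
        return default


class Predictor(BasePredictor):
    def setup(self) -> None:
        """Load model weights once, before any predictions (placeholder)."""
        self.model = None  # hypothetical: load your restoration network here

    def predict(
        self,
        image: Path = Input(description="Degraded input image"),
    ) -> Path:
        """Return a restored copy of the input image."""
        out_dir = _StdPath(tempfile.mkdtemp())
        out_path = out_dir / ("restored" + _StdPath(str(image)).suffix)
        # Placeholder: a real predictor would run self.model on the image.
        shutil.copyfile(str(image), str(out_path))
        return Path(str(out_path))
```

With a matching `cog.yaml` in place, the model can then be pushed with `cog push`. The Cog documentation covers the exact configuration keys and the destination format.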

Can I use image-restoration models for commercial work?

Many models in the image-restoration collection allow commercial use, but license terms vary. Always check the model’s license and attribution requirements before using outputs in commercial projects.

How do I use these models on Replicate?

- Choose a model from the image-restoration collection.

- Upload your image or provide a URL.

- Select options like face restoration, colorization, or enhancement if available.

- Run the model and download the restored output.

- Use the restored image in your workflow — for printing, archiving, or creative projects.
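
The steps above can also be scripted. The following is a minimal sketch, assuming the `replicate` Python client is installed and the `REPLICATE_API_TOKEN` environment variable is set. The helper name `restore_image` and the default input key `image` are assumptions for illustration: each model documents its own input names, and some models may need an `owner/name:version` reference instead of a bare `owner/name`.

```python
from typing import Any, Callable, Optional


def restore_image(
    model_ref: str,
    image: Any,
    input_key: str = "image",  # assumption: input names differ per model
    extra_input: Optional[dict] = None,
    run: Optional[Callable[..., Any]] = None,
) -> Any:
    """Run one restoration model and return whatever the model outputs."""
    if run is None:
        # Imported lazily so the helper can be exercised without the client.
        import replicate

        run = replicate.run

    # Merge the image with any model-specific options (scale, task, etc.).
    payload = {input_key: image, **(extra_input or {})}
    return run(model_ref, input=payload)
```

For example, `restore_image("jingyunliang/swinir", "https://example.com/old-photo.jpg")` would submit a hypothetical image URL to the general restoration model. What comes back (a URL, a file-like object, or a list of either) depends on the model and the client version.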

What should I know before restoring an image?

- The better your input scan or photo, the better the result.

- Face restoration works best when faces are at least partially visible.

- Colorization works best when the base image is clean, so consider running a restoration model first.

- Larger images may take longer to process.

- Restoration can recover or enhance details, but it won’t perfectly recreate lost information.

- Always inspect outputs carefully, especially for historical or sentimental photos.