Generate speech

Generate natural-sounding speech from text. Clone your own voice, or choose from a range of languages and speaking styles.

Featured models

Minimax Speech 2.8 Turbo: Turn text into natural, expressive speech with voice cloning, emotion control, and support for 40+ languages

Updated 2 weeks ago

11.5K runs

The fastest open-source TTS model without sacrificing quality.

Updated 2 months ago

130.5K runs

jaaari/kokoro-82m: Kokoro v1.0, a text-to-speech model (82M parameters, based on StyleTTS2)

Updated 1 year ago

79.7M runs


Frequently asked questions

Which models are the fastest for generating speech?

If low latency matters most, minimax/speech-02-turbo is the standout model in the text-to-speech collection. It’s designed for near real-time audio generation, making it ideal for interactive experiences like chatbots, voice assistants, and in-game dialogue.

Higher-fidelity models like afiaka87/tortoise-tts are slower and better suited for offline rendering or projects where speed isn’t critical.
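Latency also depends on your text length and network, so it can be worth timing candidates on your own input before committing. The sketch below is a minimal, hypothetical benchmark: measure_latency, compare_models, and replicate_runner are illustrative names, and replicate_runner assumes each model accepts an input field called "text" (check each model's API schema, since field names differ between models).

```python
import time
from statistics import median
from typing import Callable, Dict, List, Tuple


def measure_latency(synthesize: Callable[[str], object], text: str, runs: int = 3) -> float:
    """Return the median wall-clock time, in seconds, of `runs` calls to synthesize(text)."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    timings: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        synthesize(text)  # the audio itself is discarded; only the elapsed time is kept
        timings.append(time.perf_counter() - start)
    return median(timings)


def compare_models(runners: Dict[str, Callable[[str], object]], text: str,
                   runs: int = 3) -> List[Tuple[str, float]]:
    """Rank candidate models from fastest to slowest by median latency."""
    results = [(name, measure_latency(fn, text, runs)) for name, fn in runners.items()]
    return sorted(results, key=lambda pair: pair[1])


def replicate_runner(model: str) -> Callable[[str], object]:
    """Wrap a Replicate model as a text -> output callable.

    Assumption: the model takes its input text in a field named "text".
    """
    def run(text: str) -> object:
        import replicate  # lazy import so the timing helpers work without the client installed
        return replicate.run(model, input={"text": text})
    return run
```

For example, compare_models({m: replicate_runner(m) for m in ["minimax/speech-02-turbo", "minimax/speech-02-hd"]}, "Hello there!") returns the models ordered by measured latency. Using the median rather than the mean keeps one slow cold start from dominating the result.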

Which models offer the best balance of cost, speed, and voice quality?

minimax/speech-02-hd is a strong all-around option in the text-to-speech collection. It provides clear, natural voices with expressive control and reasonable generation time.

Open-source options like afiaka87/tortoise-tts may be more cost-efficient to self-host, but they’re slower and less predictable in performance.

What works best for creating high-quality voiceovers or narration?

For polished audio content like voiceovers, podcasts, audiobooks, or narration, minimax/speech-02-hd is a great fit. It supports expressive delivery, natural pacing, and multiple languages. If you need finer emotional control or unique character voices, resemble-ai/chatterbox also performs well.

What’s best for real-time speech in apps or games?

For applications where speed is essential, such as voice-enabled apps, live interactions, or game characters, minimax/speech-02-turbo is the best match. It prioritizes fast generation and low latency while maintaining solid audio clarity.

How do the main types of text-to-speech models differ?

- Turbo vs HD: Turbo models focus on speed with lower latency, while HD models prioritize audio quality and expressiveness.

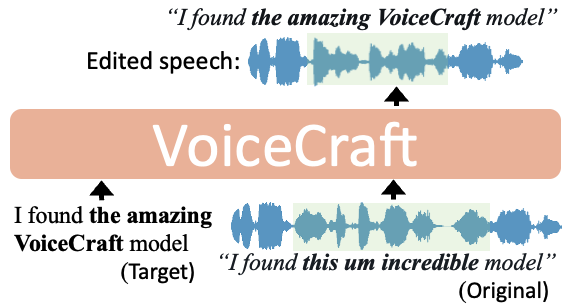

- Voice cloning vs preset voices: Some models (e.g., resemble-ai/chatterbox and MiniMax voice cloning) let you clone a voice from a short audio sample. Others offer only a fixed set of voices.

- Emotion and language support: Advanced models support multiple languages and emotional tone, while simpler ones stick to a single voice style.

- Open vs hosted: Open-source models can be self-hosted but take more setup, while models hosted on Replicate run through its API with no infrastructure to configure.
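The trade-offs above, combined with the recommendations elsewhere in this FAQ, can be condensed into a rule of thumb. This is a hypothetical helper, not part of any Replicate API: Requirements and pick_model are illustrative names, and the order in which requirements are checked (self-hosting first, then latency, then cloning or emotion) is an assumption that treats hosting and latency as hard constraints.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    low_latency: bool = False      # interactive apps, chatbots, live game dialogue
    voice_cloning: bool = False    # clone a voice from a short reference sample
    emotion_control: bool = False  # expressive, character-driven delivery
    self_host: bool = False        # run an open-source model on your own hardware


def pick_model(req: Requirements) -> str:
    """Map requirements to the models this FAQ recommends (a rule of thumb, not a benchmark)."""
    if req.self_host:
        return "afiaka87/tortoise-tts"    # open source; slower, suited to offline rendering
    if req.low_latency:
        return "minimax/speech-02-turbo"  # Turbo: optimized for speed and low latency
    if req.voice_cloning or req.emotion_control:
        return "resemble-ai/chatterbox"   # emotion control and fast voice cloning
    return "minimax/speech-02-hd"         # HD: quality-first default for voiceovers and narration
```

For instance, pick_model(Requirements(emotion_control=True)) suggests resemble-ai/chatterbox, while an empty Requirements() falls back to the HD model.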

What’s best for expressive or character-driven audio?

For projects like games, animations, or storytelling, resemble-ai/chatterbox excels. It supports emotion control and fast voice cloning, letting you generate distinct character voices from just a few seconds of reference audio.
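Because cloning quality depends on the reference clip, a quick length check before uploading can save a wasted run. The sketch below assumes a PCM WAV reference file and assumes the cloning model takes its text in a field named "prompt" and its reference audio in a field named "audio_prompt"; confirm both names in the model's API schema. The 3-second minimum and the helper names (wav_duration_seconds, build_clone_input, clone_voice) are illustrative, not values or functions from Replicate.

```python
import wave
from typing import Any, BinaryIO, Dict


def wav_duration_seconds(path: str) -> float:
    """Duration of a PCM WAV file, computed as frame count / sample rate."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def build_clone_input(text: str, reference: BinaryIO) -> Dict[str, Any]:
    # Field names are assumptions; check the model's API schema on Replicate.
    return {"prompt": text, "audio_prompt": reference}


def clone_voice(text: str, reference_wav: str, min_seconds: float = 3.0,
                model: str = "resemble-ai/chatterbox") -> Any:
    """Check the reference clip length, then run a voice-cloning model on Replicate."""
    duration = wav_duration_seconds(reference_wav)
    if duration < min_seconds:  # illustrative threshold, not a documented requirement
        raise ValueError(f"reference clip is {duration:.1f}s; use at least {min_seconds}s")
    import replicate  # lazy import so the helpers above work without the client installed
    with open(reference_wav, "rb") as reference:  # the client uploads open file handles
        return replicate.run(model, input=build_clone_input(text, reference))
```

Checking the clip locally keeps the failure fast and free; the actual minimum length a model needs is best taken from its model page.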

What types of outputs can I expect from text-to-speech models?

Most text-to-speech models return audio files, typically in MP3 format. Some also support WAV. Output voice options and supported languages vary by model, so check the model page for specifics.
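Since the output format varies by model, it can help to check which format you actually received before saving or post-processing it. This minimal sketch inspects the file's leading bytes: a WAV file is a RIFF container with the form type "WAVE" at bytes 8 to 11, and an MP3 usually starts with an ID3 tag or an MPEG frame sync whose layer bits indicate Layer III. The function names are hypothetical, and other formats are simply reported as "unknown".

```python
def detect_audio_format(data: bytes) -> str:
    """Guess "wav" or "mp3" from the first bytes of an audio file; otherwise return "unknown"."""
    # WAV: "RIFF" magic, then a 4-byte size, then the form type "WAVE".
    if len(data) >= 12 and data[:4] == b"RIFF" and data[8:12] == b"WAVE":
        return "wav"
    # MP3 with an ID3v2 metadata tag at the start of the file.
    if data[:3] == b"ID3":
        return "mp3"
    # Bare MPEG audio frame: 11 sync bits set, and layer bits == 01 (Layer III).
    # The layer check rules out AAC ADTS streams, which share the sync pattern.
    if len(data) >= 2 and data[0] == 0xFF and (data[1] & 0xE0) == 0xE0 and (data[1] & 0x06) == 0x02:
        return "mp3"
    return "unknown"


def save_audio(data: bytes, stem: str) -> str:
    """Write audio bytes to stem + the detected extension and return the path."""
    fmt = detect_audio_format(data)
    if fmt == "unknown":
        raise ValueError("unrecognized audio format")
    path = f"{stem}.{fmt}"
    with open(path, "wb") as f:
        f.write(data)
    return path
```

Header sniffing is a heuristic; if a model page documents an output-format parameter, setting it explicitly is more reliable.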

How can I self-host or push a text-to-speech model to Replicate?

Open-source models like afiaka87/tortoise-tts can be self-hosted with standard tooling. To publish your own model on Replicate, package it with Cog, Replicate's open-source tool for containerizing models, and push it to your account with the cog push command.

Can I use text-to-speech models for commercial work?

Many models in the text-to-speech collection support commercial use, but always check the license. Some models include watermarking or usage restrictions that may affect how you use the audio in commercial projects.

How do I use text-to-speech models on Replicate?

- Choose a model from the text-to-speech collection.

- Provide your text input, and optionally a voice sample if cloning is supported.

- Run the model to generate audio.

- Download the file in the available output format (usually MP3 or WAV).
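The steps above map onto a few lines of Python with Replicate's official client (pip install replicate, with REPLICATE_API_TOKEN set in your environment). This is a sketch under assumptions: the "text" input field name and the default model are illustrative choices, so check the model's API tab for its real input schema. Depending on the client version, replicate.run may return a file-like object or a URL string, so output_to_bytes (a hypothetical helper) handles both.

```python
import os
import urllib.request
from typing import Any


def output_to_bytes(output: Any) -> bytes:
    """Normalize a model output (file-like object, URL string, or a list of either) to bytes."""
    if isinstance(output, (list, tuple)):
        if not output:
            raise ValueError("model returned no output")
        output = output[0]
    if hasattr(output, "read"):  # newer clients return file-like objects
        return output.read()
    if isinstance(output, str) and output.startswith(("http://", "https://")):
        with urllib.request.urlopen(output) as response:  # older clients return URLs
            return response.read()
    raise TypeError(f"unexpected output type: {type(output).__name__}")


def text_to_speech(text: str, out_path: str, model: str = "minimax/speech-02-hd") -> str:
    """Run a text-to-speech model on Replicate and save the audio to out_path."""
    if "REPLICATE_API_TOKEN" not in os.environ:
        raise RuntimeError("set REPLICATE_API_TOKEN before calling the API")
    import replicate  # lazy import so output_to_bytes can be used on its own
    output = replicate.run(model, input={"text": text})  # "text" field name is an assumption
    with open(out_path, "wb") as f:
        f.write(output_to_bytes(output))
    return out_path
```

Usage: text_to_speech("Welcome to the show.", "welcome.mp3"). Pick the file extension to match the format the model actually returns.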

What else should I consider when working with text-to-speech models?

- Turbo models are ideal for low-latency use; HD models shine in polished, high-quality audio.

- Check language support if you need multilingual output.

- Some models apply audio watermarking, which may limit how you can use the output.

- If you’re cloning voices, make sure you have rights to use the source audio.

- Always review the model license before using outputs commercially.