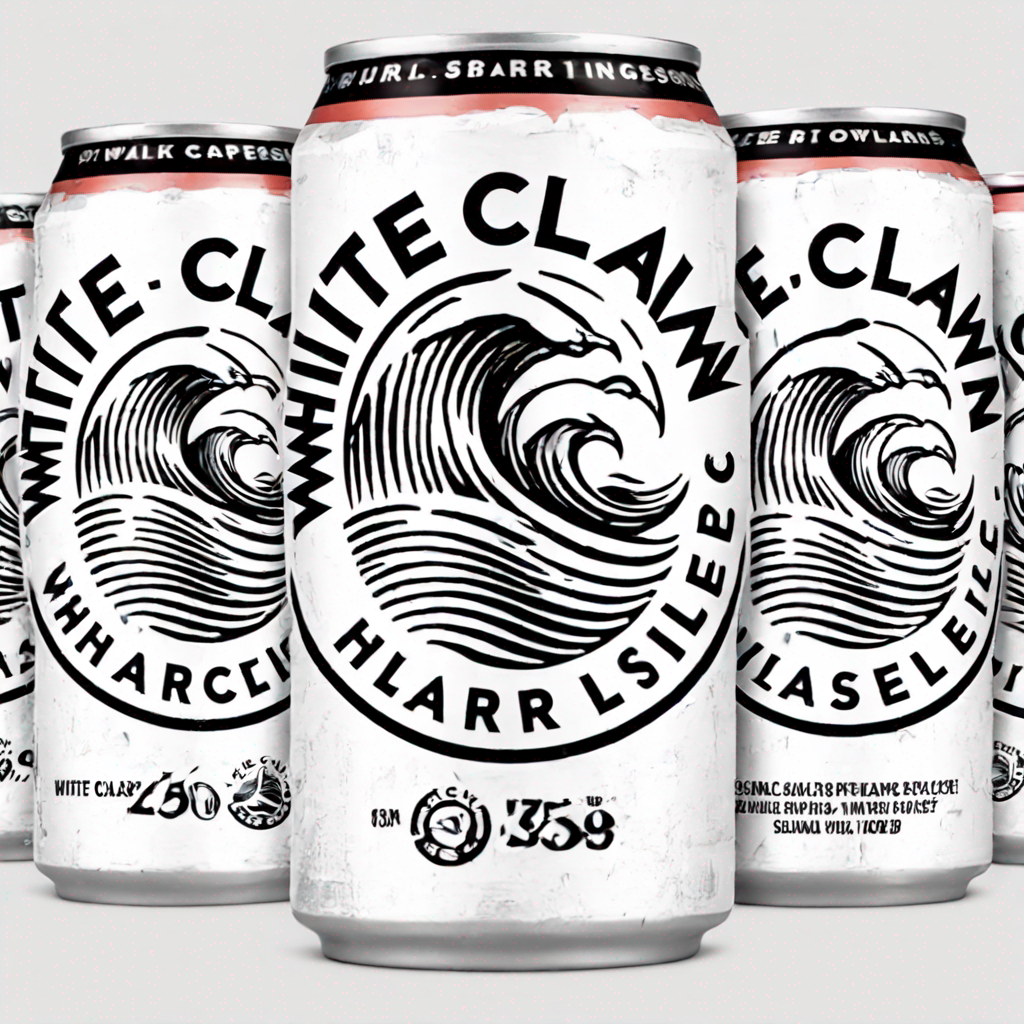

shahildhotre/sdxl-finetune-whiteclaw:8ead6062

Input

Run this model in Node.js with one line of code:

Install Replicate's Node.js client library:

    npm install replicate

Set the REPLICATE_API_TOKEN environment variable:

    export REPLICATE_API_TOKEN=<paste-your-token-here>

Find your API token in your account settings.

    import Replicate from "replicate";
    import { writeFile } from "node:fs/promises";

    const replicate = new Replicate({
      auth: process.env.REPLICATE_API_TOKEN,
    });

Run shahildhotre/sdxl-finetune-whiteclaw using Replicate’s API. Check out the model's schema for an overview of inputs and outputs.

    const output = await replicate.run(
      "shahildhotre/sdxl-finetune-whiteclaw:8ead60620ef130afb844f351e95777224e05a2792c221089461869ee9e4385cf",
      {
        input: {
          width: 1024,
          height: 1024,
          prompt: "white claw cans",
          refine: "no_refiner",
          scheduler: "K_EULER",
          lora_scale: 0.6,
          num_outputs: 1,
          guidance_scale: 7.5,
          apply_watermark: true,
          high_noise_frac: 0.8,
          negative_prompt: "",
          prompt_strength: 0.8,
          num_inference_steps: 50
        }
      }
    );

    // To access the file URL:
    console.log(output[0].url()); //=> "http://example.com"

    // To write the file to disk (the promise-based writeFile needs no callback):
    await writeFile("my-image.png", output[0]);

To learn more, take a look at the guide on getting started with Node.js.

Install Replicate's Python client library:

    pip install replicate

Set the REPLICATE_API_TOKEN environment variable:

    export REPLICATE_API_TOKEN=<paste-your-token-here>

Find your API token in your account settings.

    import replicate

Run shahildhotre/sdxl-finetune-whiteclaw using Replicate’s API. Check out the model's schema for an overview of inputs and outputs.

    output = replicate.run(
        "shahildhotre/sdxl-finetune-whiteclaw:8ead60620ef130afb844f351e95777224e05a2792c221089461869ee9e4385cf",
        input={
            "width": 1024,
            "height": 1024,
            "prompt": "white claw cans",
            "refine": "no_refiner",
            "scheduler": "K_EULER",
            "lora_scale": 0.6,
            "num_outputs": 1,
            "guidance_scale": 7.5,
            "apply_watermark": True,
            "high_noise_frac": 0.8,
            "negative_prompt": "",
            "prompt_strength": 0.8,
            "num_inference_steps": 50
        }
    )
    print(output)

To learn more, take a look at the guide on getting started with Python.
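The Node.js example writes the first output to disk; a Python counterpart might look like the sketch below. It assumes each item in `output` is either a URL string (as in the prediction's `output` list) or a file-like object exposing `read()`, which can vary by client version. `save_output` is a hypothetical helper for illustration, not part of the `replicate` package.

```python
import urllib.request
from pathlib import Path


def save_output(item, path):
    """Write one prediction output to `path` and return the number of bytes.

    Hypothetical helper: `item` is assumed to be either a file-like object
    with read() or a URL string pointing at the generated image.
    """
    if hasattr(item, "read"):
        data = item.read()  # file-like output object
    else:
        # Assumed to be a URL such as the replicate.delivery link in the output
        with urllib.request.urlopen(str(item)) as response:
            data = response.read()
    Path(path).write_bytes(data)
    return len(data)


# Usage (needs network access and a completed prediction):
# save_output(output[0], "my-image.png")
```

Checking for `read()` first keeps the helper usable whether the client hands back file objects or plain URLs.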

Set the REPLICATE_API_TOKEN environment variable:

    export REPLICATE_API_TOKEN=<paste-your-token-here>

Find your API token in your account settings.

Run shahildhotre/sdxl-finetune-whiteclaw using Replicate’s API. Check out the model's schema for an overview of inputs and outputs.

    curl -s -X POST \
      -H "Authorization: Bearer $REPLICATE_API_TOKEN" \
      -H "Content-Type: application/json" \
      -H "Prefer: wait" \
      -d $'{
        "version": "shahildhotre/sdxl-finetune-whiteclaw:8ead60620ef130afb844f351e95777224e05a2792c221089461869ee9e4385cf",
        "input": {
          "width": 1024,
          "height": 1024,
          "prompt": "white claw cans",
          "refine": "no_refiner",
          "scheduler": "K_EULER",
          "lora_scale": 0.6,
          "num_outputs": 1,
          "guidance_scale": 7.5,
          "apply_watermark": true,
          "high_noise_frac": 0.8,
          "negative_prompt": "",
          "prompt_strength": 0.8,
          "num_inference_steps": 50
        }
      }' \
      https://api.replicate.com/v1/predictions

To learn more, take a look at Replicate’s HTTP API reference docs.
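For readers who prefer to see the same HTTP call without a client library, here is a minimal sketch that builds the equivalent request with Python's standard library. The endpoint, headers, and body are taken from the cURL example; the function name `build_prediction_request` and the constants are illustrative assumptions. The request is only constructed here, not sent.

```python
import json
import os
import urllib.request

API_URL = "https://api.replicate.com/v1/predictions"
VERSION = (
    "shahildhotre/sdxl-finetune-whiteclaw:"
    "8ead60620ef130afb844f351e95777224e05a2792c221089461869ee9e4385cf"
)


def build_prediction_request(token, prompt="white claw cans"):
    """Build (but do not send) the POST request from the cURL example."""
    payload = {
        "version": VERSION,
        "input": {
            "width": 1024,
            "height": 1024,
            "prompt": prompt,
            "refine": "no_refiner",
            "scheduler": "K_EULER",
            "lora_scale": 0.6,
            "num_outputs": 1,
            "guidance_scale": 7.5,
            "apply_watermark": True,
            "high_noise_frac": 0.8,
            "negative_prompt": "",
            "prompt_strength": 0.8,
            "num_inference_steps": 50,
        },
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Prefer": "wait",  # same header as the cURL example
        },
        method="POST",
    )


request = build_prediction_request(os.environ.get("REPLICATE_API_TOKEN", ""))
# To send it: urllib.request.urlopen(request), then parse the JSON response.
```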

Output

    {
      "completed_at": "2024-06-28T18:55:28.515955Z",
      "created_at": "2024-06-28T18:55:05.850000Z",
      "data_removed": false,
      "error": null,
      "id": "cxx8t9kyf9rgg0cgc0svj2b70c",
      "input": {
        "width": 1024,
        "height": 1024,
        "prompt": "white claw cans",
        "refine": "no_refiner",
        "scheduler": "K_EULER",
        "lora_scale": 0.6,
        "num_outputs": 1,
        "guidance_scale": 7.5,
        "apply_watermark": true,
        "high_noise_frac": 0.8,
        "negative_prompt": "",
        "prompt_strength": 0.8,
        "num_inference_steps": 50
      },
      "logs": "Using seed: 6337\nEnsuring enough disk space...\nFree disk space: 1732876619776\nDownloading weights: https://replicate.delivery/pbxt/rwvzo0hcN0IWNdZCgfbpizCVnYZH6X7BSjGZLgdFfFvwjLDTA/trained_model.tar\n2024-06-28T18:55:09Z | INFO | [ Initiating ] chunk_size=150M dest=/src/weights-cache/3a601f772800c45d url=https://replicate.delivery/pbxt/rwvzo0hcN0IWNdZCgfbpizCVnYZH6X7BSjGZLgdFfFvwjLDTA/trained_model.tar\n2024-06-28T18:55:12Z | INFO | [ Complete ] dest=/src/weights-cache/3a601f772800c45d size=\"186 MB\" total_elapsed=2.974s url=https://replicate.delivery/pbxt/rwvzo0hcN0IWNdZCgfbpizCVnYZH6X7BSjGZLgdFfFvwjLDTA/trained_model.tar\nb''\nDownloaded weights in 3.123924493789673 seconds\nLoading fine-tuned model\nDoes not have Unet. assume we are using LoRA\nLoading Unet LoRA\nPrompt: white claw cans\ntxt2img mode\n 0%| | 0/50 [00:00<?, ?it/s]/usr/local/lib/python3.9/site-packages/diffusers/models/attention_processor.py:1946: FutureWarning: `LoRAAttnProcessor2_0` is deprecated and will be removed in version 0.26.0. Make sure use AttnProcessor2_0 instead by settingLoRA layers to `self.{to_q,to_k,to_v,to_out[0]}.lora_layer` respectively. This will be done automatically when using `LoraLoaderMixin.load_lora_weights`\ndeprecate(\n 2%|▏ | 1/50 [00:00<00:11, 4.22it/s]\n 4%|▍ | 2/50 [00:00<00:11, 4.22it/s]\n 6%|▌ | 3/50 [00:00<00:11, 4.23it/s]\n 8%|▊ | 4/50 [00:00<00:10, 4.23it/s]\n 10%|█ | 5/50 [00:01<00:10, 4.22it/s]\n 12%|█▏ | 6/50 [00:01<00:10, 4.21it/s]\n 14%|█▍ | 7/50 [00:01<00:10, 4.21it/s]\n 16%|█▌ | 8/50 [00:01<00:09, 4.21it/s]\n 18%|█▊ | 9/50 [00:02<00:09, 4.21it/s]\n 20%|██ | 10/50 [00:02<00:09, 4.21it/s]\n 22%|██▏ | 11/50 [00:02<00:09, 4.21it/s]\n 24%|██▍ | 12/50 [00:02<00:09, 4.21it/s]\n 26%|██▌ | 13/50 [00:03<00:08, 4.21it/s]\n 28%|██▊ | 14/50 [00:03<00:08, 4.21it/s]\n 30%|███ | 15/50 [00:03<00:08, 4.22it/s]\n 32%|███▏ | 16/50 [00:03<00:08, 4.21it/s]\n 34%|███▍ | 17/50 [00:04<00:07, 4.20it/s]\n 36%|███▌ | 18/50 [00:04<00:07, 4.20it/s]\n 38%|███▊ | 19/50 [00:04<00:07, 4.20it/s]\n 40%|████ | 20/50 [00:04<00:07, 4.21it/s]\n 42%|████▏ | 21/50 [00:04<00:06, 4.21it/s]\n 44%|████▍ | 22/50 [00:05<00:06, 4.21it/s]\n 46%|████▌ | 23/50 [00:05<00:06, 4.21it/s]\n 48%|████▊ | 24/50 [00:05<00:06, 4.20it/s]\n 50%|█████ | 25/50 [00:05<00:05, 4.21it/s]\n 52%|█████▏ | 26/50 [00:06<00:05, 4.20it/s]\n 54%|█████▍ | 27/50 [00:06<00:05, 4.20it/s]\n 56%|█████▌ | 28/50 [00:06<00:05, 4.21it/s]\n 58%|█████▊ | 29/50 [00:06<00:05, 4.20it/s]\n 60%|██████ | 30/50 [00:07<00:04, 4.20it/s]\n 62%|██████▏ | 31/50 [00:07<00:04, 4.19it/s]\n 64%|██████▍ | 32/50 [00:07<00:04, 4.19it/s]\n 66%|██████▌ | 33/50 [00:07<00:04, 4.19it/s]\n 68%|██████▊ | 34/50 [00:08<00:03, 4.19it/s]\n 70%|███████ | 35/50 [00:08<00:03, 4.20it/s]\n 72%|███████▏ | 36/50 [00:08<00:03, 4.20it/s]\n 74%|███████▍ | 37/50 [00:08<00:03, 4.19it/s]\n 76%|███████▌ | 38/50 [00:09<00:02, 4.19it/s]\n 78%|███████▊ | 39/50 [00:09<00:02, 4.19it/s]\n 80%|████████ | 40/50 [00:09<00:02, 4.19it/s]\n 82%|████████▏ | 41/50 [00:09<00:02, 4.19it/s]\n 84%|████████▍ | 42/50 [00:09<00:01, 4.19it/s]\n 86%|████████▌ | 43/50 [00:10<00:01, 4.19it/s]\n 88%|████████▊ | 44/50 [00:10<00:01, 4.19it/s]\n 90%|█████████ | 45/50 [00:10<00:01, 4.19it/s]\n 92%|█████████▏| 46/50 [00:10<00:00, 4.20it/s]\n 94%|█████████▍| 47/50 [00:11<00:00, 4.19it/s]\n 96%|█████████▌| 48/50 [00:11<00:00, 4.19it/s]\n 98%|█████████▊| 49/50 [00:11<00:00, 4.19it/s]\n100%|██████████| 50/50 [00:11<00:00, 4.19it/s]\n100%|██████████| 50/50 [00:11<00:00, 4.20it/s]",
      "metrics": {
        "predict_time": 19.541705237,
        "total_time": 22.665955
      },
      "output": [
        "https://replicate.delivery/pbxt/zsR8vhHFrnq3FxvF7ZI1wsI1IlkIHdOi9ceX6QtMTC5PylhJA/out-0.png"
      ],
      "started_at": "2024-06-28T18:55:08.974250Z",
      "status": "succeeded",
      "urls": {
        "get": "https://api.replicate.com/v1/predictions/cxx8t9kyf9rgg0cgc0svj2b70c",
        "cancel": "https://api.replicate.com/v1/predictions/cxx8t9kyf9rgg0cgc0svj2b70c/cancel"
      },
      "version": "8ead60620ef130afb844f351e95777224e05a2792c221089461869ee9e4385cf"
    }
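The timestamps in the prediction record above can be cross-checked against its `metrics` block. The sketch below copies the three timestamps from that record and computes the intervals between them; the helper name `parse_ts` and the variable names are illustrative, and reading `predict_time` as `completed_at - started_at` is an inference from the numbers rather than a documented definition.

```python
from datetime import datetime


def parse_ts(value):
    """Parse an ISO 8601 timestamp ending in 'Z' into an aware datetime."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


# Timestamps copied from the prediction record
prediction = {
    "created_at": "2024-06-28T18:55:05.850000Z",
    "started_at": "2024-06-28T18:55:08.974250Z",
    "completed_at": "2024-06-28T18:55:28.515955Z",
}

created = parse_ts(prediction["created_at"])
started = parse_ts(prediction["started_at"])
completed = parse_ts(prediction["completed_at"])

queue_seconds = (started - created).total_seconds()      # waiting before start
predict_seconds = (completed - started).total_seconds()  # running the model
total_seconds = (completed - created).total_seconds()    # end to end

print(f"queued:  {queue_seconds:.6f}s")
print(f"predict: {predict_seconds:.6f}s")
print(f"total:   {total_seconds:.6f}s")
```

For this record the computed predict and total intervals agree with the reported `predict_time` (19.541705237) and `total_time` (22.665955) to microsecond precision, and the remaining 3.12425 seconds is the time between creation and start.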