
OverLORD

Scaling-up Disentanglement for Image Translation

Aviv Gabbay and Yedid Hoshen

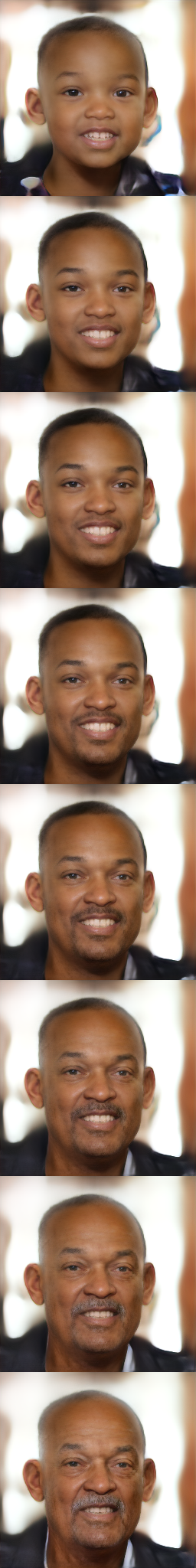

International Conference on Computer Vision (ICCV), 2021.

Abstract: Image translation methods typically aim to manipulate a set of labeled attributes (given as supervision at training time, e.g. a domain label) while leaving the unlabeled attributes intact. Current methods achieve either (i) disentanglement, which exhibits low visual fidelity and can only be satisfied when the attributes are perfectly uncorrelated, or (ii) visually plausible translations, which are clearly not disentangled. In this work, we propose OverLORD, a single framework for disentangling labeled and unlabeled attributes as well as synthesizing high-fidelity images. It is composed of two stages: (i) Disentanglement: learning disentangled representations with latent optimization. Unlike previous approaches, we do not rely on adversarial training or any architectural biases. (ii) Synthesis: training feed-forward encoders for inferring the learned attributes and tuning the generator in an adversarial manner to increase the perceptual quality. When the labeled and unlabeled attributes are correlated, we model an additional representation that accounts for the correlated attributes and improves disentanglement. We highlight that our flexible framework covers multiple settings, such as disentangling labeled attributes, pose and appearance, localized concepts, and shape and texture. We present significantly better disentanglement with higher translation quality and greater output diversity than state-of-the-art methods.
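To make the two-stage recipe more concrete, here is a toy NumPy sketch of stage (i) latent optimization followed by a stage (ii) feed-forward encoder. It is not the authors' implementation and makes several loudly labeled assumptions: the generator is linear, gradient-based training is swapped for closed-form alternating least squares, an L2 penalty on the per-image codes stands in for whatever regularization the real method uses, and the adversarial generator tuning is omitted entirely. All names (`latent_optimization`, `translate`, the dimensions and the synthetic data) are hypothetical.

```python
import numpy as np


def latent_optimization(X, labels, n_labels, d_lab=3, d_unl=2, lam=1e-2, iters=100, seed=0):
    """Hypothetical toy objective: ||[L[labels], U] @ W.T - X||^2 + lam * ||U||^2.

    L holds one embedding per label value, U one free code per image (no encoder),
    and W is a linear stand-in for the generator. Each block is solved exactly.
    """
    rng = np.random.default_rng(seed)
    L = rng.normal(scale=0.1, size=(n_labels, d_lab))
    U = rng.normal(scale=0.1, size=(len(X), d_unl))
    W = rng.normal(scale=0.1, size=(X.shape[1], d_lab + d_unl))

    def objective():
        Z = np.concatenate([L[labels], U], axis=1)
        return np.sum((Z @ W.T - X) ** 2) + lam * np.sum(U ** 2)

    history = [objective()]
    for _ in range(iters):
        # Generator step: least squares for W given all latent codes.
        Z = np.concatenate([L[labels], U], axis=1)
        W = np.linalg.lstsq(Z, X, rcond=None)[0].T
        W_l, W_u = W[:, :d_lab], W[:, d_lab:]
        # Unlabeled-code step: ridge regression for every image's free code.
        resid = X - L[labels] @ W_l.T
        U = np.linalg.solve(W_u.T @ W_u + lam * np.eye(d_unl), W_u.T @ resid.T).T
        # Label step: all images sharing label k share the single embedding L[k].
        resid = X - U @ W_u.T
        W_l_pinv = np.linalg.pinv(W_l)
        for k in range(n_labels):
            if np.any(labels == k):
                L[k] = W_l_pinv @ resid[labels == k].mean(axis=0)
        history.append(objective())
    return L, U, W, history


# Synthetic "images" built from a label factor and a per-image factor (assumption).
rng = np.random.default_rng(42)
n, n_labels, d_img = 200, 4, 16
labels = rng.integers(0, n_labels, size=n)
label_factors = rng.normal(size=(n_labels, 3))
image_factors = rng.normal(size=(n, 2))
mixing = rng.normal(size=(5, d_img))
X = np.concatenate([label_factors[labels], image_factors], axis=1) @ mixing

L, U, W, history = latent_optimization(X, labels, n_labels)

# Stage 2 (sketch): amortize the optimized codes with a feed-forward (here affine) encoder.
X_b = np.hstack([X, np.ones((len(X), 1))])
E = np.linalg.lstsq(X_b, U, rcond=None)[0]


def translate(x, target_label):
    """Keep the image's inferred unlabeled code, swap in another label embedding."""
    u = np.append(x, 1.0) @ E
    return W @ np.concatenate([L[target_label], u])


x_new = translate(X[0], (labels[0] + 1) % n_labels)
```

Because each step minimizes the same objective exactly over one block of parameters, `history` should not increase. Translation then only needs the encoder at test time: no per-image optimization is required once stage 2 has been fitted.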

Description

A framework for high-fidelity disentanglement of labeled and unlabeled attributes. We support two general cases: (i) the labeled and unlabeled attributes are approximately uncorrelated; (ii) the labeled and unlabeled attributes are correlated. For the correlated case, we suggest simple transformations for learning pose-independent or localized correlated attributes, with which we achieve better disentanglement, both quantitatively and qualitatively, than state-of-the-art methods.
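The role of these transformations can be illustrated with a small NumPy sketch. It assumes that the encoder for the correlated representation only ever sees a transformed view T(x) of the image: a view that scrambles position cannot carry pose, and a view that keeps only one region cannot carry anything outside it. The flip and circular shift, the box mask, and the mean-intensity "encoder" below are hypothetical stand-ins, not the transformations or networks used in the paper.

```python
import numpy as np


def pose_removing_view(img, rng, max_shift=4):
    """Hypothetical pose-independent view: random horizontal flip plus circular shift."""
    out = img[:, ::-1] if rng.random() < 0.5 else img
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    return np.roll(out, shift=(int(dy), int(dx)), axis=(0, 1))


def localized_view(img, box):
    """Hypothetical localized view: zero out everything outside box = (y0, y1, x0, x1)."""
    y0, y1, x0, x1 = box
    out = np.zeros_like(img)
    out[y0:y1, x0:x1] = img[y0:y1, x0:x1]
    return out


def toy_correlated_encoder(view):
    """Stand-in for a learned encoder: per-channel mean intensity of the view."""
    return view.reshape(-1, view.shape[-1]).mean(axis=0)


rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))

# Two random pose-removing views give the same code: placement is invisible to it.
r1 = toy_correlated_encoder(pose_removing_view(img, rng))
r2 = toy_correlated_encoder(pose_removing_view(img, rng))

# Editing a pixel outside the box leaves the localized view unchanged.
box = (8, 24, 8, 24)
edited = img.copy()
edited[0, 0] = 0.0
same_local = np.array_equal(localized_view(img, box), localized_view(edited, box))
```

With a learned encoder the invariance would only be as strong as the transformation enforces; the point of the sketch is that the information removed by T(x) is unavailable to the correlated representation by construction.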

Citation

@inproceedings{gabbay2021overlord,
  author    = {Aviv Gabbay and Yedid Hoshen},
  title     = {Scaling-up Disentanglement for Image Translation},
  booktitle = {International Conference on Computer Vision (ICCV)},
  year      = {2021}
}