
Neural-Texture-Extraction-Distribution

The PyTorch implementation of our paper “Neural Texture Extraction and Distribution for Controllable Person Image Synthesis” (CVPR 2022 Oral).

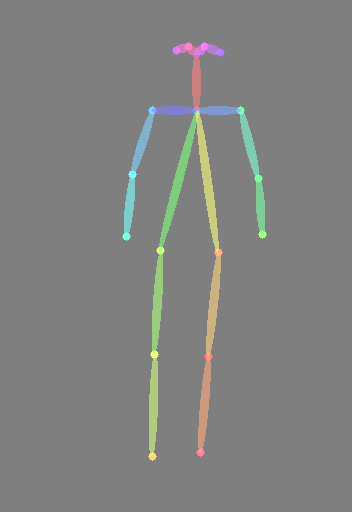

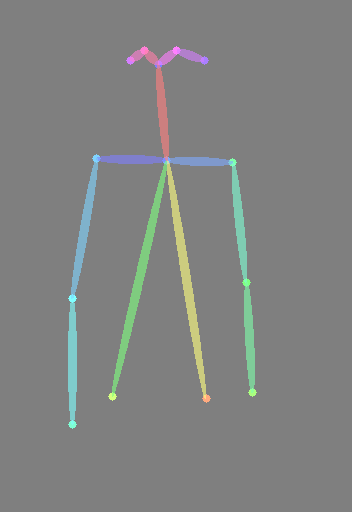

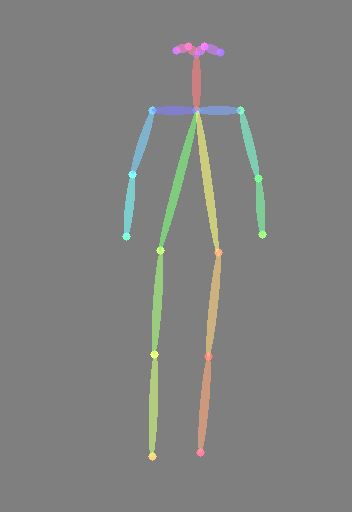

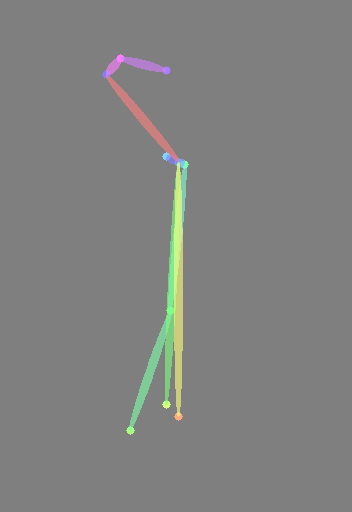

We propose a Neural-Texture-Extraction-Distribution operation for controllable person image synthesis. Our model can be used to control the pose and appearance of a reference image:

NOTE: This demo only supports pose control. For appearance control, please see the original repo to run models locally.

- Pose Control

Usage

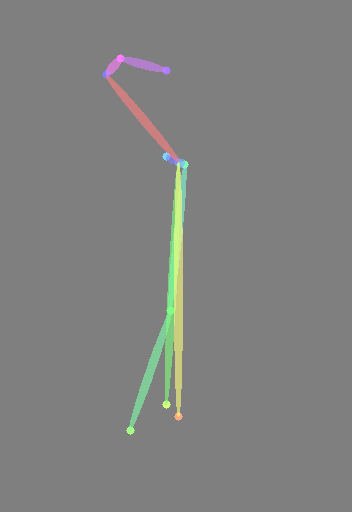

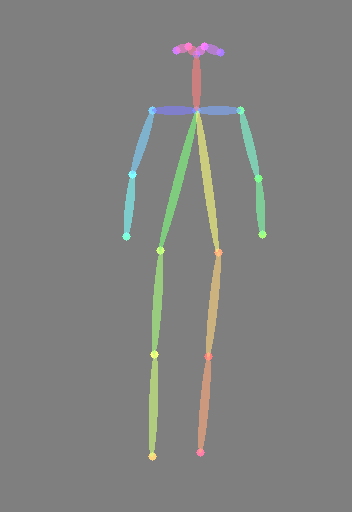

The model takes two inputs: a reference image and a .txt file containing an 18x2 array of OpenPose keypoints. For the keypoint ordering, see "Pose Output Format: COCO" in the OpenPose documentation.
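As a rough illustration of the keypoint input, the sketch below writes and reads an 18x2 array with NumPy. The file layout is an assumption, since this README does not specify it: one keypoint per line, two whitespace-separated numbers per line. The column order (x, y or y, x) is also not stated here, so check it against the original repo. The helper names `save_keypoints` and `load_keypoints` are hypothetical and not part of this project.

```python
import numpy as np

NUM_KEYPOINTS = 18  # OpenPose COCO layout: 18 keypoints, 2 coordinates each


def _check_shape(keypoints):
    """Raise if the array is not 18x2."""
    if keypoints.shape != (NUM_KEYPOINTS, 2):
        raise ValueError(
            f"expected shape ({NUM_KEYPOINTS}, 2), got {keypoints.shape}"
        )


def save_keypoints(path, keypoints):
    """Write keypoints as text, one keypoint per line (assumed layout)."""
    keypoints = np.asarray(keypoints, dtype=np.float32)
    _check_shape(keypoints)
    np.savetxt(path, keypoints, fmt="%.2f")


def load_keypoints(path):
    """Read a whitespace-separated 18x2 keypoint file (assumed layout)."""
    keypoints = np.loadtxt(path, dtype=np.float32, ndmin=2)
    _check_shape(keypoints)
    return keypoints
```

Validating the shape before uploading catches the most common mistake, a file with missing or extra keypoints, before the model ever sees it.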

For best results, use a reference image with a clean, single-color background; this helps feature extraction and reduces artifacts. Also note that the model was trained on a limited range of demographics, so inputs outside that range may produce artifacts.
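One way to sanity-check the background before running the model is to look at how much the pixels along the image border vary. This is only a heuristic sketch and not part of this project: the function name `border_is_uniform`, the border width, and the threshold are all hypothetical choices, and the check can give a false negative when the person touches the image edge.

```python
import numpy as np


def border_is_uniform(image, border=8, max_std=12.0):
    """Return True if the image border looks like a single flat color.

    `image` is an HxWx3 array (e.g. uint8 RGB). `border` and `max_std`
    are illustrative defaults, not values taken from the model.
    """
    image = np.asarray(image, dtype=np.float32)
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an HxWx3 image array")

    # Collect the pixels in a strip along each of the four edges.
    strips = [
        image[:border].reshape(-1, 3),      # top
        image[-border:].reshape(-1, 3),     # bottom
        image[:, :border].reshape(-1, 3),   # left
        image[:, -border:].reshape(-1, 3),  # right
    ]
    pixels = np.concatenate(strips)

    # A flat background has low per-channel standard deviation.
    return bool(pixels.std(axis=0).max() <= max_std)
```

An image that fails this check may still work, but it is a reasonable candidate for cropping or background removal first.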

The model outputs a visualization of the target skeleton, along with the person from the reference image re-posed to match that skeleton.