High-Fidelity GAN Inversion for Image Attribute Editing

Update: We released the inference code and the pre-trained model on Oct. 31. The training code is coming soon.

paper | project website | demo video

Introduction

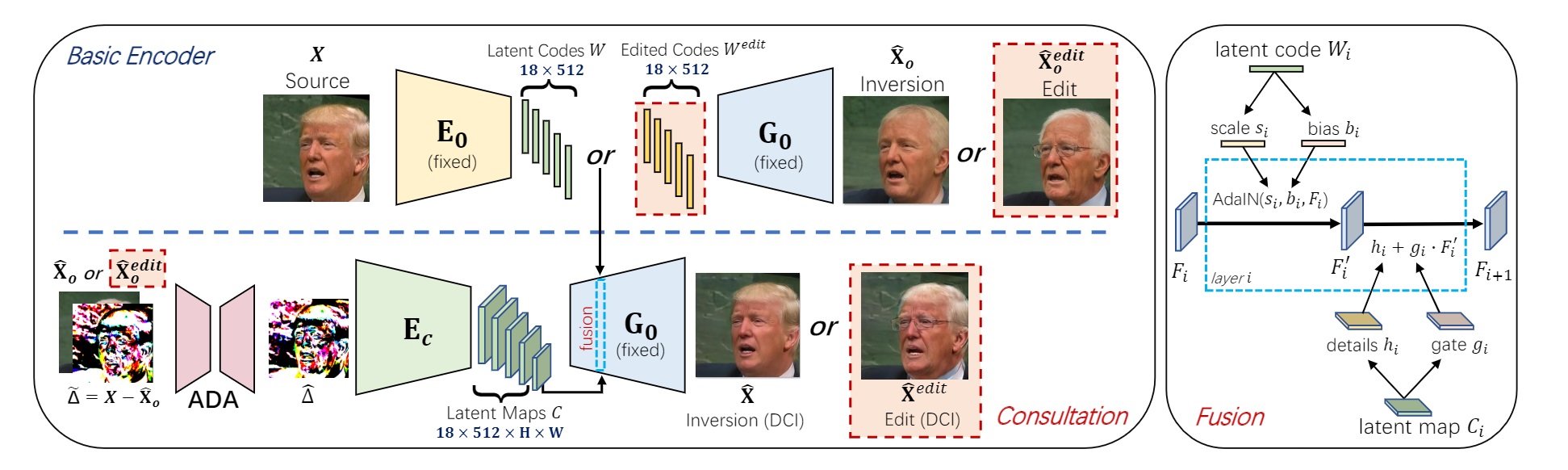

We present a novel high-fidelity GAN inversion framework that enables attribute editing while preserving image-specific details (e.g., background, appearance, and illumination).

Citation

If you find this work useful for your research, please cite:

@article{wang2021HFGI,
  author = {Tengfei Wang and Yong Zhang and Yanbo Fan and Jue Wang and Qifeng Chen},
  title = {High-Fidelity GAN Inversion for Image Attribute Editing},
  journal = {arxiv:2109.06590},
  year = {2021}
}