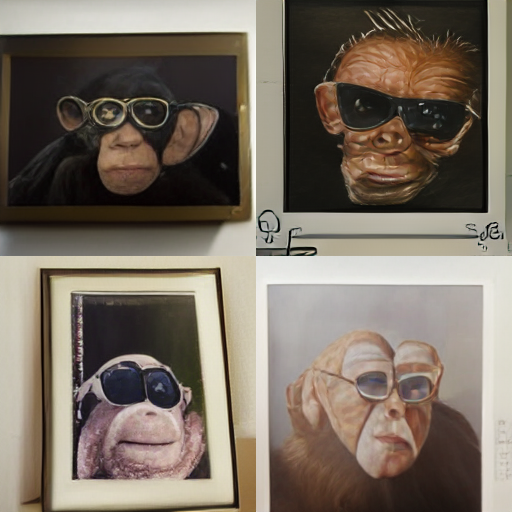

minDALL-E on Conceptual Captions

minDALL-E, named after minGPT, is a 1.3B-parameter text-to-image generation model trained on 14 million image-text pairs for non-commercial purposes.

BibTeX

If you find this repository useful in your research, please cite:

@misc{kakaobrain2021minDALL-E,
  title        = {minDALL-E on Conceptual Captions},
  author       = {Saehoon Kim and Sanghun Cho and Chiheon Kim and Doyup Lee and Woonhyuk Baek},
  year         = {2021},
  howpublished = {\url{https://github.com/kakaobrain/minDALL-E}},
}

References

- [1] Ramesh et al. Zero-Shot Text-to-Image Generation, ICML 2021.

- [2] Esser et al. Taming Transformers for High-Resolution Image Synthesis, CVPR 2021.

- [3] Karras et al. A Style-Based Generator Architecture for Generative Adversarial Networks, CVPR 2019.

- [4] Sharma et al. Conceptual Captions: A Cleaned, Hypernymed, Image Alt-text Dataset For Automatic Image Captioning, ACL 2018.

- [5] Changpinyo et al. Conceptual 12M: Pushing Web-Scale Image-Text Pre-Training To Recognize Long-Tail Visual Concepts, CVPR 2021.

- [6] Radford et al. Learning Transferable Visual Models From Natural Language Supervision, ICML 2021.

- [7] Esser et al. ImageBART: Bidirectional Context with Multinomial Diffusion for Autoregressive Image Synthesis, NeurIPS 2021.

- [8] Karpathy. minGPT, https://github.com/karpathy/minGPT

Licenses

- The source code is licensed under the Apache 2.0 License.

- The stage2 pretrained weights are licensed under the CC-BY-NC-SA 4.0 License.

Contact

We hope that minDALL-E helps various projects in research-oriented institutes and startups.

If you would like to collaborate with us or share feedback, please e-mail us at contact@kakaobrain.com.

Limitations

Although minDALL-E is trained on a relatively small dataset (14M image-text pairs), it may still be vulnerable to malicious prompt engineering that produces socially unacceptable images. If you observe such images, please report the “prompt” and the “generated images” to us.