
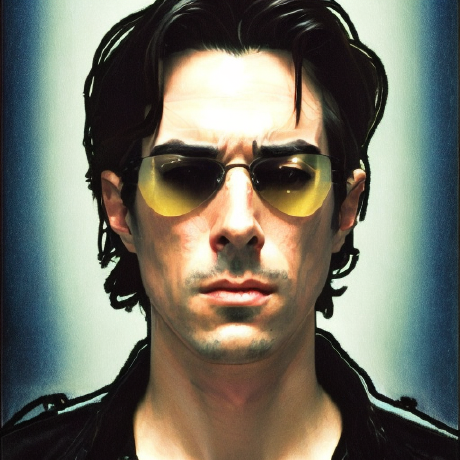

Create variations of an image while preserving shape and depth.

The stable-diffusion-2-depth model is resumed from stable-diffusion-2-base (512-base-ema.ckpt) and finetuned for 200k steps. An extra input channel was added to process the (relative) depth prediction produced by MiDaS (dpt_hybrid), which is used as additional conditioning.
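The extra conditioning channel can be illustrated with a minimal NumPy sketch. It assumes SD 2.x conventions (4-channel VAE latents at 1/8 of the image resolution) and a relative depth map, such as a MiDaS output, given at full image resolution. The depth map is brought down to latent resolution, rescaled to [-1, 1], and concatenated with the latents, so the denoising network sees 5 input channels. The helper names `prepare_depth_condition` and `unet_input` are hypothetical, and block-average pooling is a simplification standing in for whatever interpolation a real pipeline uses.

```python
import numpy as np

LATENT_CHANNELS = 4  # assumption: SD 2.x VAE latents have 4 channels
VAE_SCALE = 8        # assumption: the VAE downsamples height and width by 8


def prepare_depth_condition(depth: np.ndarray) -> np.ndarray:
    """Hypothetical helper: map a relative depth map (H, W) to a (1, H/8, W/8) channel in [-1, 1]."""
    h, w = depth.shape
    if h % VAE_SCALE or w % VAE_SCALE:
        raise ValueError("image height and width must be multiples of 8")
    # Block-average pooling down to latent resolution (a simple stand-in for interpolation).
    pooled = depth.reshape(h // VAE_SCALE, VAE_SCALE, w // VAE_SCALE, VAE_SCALE).mean(axis=(1, 3))
    d_min, d_max = pooled.min(), pooled.max()
    if d_max == d_min:
        # A constant depth map carries no shape information; use a neutral channel.
        return np.zeros_like(pooled)[None]
    # Min-max normalize to [-1, 1], since MiDaS depth is only relative.
    scaled = 2.0 * (pooled - d_min) / (d_max - d_min) - 1.0
    return scaled[None]  # add a channel axis


def unet_input(latents: np.ndarray, depth: np.ndarray) -> np.ndarray:
    """Hypothetical helper: concatenate (4, h, w) latents with the depth channel -> (5, h, w)."""
    cond = prepare_depth_condition(depth)
    expected = (LATENT_CHANNELS,) + cond.shape[1:]
    if latents.shape != expected:
        raise ValueError(f"expected latents of shape {expected}, got {latents.shape}")
    return np.concatenate([latents, cond], axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    depth_map = rng.random((512, 512))              # stand-in for a MiDaS prediction
    latents = rng.standard_normal((4, 64, 64))      # stand-in for noisy latents
    print(unet_input(latents, depth_map).shape)     # (5, 64, 64)
```

For a 512×512 image, the latents are 4×64×64 and the network input becomes 5×64×64; only the first layer needs to accept the additional channel.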

- Developed by: Robin Rombach, Patrick Esser

- Model type: Diffusion-based text-to-image generation model

- Language(s): English

- License: CreativeML Open RAIL++-M License

- Model Description: This is a model that can be used to generate and modify images based on text prompts. It is a Latent Diffusion Model that uses a fixed, pretrained text encoder (OpenCLIP-ViT/H).

- Resources for more information: GitHub Repository.

Intended use

See the stability-ai/stable-diffusion repository for details on direct use, misuse and malicious use, out-of-scope use, limitations, and bias.