Readme

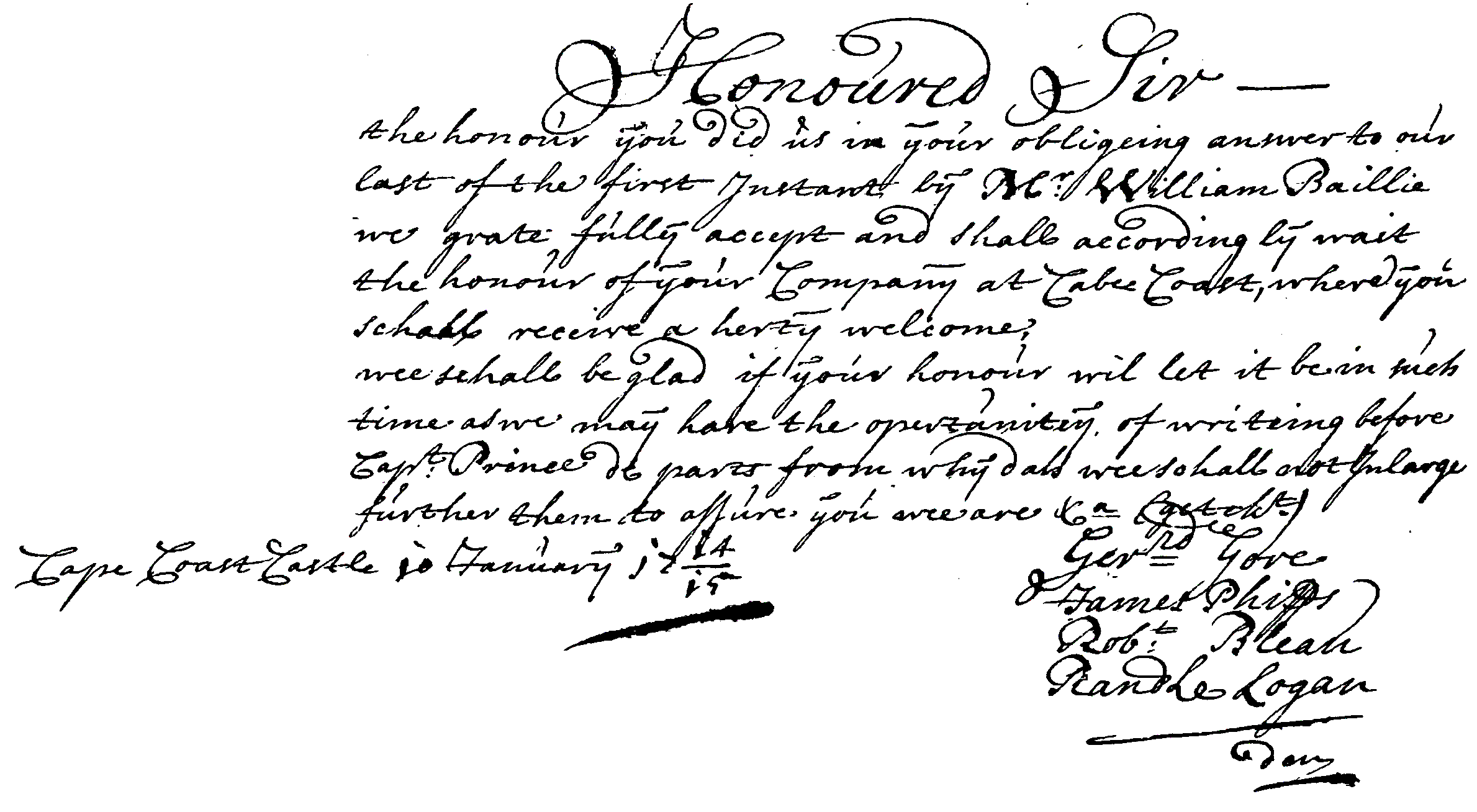

Weights from: https://huggingface.co/stabilityai/stable-diffusion-2, fp32 version

Code for the demo: https://github.com/chenxwh/cog-stable-diffusion/tree/sd-v2

TEST version!

This stable-diffusion-2 model was resumed from stable-diffusion-2-base (512-base-ema.ckpt) and trained for 150k steps using a v-objective on the same dataset. Training was then resumed for another 140k steps on 768x768 images.
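For readers unfamiliar with the term, here is a minimal sketch of what a v-objective target looks like, assuming it refers to the v-prediction parameterization with a variance-preserving schedule (`alpha**2 + sigma**2 == 1`). The function names and the toy values are illustrative only and are not taken from the training code.

```python
import math


def noisy_sample(x0, eps, alpha, sigma):
    """Forward diffusion: x_t = alpha * x0 + sigma * eps (elementwise)."""
    return [alpha * x + sigma * e for x, e in zip(x0, eps)]


def v_target(x0, eps, alpha, sigma):
    """v-prediction target: v = alpha * eps - sigma * x0 (elementwise)."""
    return [alpha * e - sigma * x for x, e in zip(x0, eps)]


def recover_x0(x_t, v, alpha, sigma):
    """Invert the parameterization: x0 = alpha * x_t - sigma * v.

    This identity relies on alpha**2 + sigma**2 == 1.
    """
    return [alpha * xt - sigma * vi for xt, vi in zip(x_t, v)]


# Toy example: an angle phi defines alpha = cos(phi), sigma = sin(phi).
phi = 0.3
alpha, sigma = math.cos(phi), math.sin(phi)
x0 = [0.5, -1.0]   # stand-in for clean latents
eps = [0.2, 0.7]   # stand-in for Gaussian noise

x_t = noisy_sample(x0, eps, alpha, sigma)
v = v_target(x0, eps, alpha, sigma)
x0_back = recover_x0(x_t, v, alpha, sigma)
```

With this parameterization the network is trained to predict `v` rather than the noise `eps`; the clean sample can still be recovered from the noisy sample and `v`, as `recover_x0` shows.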

Compared to the mainline stable-diffusion (https://replicate.com/stability-ai/stable-diffusion), the differences are:

- Only the DDIM, K_EULER, and DPMSolverMultistep schedulers seem to work properly
- The image inpainting pipeline has been removed
- The default size is set to 768x768
- Fewer outputs (`num_output`) are allowed per run so that generation fits on a V100
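As a rough illustration of these differences, the sketch below loads the same weights with the `diffusers` library and restricts the scheduler choice to the three names listed above. This is not the demo's own code: the mapping from `K_EULER` to `EulerDiscreteScheduler`, the helper names, and the defaults are assumptions made for the example.

```python
# Scheduler names from the list above, mapped to diffusers class names.
# The K_EULER -> EulerDiscreteScheduler mapping is an assumption.
SCHEDULER_CLASS_NAMES = {
    "DDIM": "DDIMScheduler",
    "K_EULER": "EulerDiscreteScheduler",
    "DPMSolverMultistep": "DPMSolverMultistepScheduler",
}

MODEL_ID = "stabilityai/stable-diffusion-2"


def scheduler_class_name(name):
    """Return the diffusers class name for a supported scheduler name."""
    if name not in SCHEDULER_CLASS_NAMES:
        supported = ", ".join(sorted(SCHEDULER_CLASS_NAMES))
        raise ValueError(f"Unsupported scheduler {name!r}; choose one of: {supported}")
    return SCHEDULER_CLASS_NAMES[name]


def load_pipeline(scheduler="DPMSolverMultistep", model_id=MODEL_ID):
    """Load the fp32 pipeline with the chosen scheduler (hypothetical helper)."""
    # Heavy imports are kept local so the helpers above work without a GPU stack.
    import torch
    import diffusers
    from diffusers import StableDiffusionPipeline

    scheduler_cls = getattr(diffusers, scheduler_class_name(scheduler))
    sched = scheduler_cls.from_pretrained(model_id, subfolder="scheduler")
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id, scheduler=sched, torch_dtype=torch.float32
    )
    return pipe.to("cuda")


def generate(pipe, prompt, width=768, height=768, steps=50):
    """Generate one image at the 768x768 default size."""
    result = pipe(prompt, width=width, height=height, num_inference_steps=steps)
    return result.images[0]
```

A typical call would be `generate(load_pipeline("K_EULER"), "a photo of an astronaut riding a horse")`, which needs a CUDA GPU and downloads the weights on first use.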