How to use open source language models

Table of contents

Let's cover the basics of how to use open source language models by exploring the important language model parameters, and how they work.

To work through these examples, you can use a language model on Replicate, like meta/llama-3.1-405b-instruct.

- prompt

- tokens

- context window

- system prompt

- temperature

- top_p

- top_k

- minimum and maximum tokens

- stop sequences

- frequency penalty

Prompt

The most important parameter for language models is the prompt. The prompt is the primary input instruction for the language model. When you feed the prompt to the language model, the language model responds with what it thinks is the most-likely next word.

Try out some of your own prompts below.

Tell me a story about cats!

Tokens

Tokens are the foundational unit of language model inputs and outputs. Language models don't see words or characters like you and I -- they see tokens.

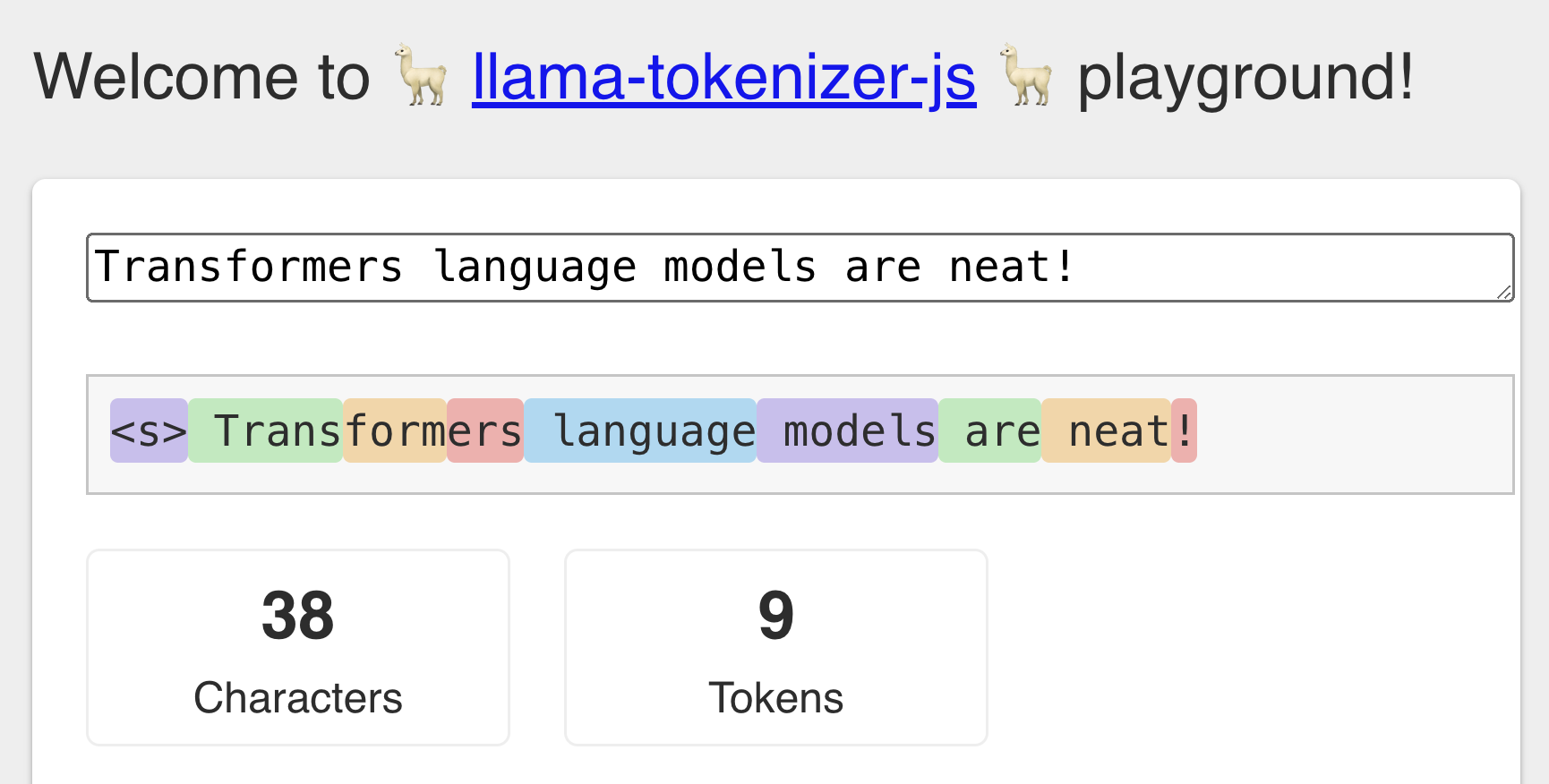

For example, the sentence of "Transformers language models are neat!" actually is broken down into 9 tokens: <s> Trans form ers language models are neat and !.

Each language model has a distinct vocabulary of tokens, so the exact number of tokens will vary from model to model. For Meta's Llama2 models, the vocabulary consists of 32,000 possible tokens. A token vocabulary is a map of text string to integer id value.

As a rule of thumb, tokens are typically around 4 characters long. But this isn’t always the case, sometimes a single character can count as an entire token.

Below you can visualize how the Llama2 tokenizer chunks words into tokens. You can also play with this yourself on the llama-tokenizer-js playground. You'll also notice that this tool adds a <s> tokento the beginning of the list of tokens. This this is a special "beginning of sentence token" or bos_token, and it is how Llama2 understands that this is the beginning of a prompt. You don't need to include this in your prompt, it will automatically be included by the model tokenizer, but you will occasionally see it mentioned when reading about Llama2

Context window

Language models typically have a limited set of context, meaning they can only handle so many tokens before they start forgetting text they have previously seen or generated. This limit of tokens is called the context window.

Different language models have different sized context windows. For example the Llama2 model has a context window of 4,096 tokens or about 12 pages of text. The leading-edge proprietary models like GPT-4-Turbo have a context window of 128,000 tokens, or about 300 pages of text.

It's important to understand the context window of the model you're using, and how it impacts your use case. For example if you're using Llama2, you won't be able to ingest an entire book and answer questions; you'll need to use additional strategies like retrieval augmented generation. While GPT-4-Turbo can read an entire book without skipping a beat.

System prompt

The system prompt is a secondary prompt that you can use with instruction-tuned language models to to define the desired "behavior" or "personality" of the language model response.

For example, if you want the language model to only respond with haikus, you can set the system prompt to Only respond with haikus.

It's a beautiful day in the neighborhood, would you be my neighbor? system_prompt: Only respond with haikus.

System prompts are great for defining the "character" of your language model response. For example, this is what happens when we set our system prompt to be Yarr matey! respond as if ye are a pirate.

Describe the free energy principle system_prompt: Yarr matey! respond as if ye are a pirate.

Temperature

The temperature parameter is used to set the randomness of a language model response, by scaling its probability distribution over tokens.

When the temperature is set to 0.01, the language model will always respond with the most likely token.

When the temperature is set to 1.0, the language model's probability distribution is used as-is, without any scaling. While the most likely token is still the most probable choice, there's room for diversity, as the model doesn't always select it.

As the temperature increases above 1.0, the probability distribution is scaled to increase entropy, meaning that lower-probability tokens gain a higher chance of being selected. This leads to more surprising and varied outputs, as the model starts to explore less likely options. High temperatures, such as 5.0, can result in highly random and creative responses, but they may also reduce coherence and relevance.

In summary, a lower temperature (<1.0) steers the model towards more deterministic and predictable behavior. A temperature of 1.0 offers a balance, using the model's learned probability distribution to guide token selection. Higher temperatures (>1.0) increase randomness and creativity in the outputs.

Experimenting with different temperature settings can help you find the optimal balance for your specific use case, depending on whether you prioritize predictability or creativity.

Write me lyrics for a song about the free energy principle

temperature

- defaultValue: 0.7

- min: 0.1

- max: 5

- step: 0.1top_p

The top_p parameter is used to control the diversity of a language model's responses by adjusting its word selection process.

When top_p is set to a lower value, like 0.1, the language model restricts its choices to a very small set of the most likely tokens. This leads to more predictable and conservative outputs, as it only considers the top 10% of the most probable words in each step.

As top_p increases, the model includes a broader range of words in its selection pool, allowing for more varied and creative responses. A top_p value of 0.9 or higher enables the model to consider a wider array of possibilities, picking from the top 90% of probable words. This setting is useful when you want the language model to generate more diverse and less constrained text.

So, as a general guideline: if you're aiming for more consistent and focused outputs, use a lower top_p value. If you're seeking creativity and a wider range of responses, opt for a higher top_p.

top_p is also influenced by both the temperature and top_k parameters. Try playing with all of the knobs in the example below.

Explain Docker but pretend to be a RuneScape wizard

top_p

- defaultValue: 0.95

- min: 0.0

- max: 1.0

- step: 0.01top_k

- defaultValue: -1

- min: -1

- max: 50

- step: 1.0temperature

- defaultValue: 0.7

- min: 0.1

- max: 5

- step: 0.1top_k

The top_k parameter is a method used to refine the selection process of a language model when it generates text. It limits the number of words or tokens the model considers at each step of the generation process.

When top_k is set to a specific value, say 10, the model only considers the top 10 most likely next words or tokens at each step. It essentially ignores all other words in its vocabulary, regardless of their probability. This restriction helps to focus the model's choices and can lead to more predictable and relevant text.

If top_k is set to a very high number, the model's behavior begins to resemble more unrestricted, probabilistic generation, as it's allowed to consider a wide range of possible words. Conversely, a very low top_k value (like 1 or 2) makes the model's output highly deterministic and less varied.

Therefore, top_k is a key parameter for balancing creativity and coherence in text generation. A lower top_k leads to safer, more predictable text, while a higher top_k allows for more diverse and potentially creative outputs. The ideal top_k value often depends on the specific task and desired output characteristics.

Explain Docker but pretend to be a RuneScape wizard

top_p

- defaultValue: 0.95

- min: 0.0

- max: 1.0

- step: 0.01top_k

- defaultValue: -1

- min: -1

- max: 50

- step: 1.0temperature

- defaultValue: 0.7

- min: 0.1

- max: 5

- step: 0.1Minimum and maximum new tokens

The min_new_tokens and max_new_tokens parameters are used to control the length of the generated output.

min_new_tokens sets the minimum number of new tokens that the model should generate. This is useful when you need to ensure that the output has a certain amount of substance or detail.

max_new_tokens defines the maximum number of tokens the model is allowed to generate. This parameter ensures that the output doesn't exceed a certain length. It's helpful in keeping generated content concise.

Explain Docker but pretend to be a RuneScape wizard

max_new_tokens

- defaultValue: 128

- min: 1

- max: 1024

- step: 1.0min_new_tokens

- defaultValue: -1

- min: -1

- max: 1024

- step: 1.0Stop sequences

These are sequences of tokens that, when generated, will stop the model from generating any more text.

This is useful for controlling the length and relevance of the output. For example, if you set the stop sequence to a period ".", the model will stop generating text once it completes a sentence.

Without a stop sequence the model can continue generating text up to its maximum token limit, or until it reaches a conclusion. This can lead to long or off-topic responses.

Frequency penalty (repetition_penalty)

This parameter controls the amount of repetition in the generated text. It changes the likelihood of repeatedly using the same words or phrases.

With a repetition_penalty of 0, there is no penalty, allowing the model to use words as frequently as it needs. This can sometimes lead to repetitive text, especially in longer outputs.

If you're noticing too much repetition in the model's output, increasing the repetition_penalty can help. A higher setting, generally ranging from 0.1 to 1, imposes a stronger penalty on the recurrence of words. This motivates the model to employ a broader range of vocabulary and to write sentences with greater variation.

Do not set the penalty too high. This can lead the model to avoid relevant terms that are necessary for coherent text.