Explore

prunaai / z-image-turbo

Z-Image Turbo is a super-fast 6B-parameter text-to-image model developed by Tongyi-MAI.

27.4M runs

prunaai / p-image-edit

A sub-1-second, $0.01 multi-image editing model built for production use cases. For image generation, see p-image: https://replicate.com/prunaai/p-image

15.2M runs

vaibhavs10 / incredibly-fast-whisper

whisper-large-v3, incredibly fast, powered by Hugging Face Transformers! 🤗

26.4M runs

jaaari / kokoro-82m

Kokoro v1.0 - text-to-speech (82M params, based on StyleTTS2)

80M runs

Featured models

runwayml/gen-4.5

State-of-the-art video motion quality, prompt adherence and visual fidelity

1.6K runs

recraft-ai/recraft-v4

Recraft's latest image generation model, built around design taste. Strong prompt accuracy, art-directed composition, and integrated text rendering. Fast and cost-efficient at standard resolution.

2.9K runs

Generate videos using xAI's Grok Imagine Video model

59K runs

Latest video model from Pixverse with astonishing physics

5.6K runs

Moonshot AI's latest open model. It unifies vision and text, thinking and non-thinking modes, and single-agent and multi-agent execution into one model

15.8K runs

Google's most intelligent model built for speed with frontier intelligence, superior search, and grounding

216.1K runs

A unified Text-to-Speech demo featuring three powerful modes: Voice, Clone and Design

67.7K runs

Very fast image generation and editing model. Distilled to 4 steps, with sub-second inference for production and near real-time applications.

4.3M runs

openai/gpt-image-1.5

OpenAI's latest image generation model with better instruction following and adherence to prompts

3.3M runs

bytedance/seedream-4.5

Seedream 4.5: Upgraded Bytedance image model with stronger spatial understanding and world knowledge

3.7M runs

Google's state of the art image generation and editing model 🍌🍌

14.9M runs

Compose a song from a prompt or a composition plan

4.7K runs

Official models

Official models are always on, maintained, and have predictable pricing.

google / gemini-3.1-pro

Google's most intelligent model, with improved reasoning and a new medium thinking level

runwayml / gen-4.5

State-of-the-art video motion quality, prompt adherence and visual fidelity

recraft-ai / recraft-v4-pro-svg

Generate detailed SVG vector graphics from text prompts. Recraft V4 Pro's design taste with more geometric detail and finer paths — clean layers, editable output, and scalable to any size.

recraft-ai / recraft-v4-svg

Generate production-ready SVG vector images from text prompts. Recraft V4's design taste applied to vector output — clean geometry, structured layers, and editable paths.

recraft-ai / recraft-v4-pro

Recraft's latest image generation model at ~2048px resolution. Same design taste and prompt accuracy as V4, with higher resolution for print-ready and large-scale work.

recraft-ai / recraft-v4

Recraft's latest image generation model, built around design taste. Strong prompt accuracy, art-directed composition, and integrated text rendering. Fast and cost-efficient at standard resolution.

kwaivgi / kling-v3-video

Kling Video 3.0: Generate cinematic videos up to 15 seconds with multi-shot control, native audio, and improved consistency

kwaivgi / kling-v3-omni-video

Kling Video 3.0 Omni: Unified multimodal video generation with reference images, video editing, native audio, and multi-shot control

kwaivgi / kling-o1

Modify an existing video through natural-language commands, changing subjects, environments, and visual style while preserving the original motion and timing.

xai / grok-imagine-image

SOTA image model from xAI

tencent / hunyuan-3d-3.1

3D models with texture fidelity and geometry precision

bria / video-remove-background

Automatically remove backgrounds from videos, perfect for creating clean, professional content without a green screen.

bria / video-increase-resolution

Upscale videos up to 8K output resolution. Trained on fully licensed and commercially safe data.

topazlabs / dust-and-scratch-v2

Remove dust and scratches from old photos

topazlabs / image-colorization

Image colorization model from Topaz Labs

bytedance / dreamactor-m2.0

Animate any character (humans, cartoons, animals, even non-humans) from a single image and a driving video

minimax / speech-2.8-turbo

Minimax Speech 2.8 Turbo: Turn text into natural, expressive speech with voice cloning, emotion control, and support for 40+ languages

minimax / speech-2.8-hd

Minimax Speech 2.8 HD focuses on high-fidelity audio generation with features like studio-grade quality, flexible emotion control, multilingual support, and voice cloning capabilities

xai / grok-imagine-video

Generate videos using xAI's Grok Imagine Video model

anthropic / claude-opus-4.6

Anthropic's most intelligent model with state-of-the-art coding, reasoning, and agentic capabilities

I want to…

Generate images

Use AI to generate images & photos with an API

Caption videos

Use AI to caption videos with an API

Generate speech

Use AI for text-to-speech or to clone your voice via API

Generate images from a face

Use AI to generate images from a face with an API

Generate videos

Use AI to generate videos with an API

Upscale images with super resolution

Use AI to upscale images with super resolution with an API

Generate music

Use AI to generate music with an API

Edit any image

Use AI to edit any image via API

Transcribe speech to text

Use AI to transcribe speech to text via API

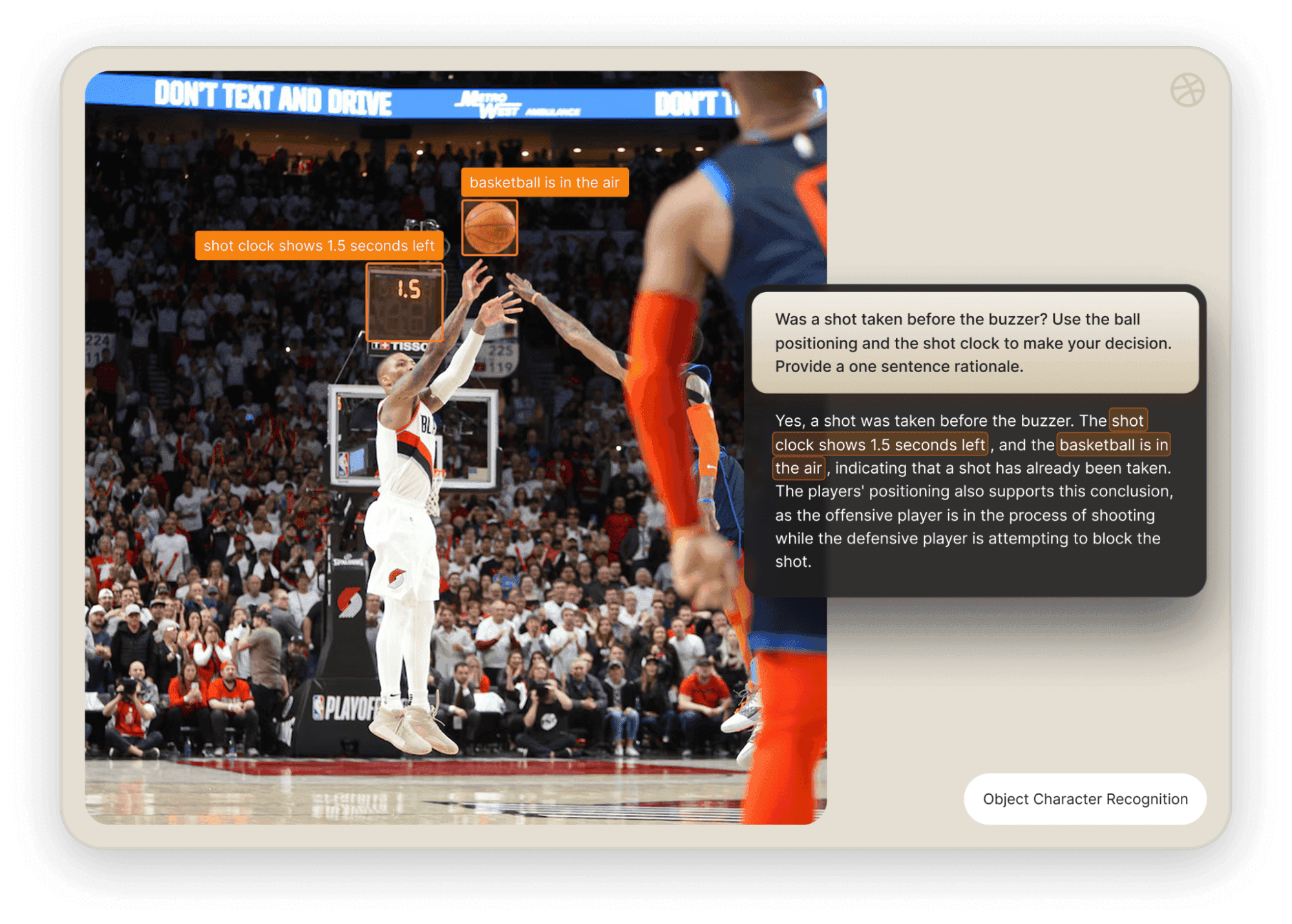

OCR to extract text from images

Use AI for optical character recognition (OCR) to extract text from images via API

Remove backgrounds

Use AI to remove backgrounds from images and videos with an API

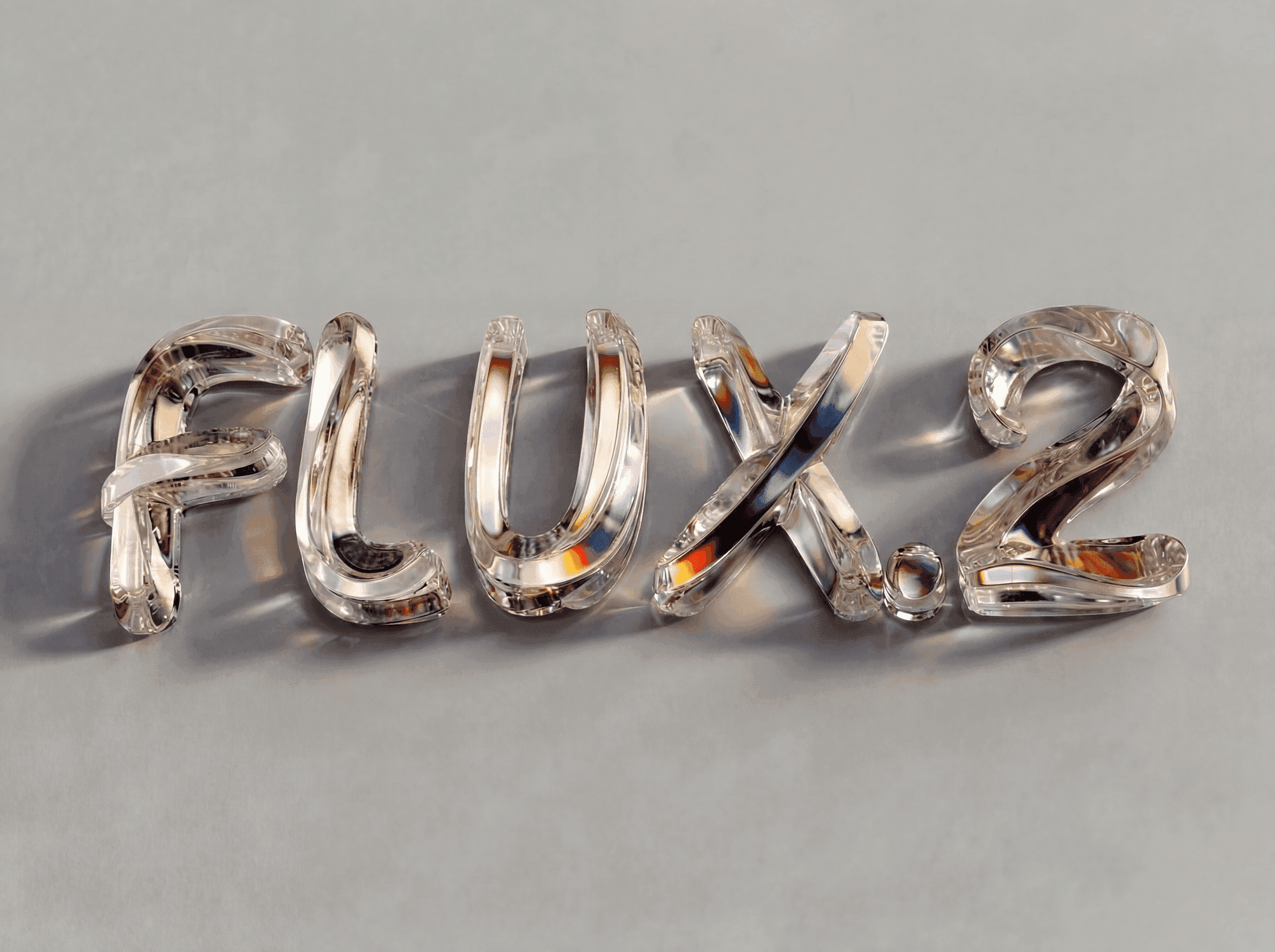

FLUX family of models

FLUX AI models: advanced image generation & editing via API

Restore images

Use AI to restore images via API

Enhance videos

Use AI to enhance videos via API

Detect NSFW content

Detect NSFW content in images and text

Classify text

Classify text by sentiment, topic, intent, or safety

Speaker diarization

Identify speakers from audio and video inputs

Create realistic face swaps

Replace faces across images with natural-looking results.

Turn sketches into images

Transform rough sketches into polished visuals

Generate emojis

Generate custom emojis from text or images

Generate anime-style images and videos

Create anime-style characters, scenes, and animations

Try AI models for free

Try AI models for free: video generation, image generation, upscaling, and photo restoration

Large Language Models (LLMs)

Explore Large Language Models (LLMs) for chat, generation & NLP tasks via API

Generate videos from images

Use AI to generate videos from images with an API

Lipsync videos

Use AI to generate lipsync videos with an API

Create 3D content

Use AI to create 3D content with an API

Vision models

Chat with images for understanding, captioning & detection via API

Control image generation

Use AI to control image generation with an API

Embedding models

Embedding models for AI search and analysis

Edit your videos

Use AI to edit your videos with an API

Object detection and segmentation

Use AI object detection and segmentation models to distinguish objects in images & videos

Official AI models

Official AI models: Always available, stable, and predictably priced

Flux fine-tunes

Flux fine-tunes: build and run custom AI image models via API

Kontext fine-tunes

Kontext fine-tunes: Build custom AI image models with an API

Create songs with voice cloning

Create songs with voice cloning models via API

Media utilities

AI media utilities: auto-caption, watermark, frame extraction & more via API

Qwen-Image fine-tunes

Browse the diverse range of Qwen-Image fine-tunes the community has custom-trained on Replicate.

WAN family of models

WAN family of models: powerful image-to-video & text-to-video models

Caption images

Use AI to caption images with an API