Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model to recognize and generate new concepts using a small set of example images, for specific styles, characters, or objects. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

black-forest-labs / flux-kontext-max

A premium text-based image editing model that delivers maximum performance and improved typography generation for transforming images through natural language prompts

black-forest-labs / flux-kontext-pro

A state-of-the-art text-based image editing model that delivers high-quality outputs with excellent prompt following and consistent results for transforming images through natural language

leonardoai / phoenix-1.0

Leonardo AI’s first foundational model produces images up to 5 megapixels (fast, quality and ultra modes)

leonardoai / motion-2.0

Create 5s 480p videos from a text prompt

google / imagen-4

Preview of Google's Imagen-4 flagship model. As a preview, this model might change.

replicate / fast-flux-trainer

Train subjects or styles faster than ever

anthropic / claude-4-sonnet

Claude Sonnet 4 is a significant upgrade to 3.7, delivering superior coding and reasoning while responding more precisely to your instructions

pixverse / pixverse-v4.5

Quickly make 5s or 8s videos at 540p, 720p or 1080p. It has enhanced motion, prompt coherence and handles complex actions well.

prunaai / vace-14b

This is VACE-14B model optimised with pruna ai. Wan2.1 VACE is an all-in-one model for video creation and editing.

Official models

Official models are always on, maintained, and have predictable pricing.

I want to…

Generate images

Models that generate images from text prompts

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest quality open-source video generation model.

Generate videos

Models that create and edit videos

Caption images

Models that generate text from images

Transcribe speech

Models that convert speech to text

Caption videos

Models that generate text from videos

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Edit images

Tools for manipulating images.

Use a face to make images

Make realistic images of people instantly

Get embeddings

Models that generate embeddings from inputs

Generate speech

Convert text to speech

Generate music

Models to generate and modify music

Generate text

Models that can understand and generate text

Use handy tools

Toolbelt-type models for videos and images.

Upscale images

Upscaling models that create high-quality images from low-quality images

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Use official models

Official models are always on, maintained, and have predictable pricing.

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Remove backgrounds

Models that remove backgrounds from images and videos

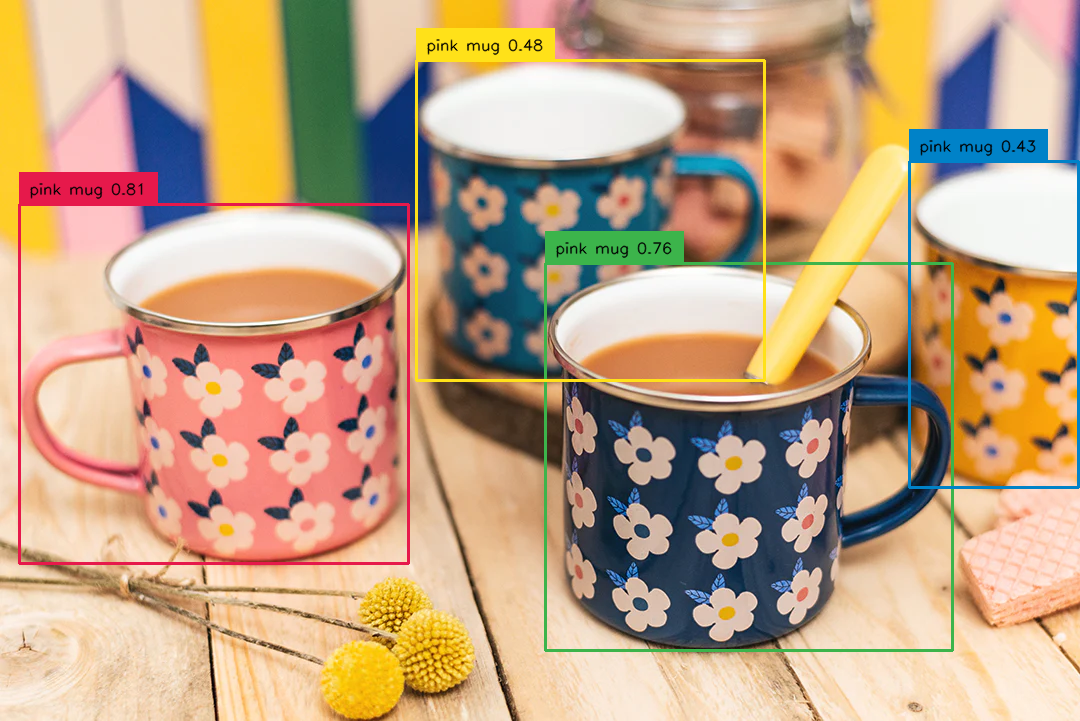

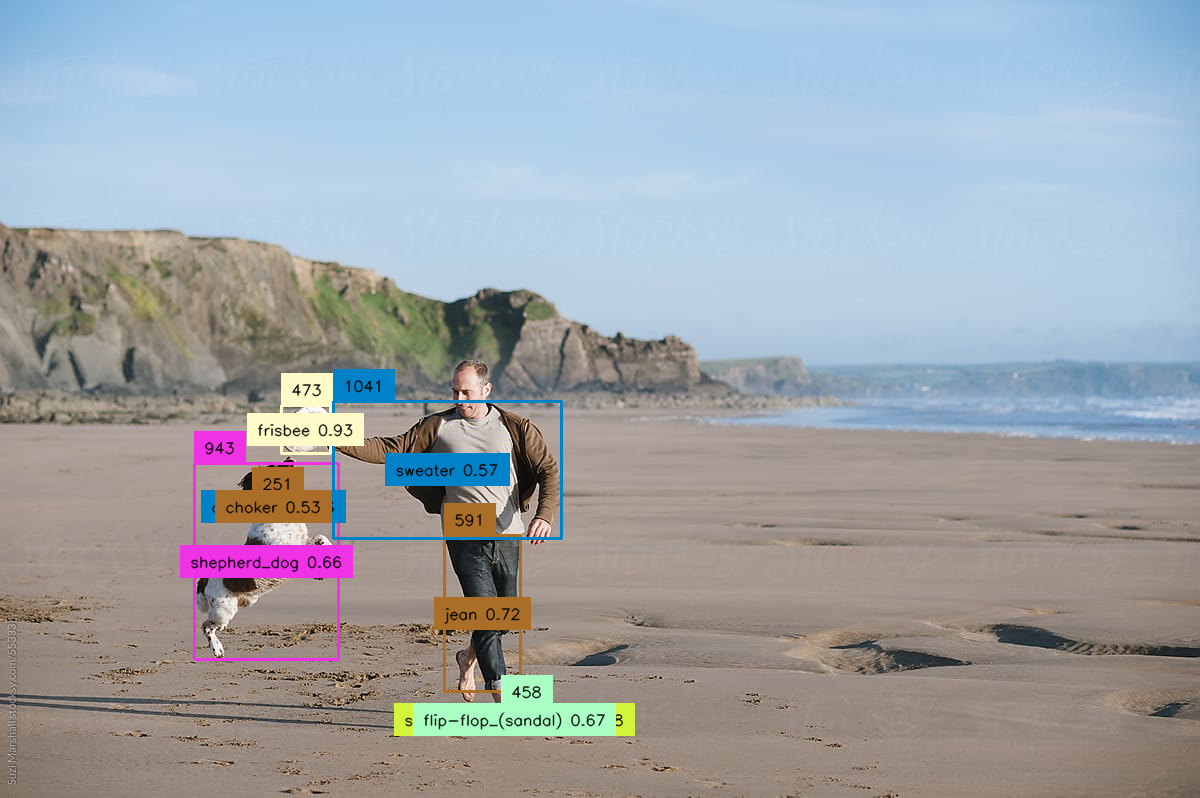

Detect objects

Models that detect or segment objects in images and videos.

Sing with voices

Voice-to-voice cloning and musical prosody

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Chat with images

Ask language models about images

Extract text from images

Optical character recognition (OCR) and text extraction

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

multilingual-e5-large: A multi-language text embedding model

Practical face restoration algorithm for *old photos* or *AI-generated faces*

Latest models

Create your own Realistic Voice Cloning (RVC v2) dataset using a YouTube link

Simple version of https://huggingface.co/teknium/OpenHermes-2-Mistral-7B

Very fast img2img for a collaboration with AI in real time

Source: NousResearch/Obsidian-3B-V0.5 ✦ Worlds smallest multi-modal LLM

Source: PocketDoc/Dans-AdventurousWinds-Mk2-7b ✦ Quant: TheBloke/Dans-AdventurousWinds-Mk2-7B-AWQ ✦ This model is proficient in crafting text-based adventure games

Source: Intel/neural-chat-7b-v3-1 ✦ Quant: TheBloke/neural-chat-7B-v3-1-AWQ ✦ Fine-tuned model based on mistralai/Mistral-7B-v0.1

Animate Your Personalized Text-to-Image Diffusion Models with SDXL and LCM

Text to video diffusion model with variable length frame conditioning for infinite length video

Dreamshaper-7 img2img with LCM LoRA for faster inference

An auto-regressive causal LM created by combining 2x finetuned Llama-2 70B into one.

RealvisXL-v2.0 with LCM LoRA - requires fewer steps (4 to 8 instead of the original 40 to 50)

Take a list of image URLs as frames and output a video

Auto fuse a user's face onto the template image, with a similar appearance to the user

Create song covers with any RVC v2 trained AI voice from audio files.

A combination of ip_adapter SDv1.5 and mediapipe-face to inpaint a face

The Yi series models are large language models trained from scratch by developers at 01.AI.

The Yi series models are large language models trained from scratch by developers at 01.AI.

The Yi series models are large language models trained from scratch by developers at 01.AI.

Custom improvements like a custom callback to enhance the inference | It's a WIP and it may causes some wrong outputs

An extremely fast all-in-one model to use LCM with SDXL, ControlNet and custom LoRA url's!

Create variations of an uploaded image. Please see README for more details

Source: meta-llama/Llama-2-7b-chat-hf ✦ Quant: TheBloke/Llama-2-7B-Chat-AWQ ✦ Intended for assistant-like chat

Source: meta-math/MetaMath-Mistral-7B ✦ Quant: TheBloke/MetaMath-Mistral-7B-AWQ ✦ Bootstrap Your Own Mathematical Questions for Large Language Models

Source: Severian/ANIMA-Phi-Neptune-Mistral-7B ✦ Quant: TheBloke/ANIMA-Phi-Neptune-Mistral-7B-AWQ ✦ Biomimicry Enhanced LLM

Animate Your Personalized Text-to-Image Diffusion Models (Long boot times!)

Latent Consistency Model (LCM): SDXL, distills the original model into a version that requires fewer steps (4 to 8 instead of the original 25 to 50)

Generate high-quality images faster with Latent Consistency Models (LCM), a novel approach that distills the original model, reducing the steps required from 25-50 to just 4-8 in Stable Diffusion (SDXL) image generation.

The image prompt adapter is designed to enable a pretrained text-to-image diffusion model to generate SDXL images with an image prompt