Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model on a small set of example images to recognize and generate new concepts, such as specific styles, characters, or objects. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

google / imagen-4

Preview of Google's Imagen-4 flagship model. As a preview, this model might change.

replicate / fast-flux-trainer

Train subjects or styles faster than ever

google / lyria-2

Lyria 2 is a music generation model that produces 48kHz stereo audio through text-based prompts

anthropic / claude-4-sonnet

Claude Sonnet 4 is a significant upgrade to 3.7, delivering superior coding and reasoning while responding more precisely to your instructions

pixverse / pixverse-v4.5

Quickly make 5s or 8s videos at 540p, 720p or 1080p. It has enhanced motion and prompt coherence, and handles complex actions well.

openai / gpt-4.1

OpenAI's flagship GPT model for complex tasks.

prunaai / vace-14b

This is the VACE-14B model, optimized with Pruna AI. Wan2.1 VACE is an all-in-one model for video creation and editing.

minimax / speech-02-hd

Text-to-Audio (T2A) that offers voice synthesis, emotional expression, and multilingual capabilities. Optimized for high-fidelity applications like voiceovers and audiobooks.

ideogram-ai / ideogram-v3-turbo

Turbo is the fastest and cheapest Ideogram v3. v3 creates images with stunning realism, creative designs, and consistent styles

Official models

Official models are always on, maintained, and have predictable pricing.

I want to…

Generate images

Models that generate images from text prompts

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest-quality open-source video generation model.

Generate videos

Models that create and edit videos

Caption images

Models that generate text from images

Transcribe speech

Models that convert speech to text

Edit images

Tools for manipulating images.

Use a face to make images

Make realistic images of people instantly

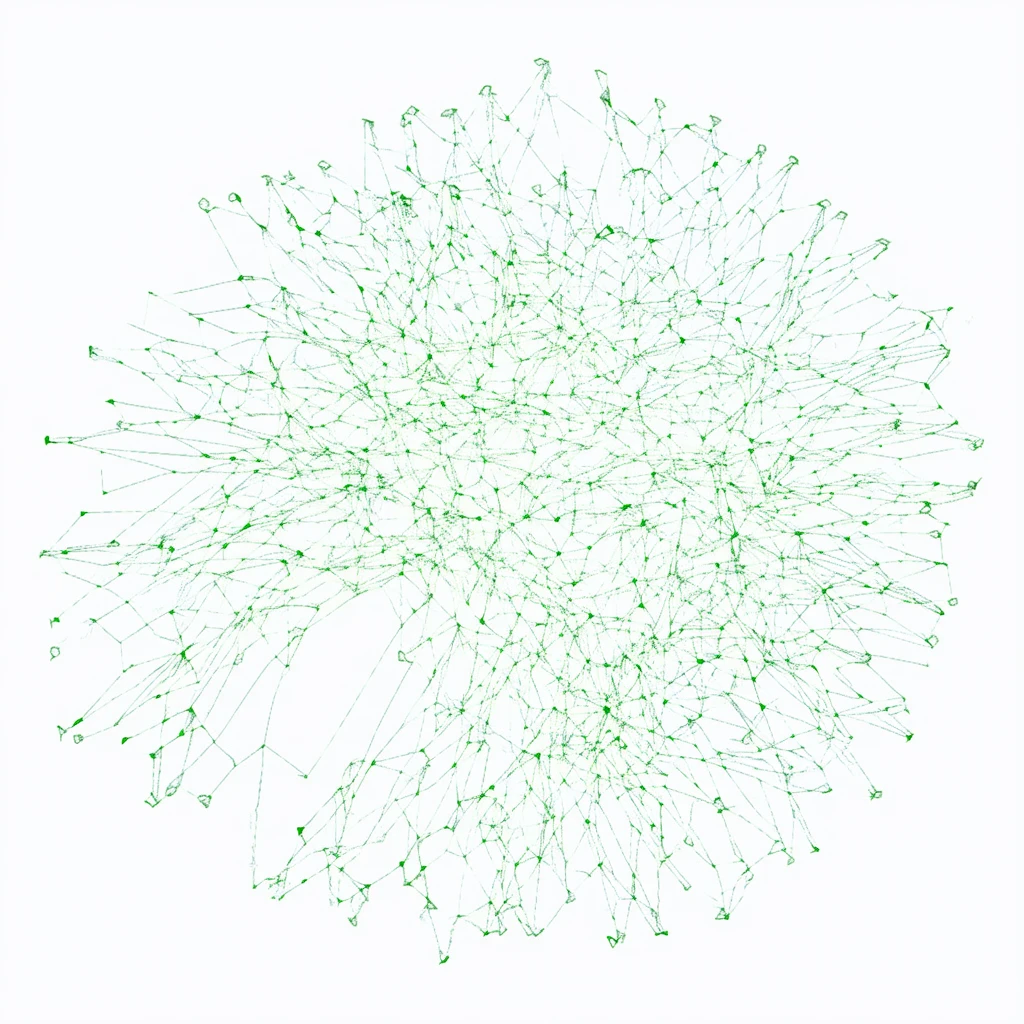

Get embeddings

Models that generate embeddings from inputs

Generate speech

Convert text to speech

Generate music

Models to generate and modify music

Generate text

Models that can understand and generate text

Use handy tools

Toolbelt-type models for videos and images.

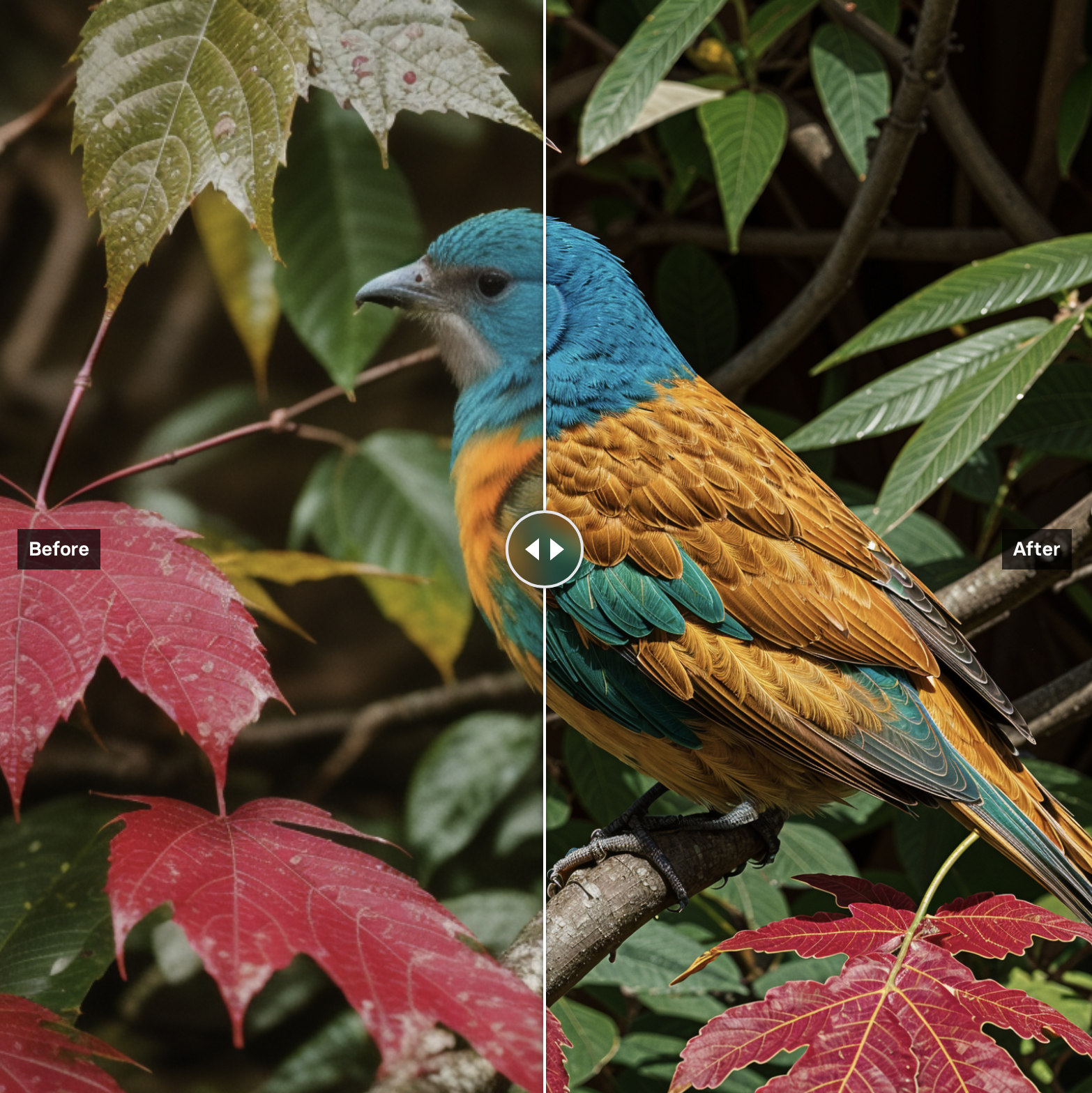

Upscale images

Upscaling models that create high-quality images from low-quality images

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Caption videos

Models that generate text from videos

Use official models

Official models are always on, maintained, and have predictable pricing.

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Remove backgrounds

Models that remove backgrounds from images and videos

Detect objects

Models that detect or segment objects in images and videos.

Sing with voices

Voice-to-voice cloning and musical prosody

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Chat with images

Ask language models about images

Extract text from images

Optical character recognition (OCR) and text extraction

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

multilingual-e5-large: A multi-language text embedding model

whisper-large-v3, incredibly fast, powered by Hugging Face Transformers! 🤗

Practical face restoration algorithm for *old photos* or *AI-generated faces*

High resolution image Upscaler and Enhancer. Use at ClarityAI.co. A free Magnific alternative. Twitter/X: @philz1337x

Latest models

Generates realistic talking face animations from a portrait image and audio using the CVPR 2025 Sonic model

Transform your portrait photos into any style or setting while preserving your facial identity

Wan 2.1 1.3b Video to Video. Wan is a powerful visual generation model developed by Tongyi Lab of Alibaba Group

Easily create video datasets with auto-captioning for Hunyuan-Video LoRA finetuning

Cost-optimized MMAudio V2 (T4 GPU): Add sound to video using this version running on T4 hardware for lower cost. Synthesizes high-quality audio from video content.

Add sound to video using the MMAudio V2 model. An advanced AI model that synthesizes high-quality audio from video content, enabling seamless video-to-audio transformation.

A redux adapter trained from scratch on Flex.1-alpha, that also works with FLUX.1-dev

Indic Parler-TTS Pretrained is a multilingual Indic extension of Parler-TTS Mini.

SANA-Sprint: One-Step Diffusion with Continuous-Time Consistency Distillation

The fastest image generation model tailored for fine-tuned use

Open-weight inpainting model for editing and extending images. Guidance-distilled from FLUX.1 Fill [pro].

FLUX1.1 [pro] in ultra and raw modes. Images are up to 4 megapixels. Use raw mode for realism.

Faster, better FLUX Pro. Text-to-image model with excellent image quality, prompt adherence, and output diversity.

State-of-the-art image generation with top-of-the-line prompt following, visual quality, image detail and output diversity.

Professional inpainting and outpainting model with state-of-the-art performance. Edit or extend images with natural, seamless results.

Professional edge-guided image generation. Control structure and composition using Canny edge detection

Professional depth-aware image generation. Edit images while preserving spatial relationships.

For the paper "Structured 3D Latents for Scalable and Versatile 3D Generation".

The Flux.1-dev-Controlnet-Upscaler model by www.androcoders.in

Accelerated inference for Wan 2.1 14B text to video, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.

Accelerated inference for Wan 2.1 14B text to video with high resolution, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.

Accelerated inference for Wan 2.1 14B image to video, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.

Accelerated inference for Wan 2.1 14B image to video with high resolution, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.

DeepSeek-V3-0324 is the leading non-reasoning model, a milestone for open source

Best-in-class clothing virtual try on in the wild (non-commercial use only)

Recraft V3 (code-named red_panda) is a text-to-image model that can generate long text and images in a wide range of styles. As of today, it is SOTA in image generation, as shown by the Text-to-Image Benchmark by Artificial Analysis

Recraft V3 SVG (code-named red_panda) is a text-to-image model that can generate high-quality SVG images, including logotypes and icons. The model supports a wide range of styles.

Generates MagicaVoxel VOX models, using flux dev + hunyuan3d-2. Can generate high detail and low detail models at varying resolutions.

epicrealism-naturalsinfinal-SD1.5-by-epinikion + perfectdeliberate by Desync + More Details by Lykon