Explore

Featured models

pixverse / pixverse-v4

Quickly generate smooth 5s or 8s videos at 540p, 720p or 1080p

minimax / speech-02-hd

Text-to-Audio (T2A) that offers voice synthesis, emotional expression, and multilingual capabilities. Optimized for high-fidelity applications like voiceovers and audiobooks.

minimax / voice-cloning

Clone voices to use with Minimax's speech-02-hd and speech-02-turbo

zsxkib / step1x-edit

✍️Step1X-Edit by stepfun-ai, Edit an image using text prompt📸

ideogram-ai / ideogram-v3-balanced

Balance speed, quality and cost. Ideogram v3 creates images with stunning realism, creative designs, and consistent styles

ideogram-ai / ideogram-v3-turbo

Turbo is the fastest and cheapest Ideogram v3. v3 creates images with stunning realism, creative designs, and consistent styles

ideogram-ai / ideogram-v3-quality

The highest quality Ideogram v3 model. v3 creates images with stunning realism, creative designs, and consistent styles

kwaivgi / kling-v2.0

Generate 5s and 10s videos in 720p resolution

nvidia / sana-sprint-1.6b

SANA-Sprint: One-Step Diffusion with Continuous-Time Consistency Distillation

Fine-tune FLUX

Customize FLUX.1 [dev] with Ostris's AI Toolkit on Replicate. Train the model to recognize and generate new concepts using a small set of example images, for specific styles, characters, or objects. (Generated with davisbrown/flux-half-illustration.)

I want to…

Generate images

Models that generate images from text prompts

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest quality open-source video generation model.

Generate videos

Models that create and edit videos

Caption images

Models that generate text from images

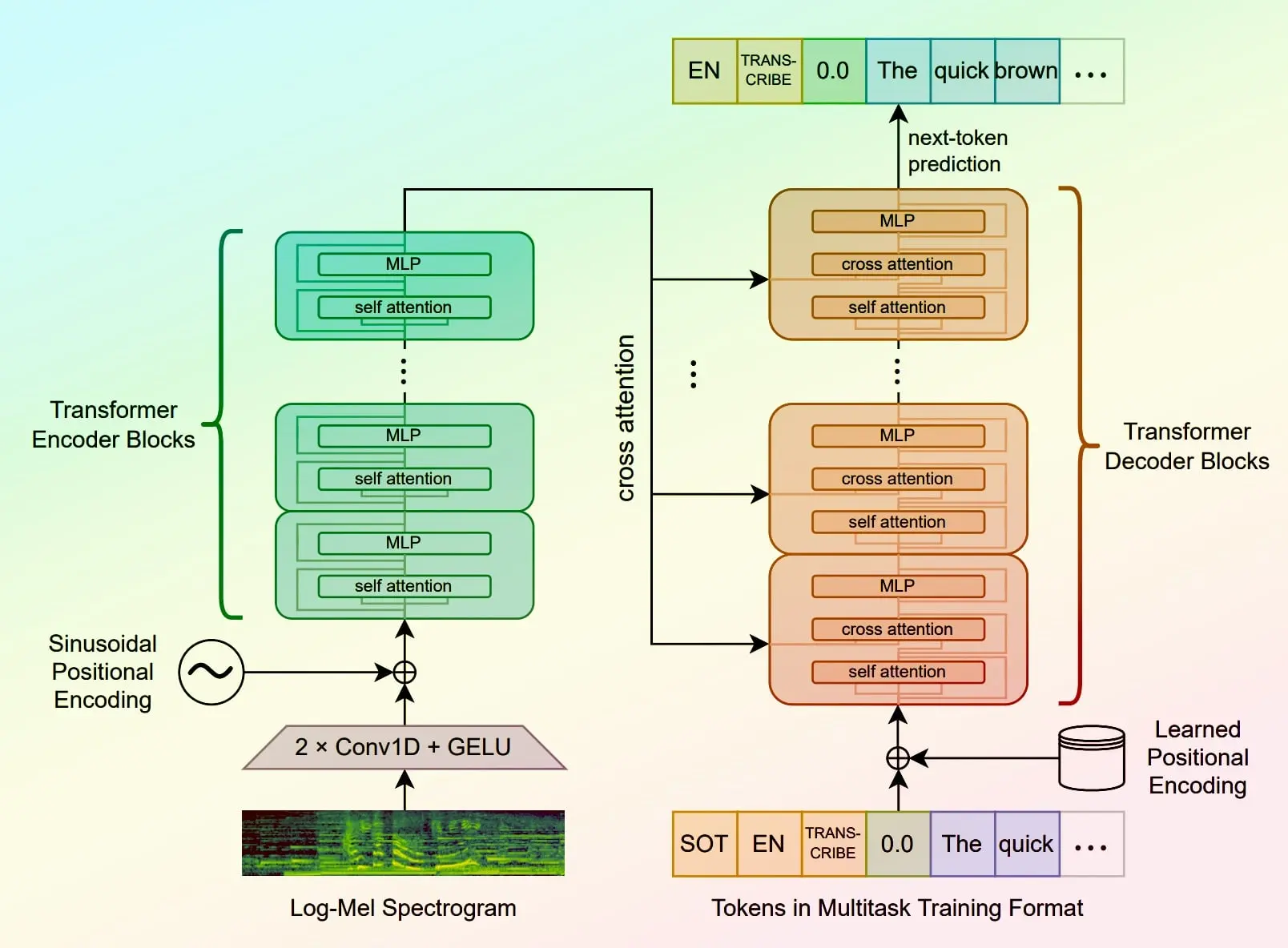

Transcribe speech

Models that convert speech to text

Generate speech

Convert text to speech

Use handy tools

Toolbelt-type models for videos and images.

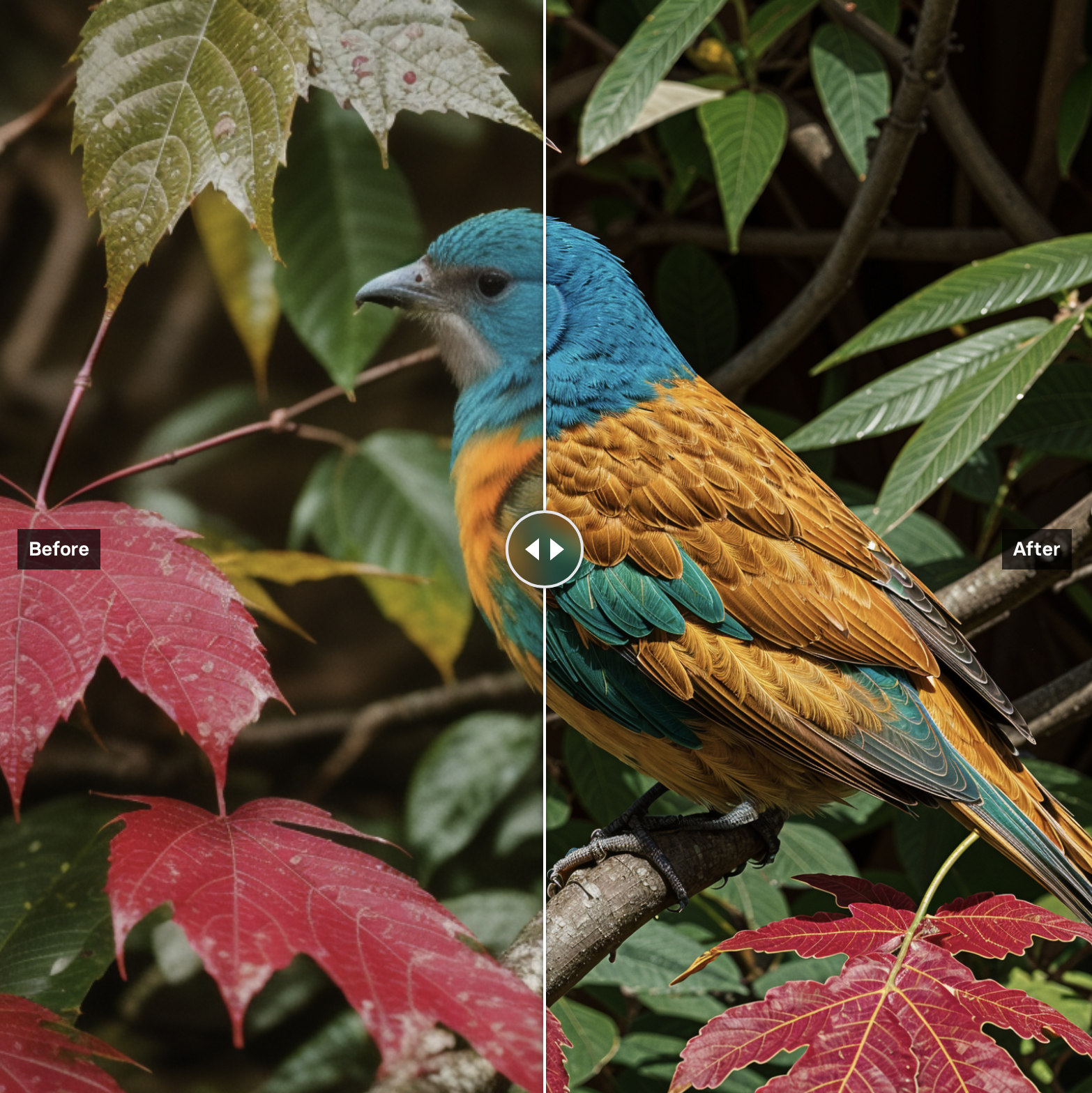

Upscale images

Upscaling models that create high-quality images from low-quality images

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Use a face to make images

Make realistic images of people instantly

Edit images

Tools for manipulating images.

Caption videos

Models that generate text from videos

Generate text

Models that can understand and generate text

Use official models

Official models are always on, maintained, and have predictable pricing.

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Remove backgrounds

Models that remove backgrounds from images and videos

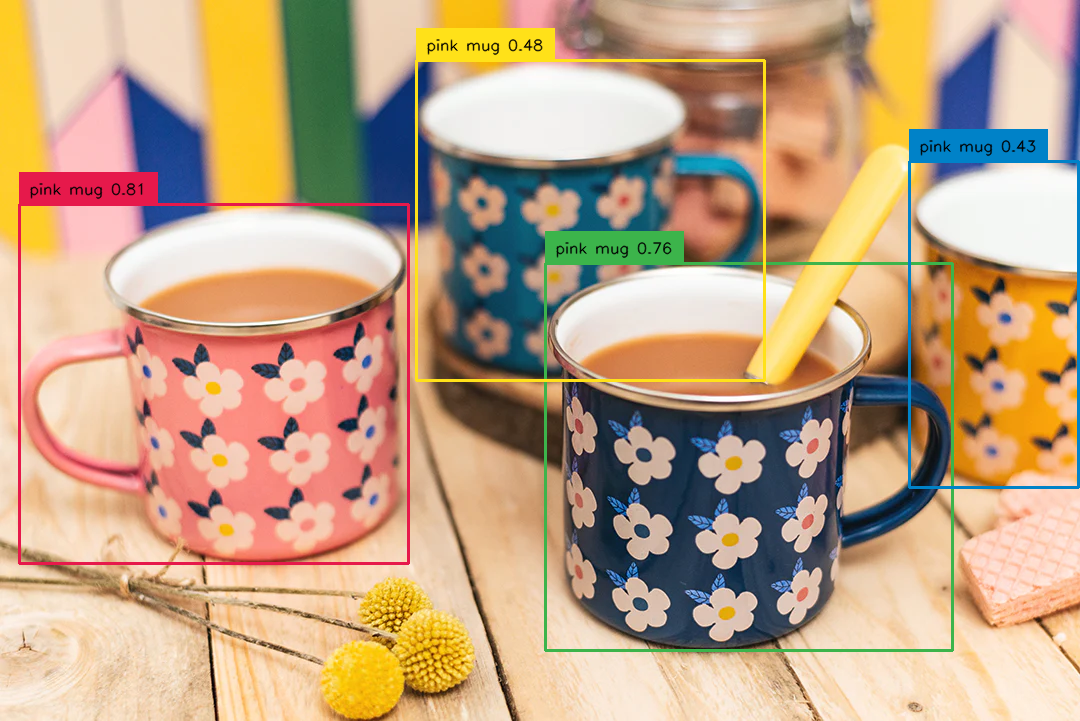

Detect objects

Models that detect or segment objects in images and videos.

Generate music

Models to generate and modify music

Sing with voices

Voice-to-voice cloning and musical prosody

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Chat with images

Ask language models about images

Extract text from images

Optical character recognition (OCR) and text extraction

Get embeddings

Models that generate embeddings from inputs

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

High resolution image Upscaler and Enhancer. Use at ClarityAI.co. A free Magnific alternative. Twitter/X: @philz1337x

Practical face restoration algorithm for *old photos* or *AI-generated faces*

Practical face restoration algorithm for *old photos* or *AI-generated faces*

Latest models

ControlNet for FLUX.1-dev model jointly released by InstantX and Shakker Labs

InternLM-XComposer-2.5: A Versatile Large Vision Language Model Supporting Long-Contextual Input and Output

Virtual try-on using Stable Diffusion and IP-Adapter

replace background with Stable Diffusion and ControlNet

Join the Granite community where you can find numerous recipe workbooks to help you get started with a wide variety of use cases using this model. https://github.com/ibm-granite-community

Turn text from the law in Spanish to structured data to feed support building a knowledge graph

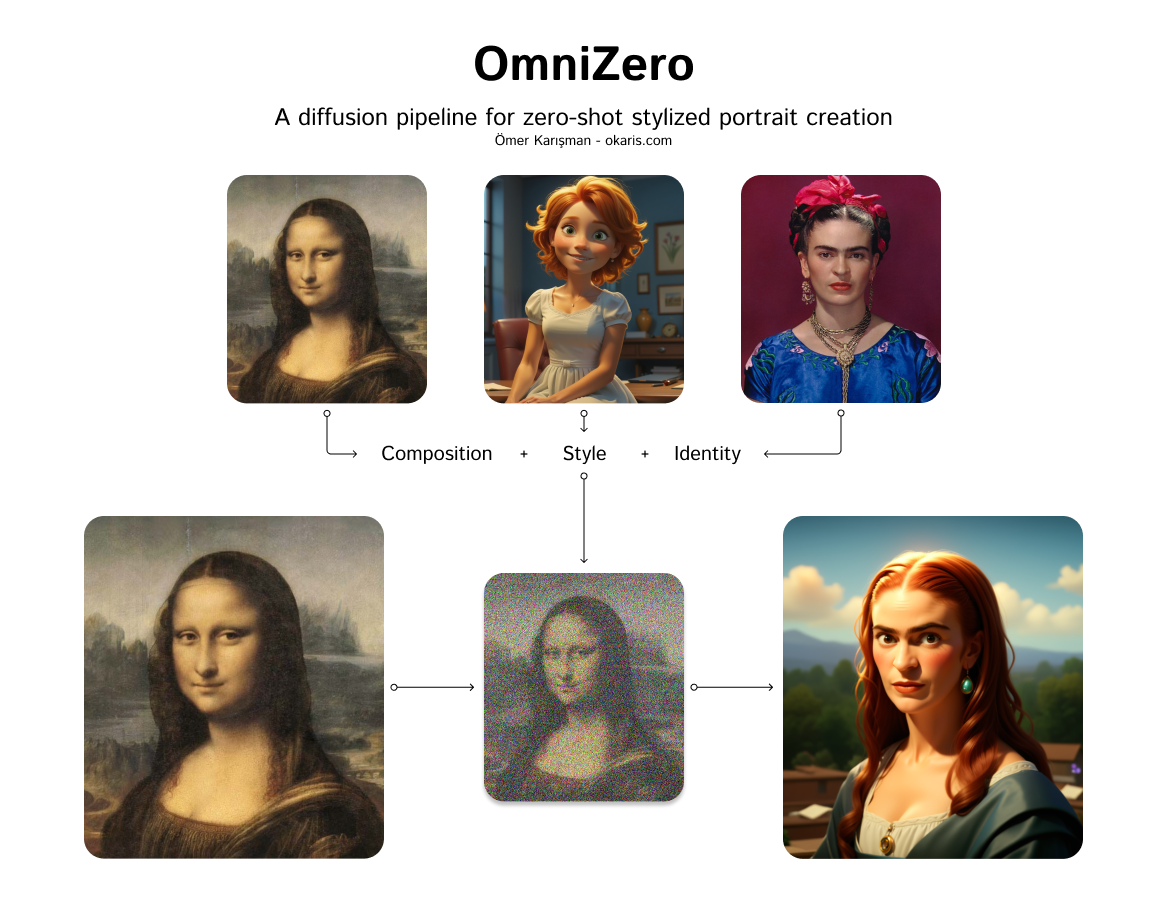

Omni-Zero: A diffusion pipeline for zero-shot stylized portrait creation.

Join the Granite community where you can find numerous recipe workbooks to help you get started with a wide variety of use cases using this model. https://github.com/ibm-granite-community

Cubiq's ComfyUI InstantID node running `instantid_basic.json` example

Ostris AI-Toolkit for Flux LoRA Training (DEPRECATED. Please use: ostris/flux-dev-lora-trainer)

🎨 Fill in masked parts of images with FLUX.1-schnell 🖌️

A wrapper model for captioning multiple images using GPT, Claude or Gemini, useful for lora training

UPDATE: new upscaling algorithm for a much improved image quality. Fermat.app open-source implementation of an efficient ControlNet 1.1 tile for high-quality upscales. Increase the creativity to encourage hallucination.

FLUX.1-Dev LoRA trainer via SimpleTuner (Work in Progress)