Explore

Featured models

anthropic / claude-3.5-sonnet

Anthropic's most intelligent language model to date, with a 200K token context window and image understanding (claude-3-5-sonnet-20241022)

minimax / video-01-director

Generate videos with specific camera movements

google / imagen-3-fast

A faster and cheaper Imagen 3 model, for when price or speed are more important than final image quality

google / imagen-3

Google's highest quality text-to-image model, capable of generating images with detail, rich lighting and beauty

deepseek-ai / deepseek-r1

A reasoning model trained with reinforcement learning, on par with OpenAI o1

tencent / hunyuan-video

A state-of-the-art text-to-video generation model capable of creating high-quality videos with realistic motion from text descriptions

playht / play-dialog

End-to-end AI speech model designed for natural-sounding conversational speech synthesis, with support for context-aware prosody, intonation, and emotional expression.

zsxkib / mmaudio

Add sound to video. An advanced AI model that synthesizes high-quality audio from video content, enabling seamless video-to-audio transformation

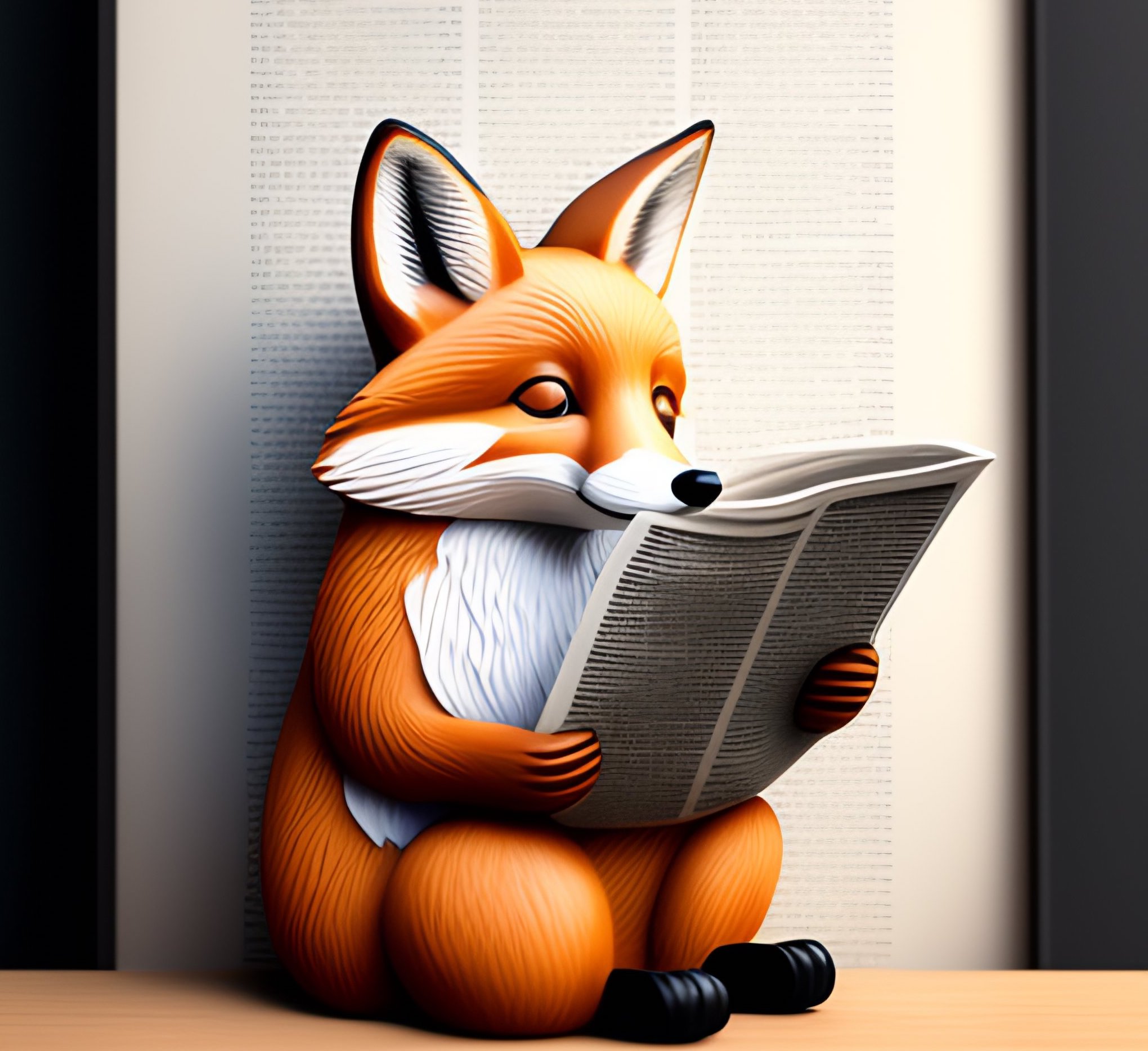

recraft-ai / recraft-v3

Recraft V3 (code-named red_panda) is a text-to-image model that can render long passages of text and generate images in a wide range of styles. As of today, it is SOTA in image generation, according to the Text-to-Image Benchmark by Artificial Analysis

I want to…

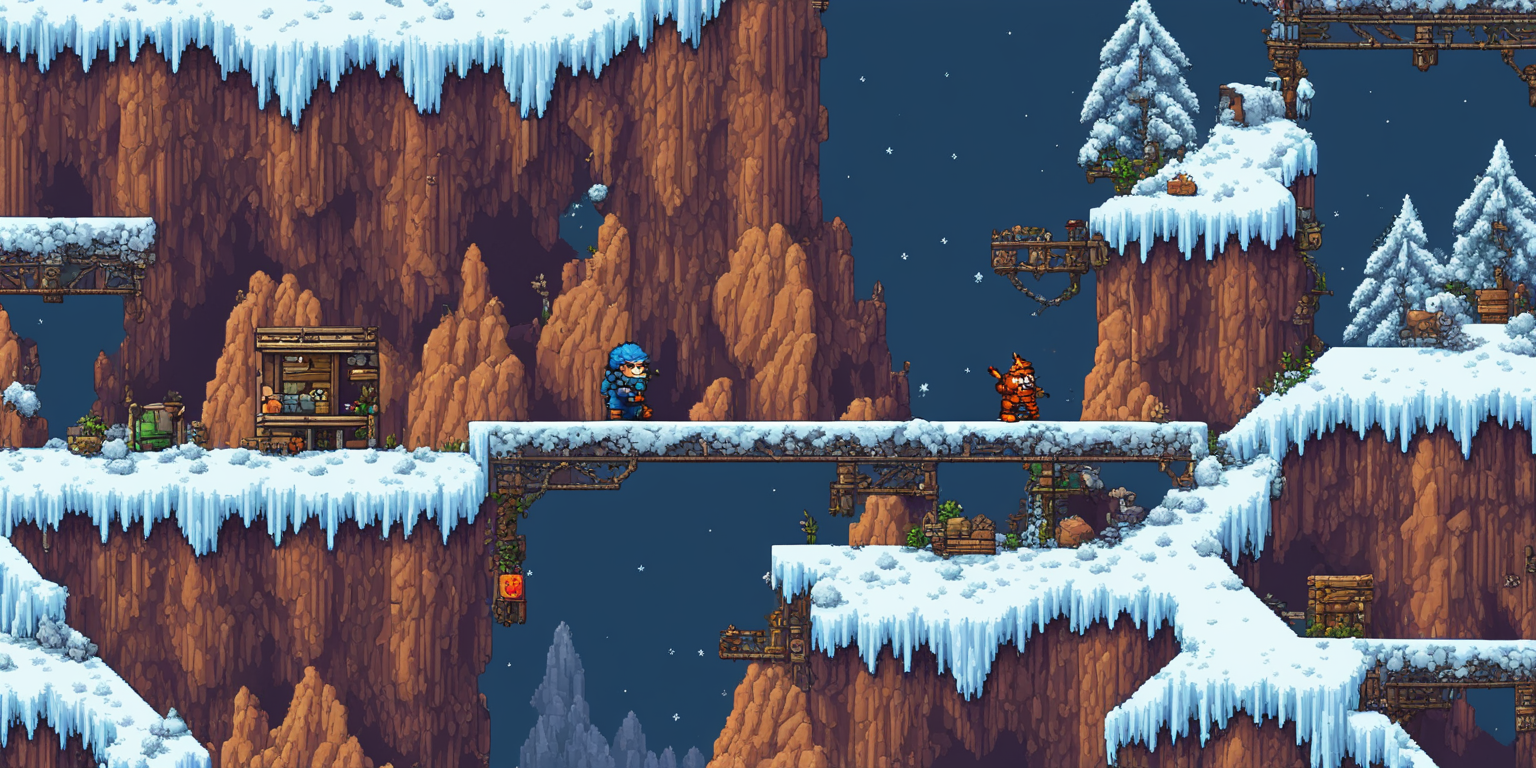

Generate images

Models that generate images from text prompts

Generate videos

Models that create and edit videos

Caption images

Models that generate text from images

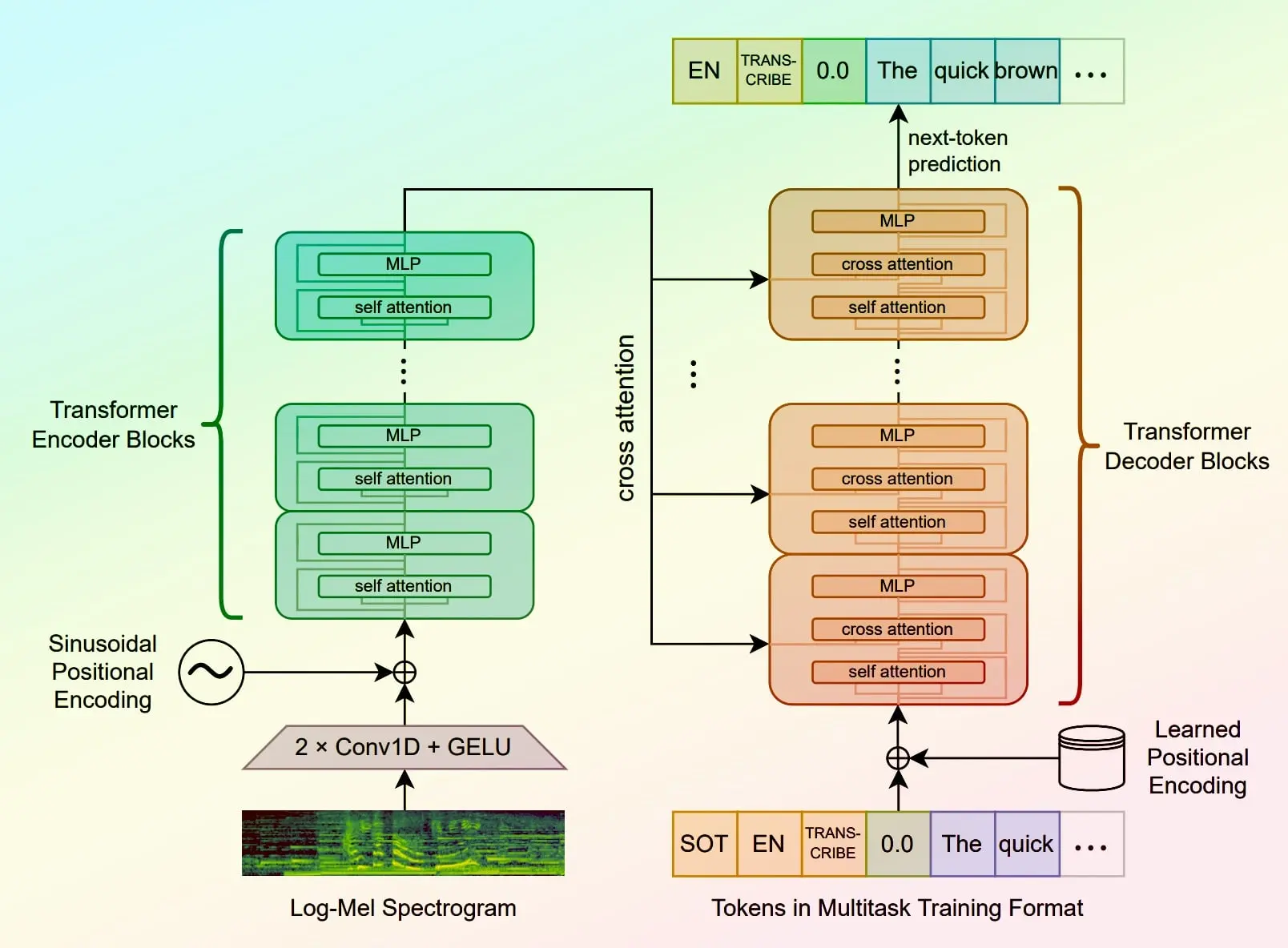

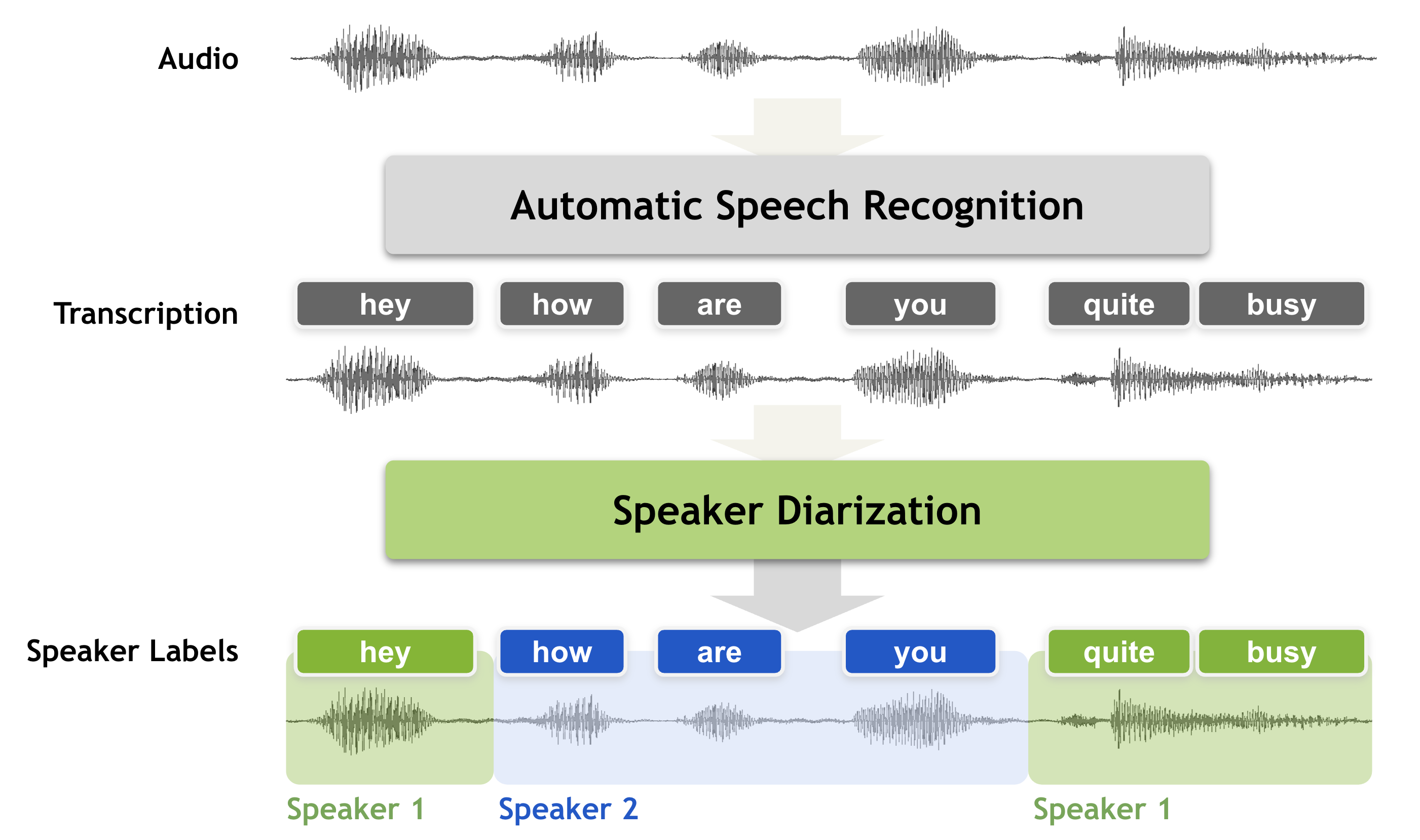

Transcribe speech

Models that convert speech to text

Generate text

Models that can understand and generate text

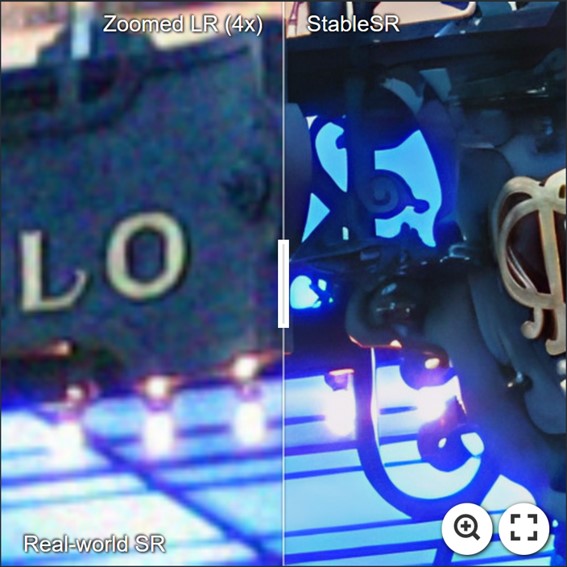

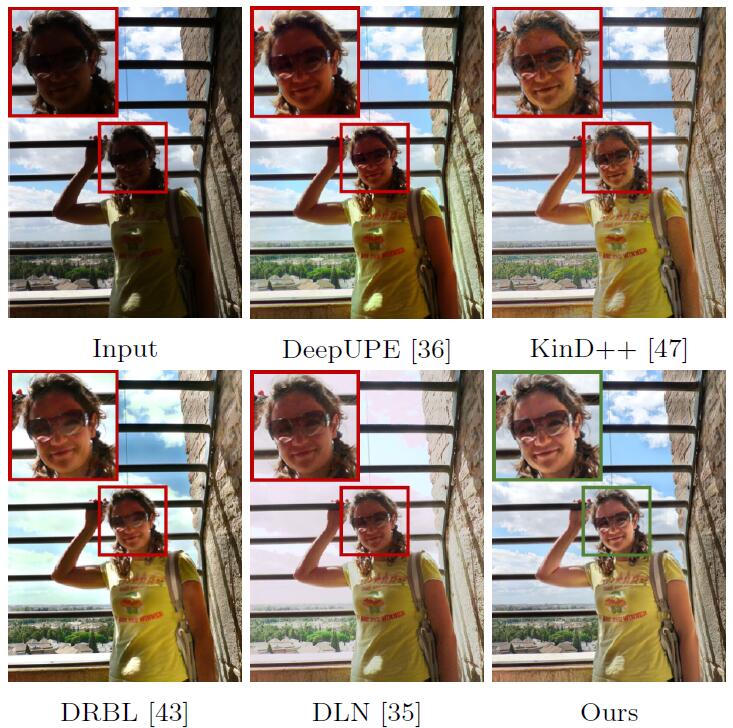

Upscale images

Upscaling models that create high-quality images from low-quality images

Use official models

Official models are always on, maintained, and have predictable pricing.

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Generate speech

Convert text to speech

Caption videos

Models that generate text from videos

Remove backgrounds

Models that remove backgrounds from images and videos

Use handy tools

Toolbelt-type models for videos and images.

Detect objects

Models that detect or segment objects in images and videos.

Generate music

Models to generate and modify music

Sing with voices

Voice-to-voice cloning and musical prosody

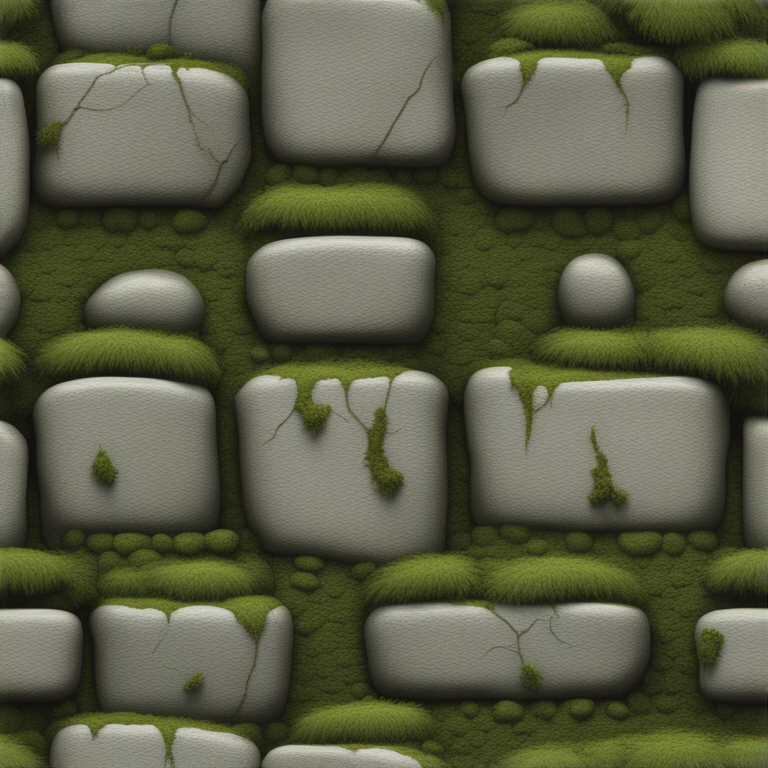

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Chat with images

Ask language models about images

Use a face to make images

Make realistic images of people instantly

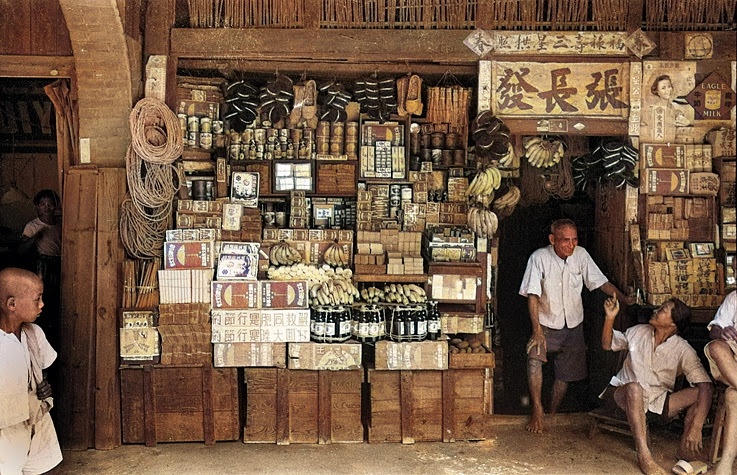

Extract text from images

Optical character recognition (OCR) and text extraction

Get embeddings

Models that generate embeddings from inputs

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Edit images

Tools for manipulating images.

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

Fine-Tuned Vision Transformer (ViT) for NSFW Image Classification

Return CLIP features for the clip-vit-large-patch14 model

A simple OCR model that can easily extract text from an image.

Real-ESRGAN with optional face correction and adjustable upscale

A text-to-image generative AI model that creates beautiful images

Latest models

2.7B param open source chat model trained on Glaive’s synthetic data generation platform

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction

SDXL fine-tuned on the work of my favorite architectural photographer, Iwan Baan

InstructMix Llama 3B is a language model fine-tuned on the InstructMix dataset.

Practical face restoration algorithm for *old photos* or *AI-generated faces* (for larger images)

A diffusion model that changes an input image according to a provided prompt

Efficient Diffusion Model for Image Super-resolution by Residual Shifting

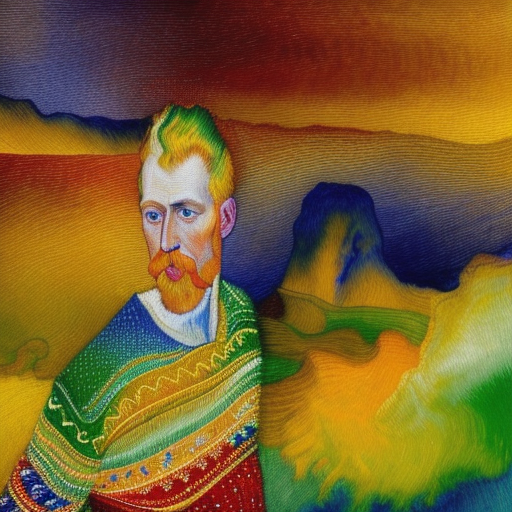

This is a tutorial example where I take the `nitrosocke/Ghibli-Diffusion` diffusion model and generate Van Gogh paintings.

Faster and cheaper Whisper-AI Large-v2 responses. JAX implementation of OpenAI's Whisper model for up to 15x speed-up (doesn't support TPU).

Deployment of Realistic vision v5.0 with xformers for fast inference

Adds Vietnamese punctuation and capitalization to raw text from an ASR system.

Segments an audio recording based on who is speaking (on A100)

The online demo of Bread (Low-light Image Enhancement via Breaking Down the Darkness). This demo enhances images with poor or irregular illumination and distracting noise.